(2.19)

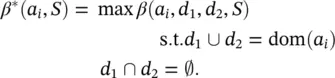

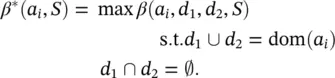

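Twoing criterion: The Gini index may encounter problems when the domain of the target attribute is relatively wide. In this case, it has been suggested to use a binary criterion called the twoing (tw) criterion. For a binary split that divides $dom(a_i)$ into two disjoint parts $dom_1$ and $dom_2$, this criterion is defined as

$$twoing(a_i, dom_1, dom_2, S) = 0.25 \cdot \frac{|\sigma_{a_i \in dom_1} S|}{|S|} \cdot \frac{|\sigma_{a_i \in dom_2} S|}{|S|} \cdot \left( \sum_{c_i \in dom(y)} \left| \frac{|\sigma_{a_i \in dom_1 \,\mathrm{AND}\, y = c_i} S|}{|\sigma_{a_i \in dom_1} S|} - \frac{|\sigma_{a_i \in dom_2 \,\mathrm{AND}\, y = c_i} S|}{|\sigma_{a_i \in dom_2} S|} \right| \right)^2 \qquad (2.20)$$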

When the target attribute is binary, the Gini and twoing criteria are equivalent. For multiclass problems, the twoing criterion prefers attributes with evenly divided splits.
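A minimal sketch of the twoing value, assuming the split is given directly as the target labels that fall into each of the two bags. The function name `twoing` and its signature are illustrative, not taken from any library.

```python
from collections import Counter
from typing import Hashable, Sequence


def twoing(left_labels: Sequence[Hashable], right_labels: Sequence[Hashable]) -> float:
    """Twoing value of a binary split, given the target labels in each bag (hypothetical helper)."""
    n_left, n_right = len(left_labels), len(right_labels)
    if n_left == 0 or n_right == 0:
        return 0.0  # a split with an empty bag separates nothing
    n = n_left + n_right
    left_counts, right_counts = Counter(left_labels), Counter(right_labels)
    classes = set(left_counts) | set(right_counts)
    # Sum over classes of |P(y = c | bag 1) - P(y = c | bag 2)|
    diff = sum(abs(left_counts[c] / n_left - right_counts[c] / n_right) for c in classes)
    # 0.25 * P(bag 1) * P(bag 2) * (sum of absolute differences)^2
    return 0.25 * (n_left / n) * (n_right / n) * diff ** 2
```

For a binary target, this value is exactly half the Gini gain of the same split, computed with the Gini index $1 - \sum_c p_c^2$. That proportionality is one way to see the equivalence between the two criteria.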

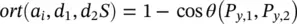

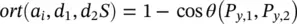

Orthogonality (ort) criterion: This binary criterion is defined as

$$ort(a_i, dom_1, dom_2, S) = 1 - \cos\theta(P_{y,1}, P_{y,2})$$

where $\theta(P_{y,1}, P_{y,2})$ is the angle between the two distribution vectors $P_{y,1}$ and $P_{y,2}$ of the target attribute y on the bags $\sigma_{a_i \in dom_1} S$ and $\sigma_{a_i \in dom_2} S$, respectively. It was shown that this criterion performs better than the information gain and the Gini index for specific problem constellations.
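The following is a minimal sketch of the orthogonality criterion. It assumes each bag is given as a non-empty list of target labels and builds the class-distribution vectors $P_{y,1}$ and $P_{y,2}$ over a shared class ordering. The function name is hypothetical.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def orthogonality(left_labels: Sequence[Hashable], right_labels: Sequence[Hashable]) -> float:
    """ort = 1 - cos(theta) between the class-distribution vectors of the two bags."""
    if not left_labels or not right_labels:
        raise ValueError("both bags must be non-empty")
    left_counts, right_counts = Counter(left_labels), Counter(right_labels)
    classes = list(set(left_counts) | set(right_counts))  # one fixed order for both vectors
    p1 = [left_counts[c] / len(left_labels) for c in classes]
    p2 = [right_counts[c] / len(right_labels) for c in classes]
    dot = sum(a * b for a, b in zip(p1, p2))
    norms = math.sqrt(sum(a * a for a in p1)) * math.sqrt(sum(b * b for b in p2))
    return 1.0 - dot / norms
```

Both vectors have non-negative entries, so the cosine lies in [0, 1]. The criterion is therefore 0 when the two bags have identical class distributions and 1 when they share no class at all.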

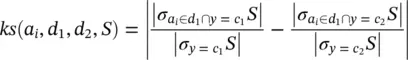

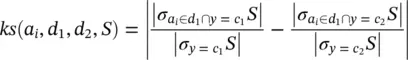

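Kolmogorov–Smirnov (K–S) criterion: Assuming a binary target attribute, namely $dom(y) = \{c_1, c_2\}$, the criterion is defined as

$$KS(a_i, dom_1, dom_2, S) = \left| \frac{|\sigma_{a_i \in dom_1 \,\mathrm{AND}\, y = c_1} S|}{|\sigma_{y = c_1} S|} - \frac{|\sigma_{a_i \in dom_1 \,\mathrm{AND}\, y = c_2} S|}{|\sigma_{y = c_2} S|} \right| \qquad (2.21)$$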

It has been suggested to extend this measure to handle target attributes with multiple classes and missing data values. The results indicate that the extended method outperforms the gain ratio criterion.
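Here is a minimal sketch of the binary K–S criterion. It assumes the split is given as the labels in the first bag ($a_i \in dom_1$) and in the second bag, and that both classes $c_1$ and $c_2$ occur in S. The helper name is hypothetical.

```python
from typing import Hashable, Sequence


def ks_criterion(left_labels: Sequence[Hashable], right_labels: Sequence[Hashable],
                 c1: Hashable, c2: Hashable) -> float:
    """|fraction of class c1 in bag 1 - fraction of class c2 in bag 1| (binary target)."""
    all_labels = list(left_labels) + list(right_labels)
    n_c1, n_c2 = all_labels.count(c1), all_labels.count(c2)
    if n_c1 == 0 or n_c2 == 0:
        raise ValueError("both classes must occur in S")
    left = list(left_labels)
    # Share of each class's instances that fall into the first bag (a_i in dom_1)
    return abs(left.count(c1) / n_c1 - left.count(c2) / n_c2)
```

For a numeric attribute split at a threshold v, with $dom_1 = \{a_i \le v\}$, the two fractions are the empirical class-conditional distribution functions evaluated at v. Their absolute difference is the Kolmogorov–Smirnov distance at that point, which is where the criterion gets its name.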

Stopping criteria: The tree splitting (growing phase) continues until a stopping criterion is triggered. The following conditions are common stopping rules: (i) all instances reaching the node belong to a single value of y; (ii) the maximum tree depth has been reached; (iii) the number of cases in the terminal node is less than the minimum number of cases for parent nodes; (iv) if the node were split, the number of cases in one or more child nodes would be less than the minimum number of cases for child nodes; and (v) the best splitting criterion value is not greater than a certain threshold.
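The five rules can be combined into a single check, sketched below. All names and default thresholds here are hypothetical. They are loosely analogous to, but not identical with, the `max_depth`, `min_samples_split`, `min_samples_leaf` and `min_impurity_decrease` parameters of scikit-learn's `DecisionTreeClassifier`.

```python
from dataclasses import dataclass
from typing import Hashable, Sequence


@dataclass
class StoppingRules:
    max_depth: int = 10           # rule (ii)
    min_parent_cases: int = 2     # rule (iii)
    min_child_cases: int = 1      # rule (iv)
    min_split_score: float = 0.0  # rule (v): threshold on the best splitting criterion


def should_stop(labels: Sequence[Hashable], depth: int, child_sizes: Sequence[int],
                best_score: float, rules: StoppingRules) -> bool:
    """Return True if any of the stopping rules (i)-(v) fires for this node."""
    if len(set(labels)) <= 1:
        return True  # (i) all instances share one value of y
    if depth >= rules.max_depth:
        return True  # (ii) maximum depth reached
    if len(labels) < rules.min_parent_cases:
        return True  # (iii) too few cases to act as a parent
    if any(size < rules.min_child_cases for size in child_sizes):
        return True  # (iv) the best split would create an undersized child
    if best_score <= rules.min_split_score:
        return True  # (v) best split is not good enough
    return False
```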

Pruning methods: Employing tight stopping criteria tends to create small, underfitted decision trees. On the other hand, loose stopping criteria tend to generate large decision trees that overfit the training set. Pruning methods were developed to resolve this dilemma: a large tree is grown with loose stopping criteria and then cut back into a smaller one. There are various techniques for pruning decision trees. Most of them perform a top-down or bottom-up traversal of the nodes, and a node is pruned if this operation improves a certain criterion. Next, we describe the most popular techniques.

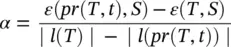

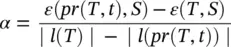

Cost-complexity pruning (pr): This method proceeds in two stages. In the first stage, a sequence of trees $T_0, T_1, \ldots, T_k$ is built on the training data, where $T_0$ is the original tree before pruning and $T_k$ is the root tree. In the second stage, one of these trees is chosen as the pruned tree, based on an estimate of its generalization error. The tree $T_{i+1}$ is obtained by replacing one or more of the subtrees in the predecessor tree $T_i$ with suitable leaves. The subtrees that are pruned are those that produce the lowest increase in apparent error rate per pruned leaf ($\alpha$):

$$\alpha = \frac{\varepsilon(pr(T,t),S) - \varepsilon(T,S)}{|l(T)| - |l(pr(T,t))|} \qquad (2.22)$$

where $\varepsilon(T,S)$ indicates the error rate of the tree T over the sample S, and $|l(T)|$ denotes the number of leaves in T. The notation $pr(T,t)$ denotes the tree obtained by replacing the node t in T with a suitable leaf. In the second stage, the generalization error of each pruned tree $T_0, T_1, \ldots, T_k$ is estimated, and the best pruned tree is selected. If the given dataset is large enough, it is suggested to split it into a training set and a pruning set: the trees are constructed using the training set and evaluated on the pruning set. If the dataset is not large enough, cross-validation is suggested instead, despite its higher computational cost.
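The first stage can be sketched as repeated "weakest link" pruning. The sketch assumes a hypothetical `Node` type that stores, for each node, the number of training errors it would make if turned into a leaf, and that every internal node has at least two children. Replacing the subtree at t with a leaf leaves all other leaves unchanged. The numerator of Eq. (2.22) therefore reduces to (leaf errors at t minus subtree errors at t) / |S|, and the denominator reduces to (leaves under t) minus 1.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Node:
    leaf_errors: int  # training errors if this node is turned into a leaf (hypothetical field)
    children: List["Node"] = field(default_factory=list)


def n_leaves(node: Node) -> int:
    return 1 if not node.children else sum(n_leaves(c) for c in node.children)


def subtree_errors(node: Node) -> int:
    return node.leaf_errors if not node.children else sum(subtree_errors(c) for c in node.children)


def alpha(node: Node, n_samples: int) -> float:
    """Eq. (2.22): increase in apparent error rate per pruned leaf if `node` becomes a leaf."""
    extra_error = (node.leaf_errors - subtree_errors(node)) / n_samples
    return extra_error / (n_leaves(node) - 1)


def internal_nodes(node: Node) -> Iterator[Node]:
    if node.children:
        yield node
        for child in node.children:
            yield from internal_nodes(child)


def pruning_sequence(root: Node, n_samples: int) -> List[int]:
    """Prune the weakest link in place until only the root is left; return |l(T_i)| per step."""
    sizes = [n_leaves(root)]
    while root.children:
        weakest = min(internal_nodes(root), key=lambda t: alpha(t, n_samples))
        weakest.children = []  # replace the subtree with a leaf
        sizes.append(n_leaves(root))
    return sizes
```

For simplicity, this sketch prunes one subtree per step and breaks ties by traversal order. It covers only the first stage: choosing among $T_0, \ldots, T_k$ on a pruning set or by cross-validation is left out. scikit-learn exposes a related procedure through the `ccp_alpha` parameter and the `cost_complexity_pruning_path` method of `DecisionTreeClassifier`.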

Reduced error pruning: While traversing the internal nodes from the bottom to the top, the procedure checks, for each internal node, whether replacing it with the most frequent class reduces the tree's accuracy on a separate pruning set. If not, the node is pruned. The procedure continues until any further pruning would decrease the accuracy. It can be shown that this procedure ends with the smallest, most accurate subtree with respect to the given pruning set.
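Below is a minimal sketch of reduced error pruning as a single bottom-up (post-order) pass. The tree representation, the `Node` fields and the helper names are all hypothetical. Attribute values are assumed to be categorical, and an unseen value at prediction time is assumed to fall back to the current node's majority class. Ties count as "not reduced", so a node is pruned when accuracy stays the same.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Mapping, Optional


@dataclass
class Node:
    majority: Any                   # most frequent training class at this node
    feature: Optional[str] = None   # attribute tested here (None for a leaf)
    children: Dict[Any, "Node"] = field(default_factory=dict)  # attribute value -> subtree


def predict(node: Node, x: Mapping[str, Any]) -> Any:
    while node.children:
        child = node.children.get(x[node.feature])
        if child is None:  # unseen attribute value: fall back to this node's majority class
            break
        node = child
    return node.majority


def accuracy(root: Node, X: List[Mapping[str, Any]], y: List[Any]) -> float:
    return sum(predict(root, xi) == yi for xi, yi in zip(X, y)) / len(y)


def reduced_error_prune(root: Node, X_prune: List[Mapping[str, Any]], y_prune: List[Any]) -> Node:
    """Turn each internal node into a leaf unless that lowers accuracy on the pruning set."""
    def visit(node: Node) -> None:
        for child in node.children.values():
            visit(child)  # children first, so the traversal is bottom-up
        if node.children:
            before = accuracy(root, X_prune, y_prune)
            saved, node.children = node.children, {}
            if accuracy(root, X_prune, y_prune) < before:
                node.children = saved  # pruning reduced accuracy: undo it
    visit(root)
    return root
```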

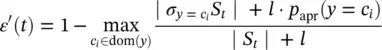

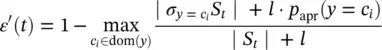

Minimum-error pruning (MEP): This method performs a bottom-up traversal of the internal nodes. At each node, it compares the m-probability error rate estimate with and without pruning. The m-probability error rate estimate is a correction to the simple probability estimate based on frequencies. If $S_t$ denotes the instances that have reached node t, then the error rate obtained if this node were pruned is

$$\varepsilon'(t) = 1 - \max_{c_i \in dom(y)} \frac{|\sigma_{y = c_i} S_t| + m \cdot p_{apr}(y = c_i)}{|S_t| + m} \qquad (2.23)$$

where $p_{apr}(y = c_i)$ is the a priori probability of y taking the value $c_i$, and m denotes the weight given to the a priori probability. A node is pruned if doing so does not increase the m-probability error rate.
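The sketch below evaluates Eq. (2.23) from class counts and applies the pruning test at a single node. It assumes that the "without pruning" error is the average of the children's m-probability errors, weighted by the share of instances each child receives, with the children treated as leaves. In a full bottom-up pass, an unpruned child would contribute its own backed-up error instead. Function names are hypothetical, and `priors` must list every class in dom(y).

```python
from typing import Dict, Hashable, Sequence


def m_error(class_counts: Dict[Hashable, int], priors: Dict[Hashable, float], m: float) -> float:
    """Eq. (2.23): m-probability error rate of a node turned into a leaf."""
    n = sum(class_counts.values())
    return 1.0 - max((class_counts.get(c, 0) + m * p) / (n + m) for c, p in priors.items())


def mep_should_prune(node_counts: Dict[Hashable, int],
                     children_counts: Sequence[Dict[Hashable, int]],
                     priors: Dict[Hashable, float], m: float) -> bool:
    """Prune if the leaf error does not exceed the instance-weighted error of the children."""
    n = sum(node_counts.values())
    static = m_error(node_counts, priors, m)
    backed_up = sum(sum(cc.values()) / n * m_error(cc, priors, m) for cc in children_counts)
    return static <= backed_up
```

With m = 0, the estimate reduces to the plain frequency-based error rate. Larger values of m pull the class probabilities toward the priors.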

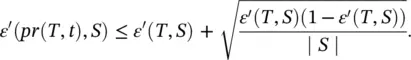

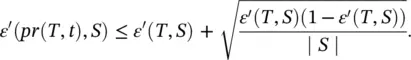

Pessimistic pruning: The basic idea is that the error rate estimated on the training set is not reliable enough. Instead, a more realistic measure, known as the continuity correction for the binomial distribution, should be used: $\varepsilon'(T,S) = \varepsilon(T,S) + \frac{|l(T)|}{2 \cdot |S|}$. However, this correction still produces an optimistic error rate. Consequently, it was suggested to prune an internal node t if its error rate is within one standard error of a reference tree, namely

$$\varepsilon'(pr(T,t),S) \le \varepsilon'(T,S) + \sqrt{\frac{\varepsilon'(T,S) \cdot (1 - \varepsilon'(T,S))}{|S|}} \qquad (2.24)$$

This condition is based on the statistical confidence interval for proportions. It is usually applied with T referring to the subtree whose root is the internal node t, and S denoting the portion of the training set that reaches that node. The pessimistic pruning procedure traverses the internal nodes top-down. If an internal node is pruned, all its descendants are removed from the pruning process, which makes pruning relatively fast.
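A minimal sketch of the pruning test at one internal node t follows, using error counts on the training instances that reach t. Here T is the subtree rooted at t, and $pr(T,t)$ is a single leaf. The function names are hypothetical. In a top-down traversal, a node for which `can_prune` returns True would be replaced by a leaf, and its descendants would not be examined.

```python
import math


def corrected_error(errors: int, n_leaves: int, n: int) -> float:
    """Continuity-corrected error rate: eps(T, S) + |l(T)| / (2 |S|)."""
    return errors / n + n_leaves / (2 * n)


def can_prune(leaf_errors: int, subtree_errors: int, subtree_leaves: int, n: int) -> bool:
    """Prune t if the corrected leaf error is within one standard error of the subtree's."""
    e_tree = corrected_error(subtree_errors, subtree_leaves, n)
    std_err = math.sqrt(max(0.0, e_tree * (1.0 - e_tree)) / n)  # guard against e_tree > 1
    e_leaf = corrected_error(leaf_errors, 1, n)  # pr(T, t) is a single leaf
    return e_leaf <= e_tree + std_err
```

For example, with 100 instances reaching t and a 4-leaf subtree making 10 errors, the corrected subtree error is 0.12 and the bound is about 0.152. A leaf making 14 errors (corrected error 0.145) would therefore be accepted, while one making 20 errors would not.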
