Chris McCain - Mastering VMware® Infrastructure3

Excerpt from Mastering VMware® Infrastructure3 by Chris McCain.

- Title: Mastering VMware® Infrastructure3

- Author: Chris McCain

- Publisher: Wiley Publishing, Inc.

- Genre: Software, Operating Systems, and Networks

- Year: 2008

- City: Indianapolis

- ISBN: 978-0-470-18313-7

- Language: English


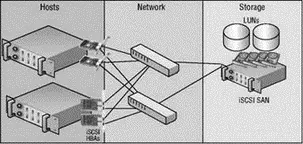

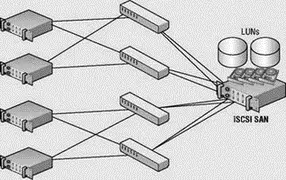

Figure 4.19 An iSCSI SAN includes an overall architecture similar to fibre channel, but the individual components differ in their communication mechanisms.

When deploying an iSCSI storage network, you'll find that adhering to the following rules can help mitigate performance degradation or security concerns:

♦ Always deploy iSCSI storage on a dedicated network.

♦ Configure all nodes on the storage network with static IP addresses.

♦ Configure the network adapters to use full-duplex gigabit communication; autonegotiation is recommended.

♦ Avoid funneling storage requests from multiple servers into a single link between the network switch and the storage device.

Deploying a dedicated iSCSI storage network reduces network bandwidth contention between the storage traffic and other common network traffic types such as e-mail, Internet, and file transfer. A dedicated network also offers administrators the luxury of isolating the SCSI communication protocol from "prying eyes" that have no legitimate need to access the data on the storage device.

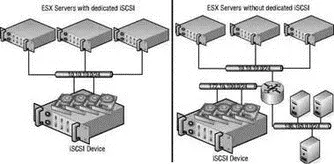

iSCSI storage deployments should always utilize dedicated storage networks to minimize contention and increase security. Achieving this goal is a matter of implementing a dedicated switch or switches to isolate the storage traffic from the rest of the network. Figure 4.20 shows the difference between a dedicated iSCSI storage network and one that is integrated with the other network segments.

If a dedicated physical network is not possible, using a virtual local area network (VLAN) will segregate the traffic to ensure storage traffic security. Figure 4.21 shows iSCSI implemented over a VLAN to achieve better security. However, this type of configuration still forces the iSCSI communication to compete with other types of network traffic.

Figure 4.20 iSCSI should have a dedicated and isolated network.

Figure 4.21 iSCSI communication traffic can be isolated from other network traffic by using VLANs.

Real World Scenario

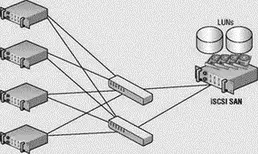

A common deployment error with iSCSI storage networks is the failure to provide enough connectivity between the Ethernet switches and the storage device to adequately handle the traffic requests from the ESX Server hosts. In the sample architecture shown here, four ESX Server hosts are configured with redundant connections to two Ethernet switches, which each have a connection to the iSCSI storage device. At first glance, it looks as if the infrastructure has been designed to support a redundant storage communication strategy. And perhaps it has. But what it has not done is maximize the efficiency of the storage traffic.

If each link between the ESX Server hosts and the Ethernet switches is a 1Gbps link, the hosts have a total storage bandwidth of 8Gbps, or 4Gbps per Ethernet switch. However, the connection between the Ethernet switches and the iSCSI storage device consists of a single 1Gbps link per switch. If each host maximizes the throughput from host to switch, the bandwidth needs will exceed the capacity of the switch-to-storage link, and packets will be dropped. Because TCP is a reliable transmission protocol, the dropped packets are re-sent until they reach their destination. The new data, coupled with the persistent retransmission of dropped packets, consumes more and more resources and strains the communication, degrading server performance.
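The arithmetic in this scenario can be sketched in a few lines of Python. This is only an illustration, assuming every link runs at 1 gigabit per second and that each host has one link to each switch; the function name `oversubscription` and its parameters are hypothetical and are not part of any VMware tool.

```python
def oversubscription(hosts, host_links_per_switch, uplinks_per_switch, link_gbps=1.0):
    """Return (ingress, egress, ratio) for one Ethernet switch.

    ingress: bandwidth the hosts can push into the switch, in Gbps
    egress:  bandwidth from the switch to the storage device, in Gbps
    ratio:   ingress divided by egress (values above 1 mean a bottleneck)
    """
    ingress = hosts * host_links_per_switch * link_gbps
    egress = uplinks_per_switch * link_gbps
    return ingress, egress, ingress / egress


# Four ESX Server hosts, one link from each host to each switch,
# and a single link from each switch to the iSCSI storage device.
ingress, egress, ratio = oversubscription(
    hosts=4, host_links_per_switch=1, uplinks_per_switch=1
)
print(f"per switch: {ingress:g} Gbps in, {egress:g} Gbps out, ratio {ratio:g}:1")
```

A ratio well above 1:1 does not guarantee dropped packets, because hosts rarely saturate their links at the same moment, but it shows how far the switch-to-storage link can fall short under peak load.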

To protect against funneling too much data to the switch-to-storage link, the iSCSI storage network should be configured with multiple available links between the switches and the storage device. The image shown here represents an iSCSI storage network configuration that promotes redundancy and communication efficiency by increasing the available bandwidth between the switches and the storage device. This configuration reduces resource usage because fewer packets are dropped and fewer retransmissions are needed.
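As a rough planning aid, the number of switch-to-storage links needed to stay within a chosen oversubscription ratio could be estimated as follows. This is a minimal sketch under stated assumptions: all links have the same speed, the storage device has enough ports, and traffic is spread evenly across the links, which depends on the multipathing configuration. The function `uplinks_needed` and the target ratios are hypothetical.

```python
import math


def uplinks_needed(hosts, host_links_per_switch, target_ratio, link_gbps=1.0):
    """Smallest number of switch-to-storage links that keeps the
    host-to-switch : switch-to-storage bandwidth ratio at or below target_ratio."""
    ingress = hosts * host_links_per_switch * link_gbps
    return math.ceil(ingress / (target_ratio * link_gbps))


# Four hosts with one link each into the switch.
no_bottleneck = uplinks_needed(hosts=4, host_links_per_switch=1, target_ratio=1.0)
two_to_one = uplinks_needed(hosts=4, host_links_per_switch=1, target_ratio=2.0)
print(no_bottleneck, two_to_one)
```

Choosing a target ratio is a design decision: 1:1 removes the bottleneck entirely, while a higher ratio trades some peak throughput for fewer ports and cables.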

To learn more about iSCSI, visit the Storage Networking Industry Association website at http://www.snia.org/tech_activities/ip_storage/iscsi.

Configuring ESX for iSCSI Storage

I can't go into the details of configuring the iSCSI storage side of things because each product has nuances that do not cross vendor boundaries, and companies don't typically carry an iSCSI SAN from each potential vendor. On the bright side, what I can and most certainly will cover in great detail is how to configure an ESX Server host to connect to an iSCSI storage device using both hardware and software iSCSI initiation.

As noted in the previous section, VMware is limited in its support for hardware device compatibility. As with fibre channel, you should always check VMware's website to review the latest SAN compatibility guide before purchasing any new storage devices. While software-initiated iSCSI has maintained full support since the release of ESX 3.0, hardware initiation with iSCSI devices did not garner full support until the ESX 3.0.1 release. The prior release, ESX 3.0, provided only experimental support for hardware-initiated iSCSI.

Each of the manufacturers listed here provides an iSCSI storage solution that has been tested and approved for use by VMware:

♦ 3PAR: http://www.3par.com

♦ Compellent: http://www.compellent.com

♦ Dell: http://www.dell.com

♦ EMC: http://www.emc.com

♦ EqualLogic: http://www.equallogic.com

♦ Fujitsu Siemens: http://www.fujitsu-siemens.com

♦ HP: http://www.hp.com

♦ IBM: http://www.ibm.com

♦ LeftHand Networks: http://www.lefthandnetworks.com

♦ Network Appliance (NetApp): http://www.netapp.com

♦ Sun Microsystems: http://www.sun.com

An ESX Server host can initiate communication with an iSCSI storage device by using a hardware device with dedicated iSCSI technology built into the device, or by using a software-based initiator that utilizes standard Ethernet hardware and is managed like normal network communication. Using a dedicated iSCSI HBA that understands the TCP/IP stack and the iSCSI communication protocol provides an advantage over software initiation. Hardware initiation eliminates some processing overhead in the Service Console and VMkernel by offloading the TCP/IP stack to the hardware device. This technology is often referred to as a TCP offload engine (TOE). When you use an iSCSI HBA for hardware initiation, the VMkernel needs only the drivers for the HBA, and the rest is handled by the device.

For best performance, hardware-based iSCSI initiation is the appropriate deployment. After you boot the server, the iSCSI HBA will display all its information in the Storage Adapters node of the Configuration tab, as shown in Figure 4.22. By default, as shown in Figure 4.23, iSCSI HBA devices will assign an IQN in the BIOS of the iSCSI HBA. Configuring hardware iSCSI initiation with an HBA installed on the host is very similar to configuring a fibre channel HBA; the device will appear in the Storage Adapters node of the Configuration tab. The vmhba numbers for fibre channel and iSCSI HBAs are assigned sequentially. For example, if an ESX Server host includes two fibre channel HBAs labeled vmhba1 and vmhba2, adding two iSCSI HBAs will result in labels of vmhba3 and vmhba4. The software iSCSI adapter in ESX Server 3.5 will always be labeled as vmhba32. You can then configure the adapter(s) to support the storage infrastructure.
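The labeling rule in the example above can be modeled with a short Python sketch. It only illustrates the numbering described in the text and does not query a real host; the adapter names, the `label_hbas` function, and the starting index of 1 are assumptions made for the example.

```python
# Label the text gives for the software iSCSI adapter in ESX Server 3.5.
SOFTWARE_ISCSI_LABEL = "vmhba32"


def label_hbas(adapters, first_index=1):
    """Assign sequential vmhba labels to hardware HBAs in the order given."""
    return {
        name: f"vmhba{first_index + offset}"
        for offset, name in enumerate(adapters)
    }


# Two fibre channel HBAs followed by two newly added iSCSI HBAs (hypothetical names).
labels = label_hbas(["fc-hba-a", "fc-hba-b", "iscsi-hba-a", "iscsi-hba-b"])
labels["software-iscsi"] = SOFTWARE_ISCSI_LABEL

for name, label in labels.items():
    print(f"{label}: {name}")
```

Here the two iSCSI HBAs receive vmhba3 and vmhba4, matching the example in the text, while the software initiator keeps its fixed label regardless of how many hardware adapters are present.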
