Chris McCain - Mastering VMware® Infrastructure3

Здесь есть возможность читать онлайн «Chris McCain - Mastering VMware® Infrastructure3» — ознакомительный отрывок электронной книги совершенно бесплатно, а после прочтения отрывка купить полную версию. В некоторых случаях можно слушать аудио, скачать через торрент в формате fb2 и присутствует краткое содержание. Город: Indianapolis, Год выпуска: 2008, ISBN: 2008, Издательство: WILEY Wiley Publishing, Inc., Жанр: Программы, ОС и Сети, на английском языке. Описание произведения, (предисловие) а так же отзывы посетителей доступны на портале библиотеки ЛибКат.

- Название:Mastering VMware® Infrastructure3

- Автор:

- Издательство:WILEY Wiley Publishing, Inc.

- Жанр:

- Год:2008

- Город:Indianapolis

- ISBN:978-0-470-18313-7

- Рейтинг книги:5 / 5. Голосов: 1

-

Избранное:Добавить в избранное

- Отзывы:

-

Ваша оценка:

- 100

- 1

- 2

- 3

- 4

- 5

Mastering VMware® Infrastructure3: краткое содержание, описание и аннотация

Предлагаем к чтению аннотацию, описание, краткое содержание или предисловие (зависит от того, что написал сам автор книги «Mastering VMware® Infrastructure3»). Если вы не нашли необходимую информацию о книге — напишите в комментариях, мы постараемся отыскать её.

Mastering VMware® Infrastructure3 — читать онлайн ознакомительный отрывок

Ниже представлен текст книги, разбитый по страницам. Система сохранения места последней прочитанной страницы, позволяет с удобством читать онлайн бесплатно книгу «Mastering VMware® Infrastructure3», без необходимости каждый раз заново искать на чём Вы остановились. Поставьте закладку, и сможете в любой момент перейти на страницу, на которой закончили чтение.

Интервал:

Закладка:

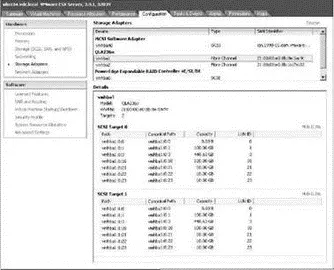

Figure 4.14An ESX Server configured through Vir-tualCenter with two QLogic 236x fibre channel HBAs and multiple SCSI targets or storage processors (SPs) in the storage device.

Once fibre channel storage is presented to a server and the server recognizes the pools of storage, then the administrator can create datastores. A datastore is a storage pool on an ESX Server host that can be a local disk, fibre channel LUN, iSCSI LUN, or NFS share. A datastore provides a location for placing virtual machine files, ISO images, and templates.

For the VI3 administrator, the configuration of datastores on fibre channel storage is straightforward. It is the LUN masking, LUN design, and LUN management that incur significant administrative overhead (or more to the point, brainpower!). For VI3 administrators who are not responsible for SAN management and configuration, it is essential to work closely with the storage personnel to ensure performance and security of the storage pools used by the ESX Server hosts.

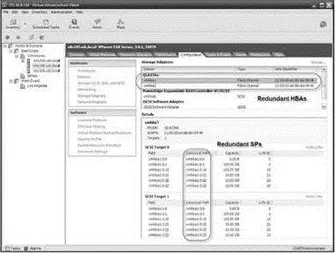

Later in this chapter we'll discuss LUN design in greater detail, but for now let's assume that LUNs have been created and masking has been performed. With those assumptions in place, the work required by the VI3 administrator is quick and easy. Figure 4.15 identifies five LUNs that are available to silo105.vdc.local through its redundant connection to the storage device. The ESX Server silo105.vdc.local has two HBAs connecting to a storage device, with two SPs creating redundant paths to the available LUNs. Although there are six LUNs in the targets list, the LUN with ID 0 is disregarded since it is not available to the ESX Server for storage.

A portion of the ESX Server boot process includes LUN discovery. An ESX Server, at boot-up and by default, will attempt to enumerate LUNs with LUN IDs between 1 and 255.

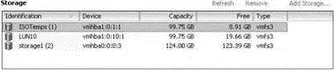

Even though silo105.vdc.local is presented with five LUNs, it does not mean that all five LUNs are currently being used to store data for the server. Figure 4.16 shows that silo105.vdc.local has three datastores, only two of which are LUNs presented by the fibre channel storage device. With two fibre channel SAN LUNs already in use, silo105.vdc.local has three more LUNs available when needed. Later in this chapter you'll learn how to use the LUNs as VMFS volumes.

Figure 4.15An ESX Server discovers its available LUNs and displays them under each available SCSI target. Here, five LUNs are available to the ESX Server for storage.

Figure 4.16An ESX Server host with a local datastore named storage1 (2) and two datastores ISOTemps (1) and LUN10 on a fibre channel storage device.

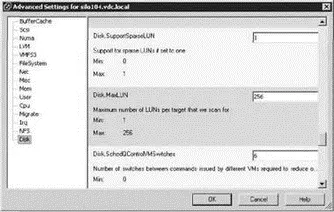

When an ESX Server host is powered on, it will process the first 256 LUNs (LUN 0 through LUN 255) on the storage devices to which it is given access. ESX will perform this enumeration at every boot, even if many of the LUNs have been masked out from the storage processor side. You can configure individual ESX Server hosts not to scan all the way up to LUN 255 by editing the Disk.MaxLUN configuration setting. Figure 4.17 shows the default configuration of the Disk.MaxLUN value that results in accessibility to the first 256 LUNs.

Despite the potential benefit of performing LUN masking at the ESX Server (to speed up the boot process), the work necessary to consistently manage LUNs on each ESX Server may offset that benefit. 1 suggest that you perform LUN masking at the SAN.

To change the Disk.MaxLUN setting, perform the following steps:

1. Use the VI client to connect to a VirtualCenter Server or an individual ESX Server host.

2. Select the hostname in the inventory tree and select the Configuration tab in the details pane on the right.

3. In the Software section, click the Advanced Settings link.

4. In the Advanced Settings for window, select the Disk option from the selection tree.

5. In the Disk.MaxLUN text box, enter the desired integer value for the number of LUNs to scan.

Figure 4.17Altering the Disk.MaxLUN value can result in a faster boot or rescan process for an ESX Server host. However, it may also require attention when new LUNs must be made available that exceed the custom configuration.

You should alter the Disk.MaxLUN parameter only when you are certain that LUN IDs will never exceed the custom value. Otherwise, though a performance benefit might result, you will have to revisit the setting each time available LUN IDs must exceed the custom value.

Although LUN masking is most commonly performed at the storage processor, as it should be, it is also possible to configure LUN masking on each individual ESX Server host to speed up the boot process.

Let's take an example where an administrator configures LUN masking at the storage processor. Once the masking at the storage processor is complete, the LUNs that have been presented to the hosts are the ones numbered 117 through 127. However, since the default configuration for ESX Server is set to enumerate the first 256 LUNs by default, it will move through each potential LUN even if the storage processor is preventing the LUN from being seen. In an effort to speed up the boot process, an ESX Server administrator can perform LUN masking at the server. In this example, if the administrator were to mask LUN 1 through LUN 116 and LUN 128 through LUN 256, then the server would only be enumerating the LUNs that it is allowed to see and, as a result, would boot quicker. To enable LUN masking on an ESX Server, you must edit the Disk.MaskLUN option (which you access by clicking the Advanced Settings link on the Configuration tab). The Disk.MaskLUN text box requires this format:

: : ;

For example, to mask the LUNs from the previous example (1 through 116 and 128 through 256) that are accessible through the first HBA and two different storage processors, you'd enter the following in the Disk.MaskLUN text box entry:

vmhba1:0:1-116,128-256;vmhba1:1:1-116,128-256;

The downside to configuring LUN masking on the ESX Server is the administrative overhead involved when a new LUN is presented to the server or servers. To continue with the previous example, if the VI3 administrator requests five new LUNs and the SAN administrator provisions LUNs with LUN IDs of 136 through 140, the VI3 administrator will have to edit all of the local masking configurations on each ESX Server host to read as follows:

Vmhba1:0:1-116,128-135,141-254;vmhba1:1:1-116,128-135,141-256;

In theory, LUN masking on each ESX Server host sounds like it could be a benefit. But in practice, masking LUNs at the ESX Server in an attempt to speed up the boot process is not worth the effort. An ESX Server host should not require frequent reboots, and therefore the effect of masking LUNs on each server would seldom be felt. Additional administrative effort would be needed since each host would have to be revisited every time new LUNs are presented to the server.

Be sure that storage administrators do not carve LUNs for an ESX Server that have ID numbers greater than 255. ESX hosts have a maximum capability of 256 LUNs, beginning with ID 1 and on through ID 255. Clicking the Rescan link located in the Storage Adapters node of the Configuration tab on a host will force the host to identify new LUNs or new VMFS volumes, with the exception that any LUNs with IDs greater than 255 will not be discoverable by an ESX host.

Читать дальшеИнтервал:

Закладка:

Похожие книги на «Mastering VMware® Infrastructure3»

Представляем Вашему вниманию похожие книги на «Mastering VMware® Infrastructure3» списком для выбора. Мы отобрали схожую по названию и смыслу литературу в надежде предоставить читателям больше вариантов отыскать новые, интересные, ещё непрочитанные произведения.

Обсуждение, отзывы о книге «Mastering VMware® Infrastructure3» и просто собственные мнения читателей. Оставьте ваши комментарии, напишите, что Вы думаете о произведении, его смысле или главных героях. Укажите что конкретно понравилось, а что нет, и почему Вы так считаете.