Chris McCain - Mastering VMware® Infrastructure3

Здесь есть возможность читать онлайн «Chris McCain - Mastering VMware® Infrastructure3» — ознакомительный отрывок электронной книги совершенно бесплатно, а после прочтения отрывка купить полную версию. В некоторых случаях можно слушать аудио, скачать через торрент в формате fb2 и присутствует краткое содержание. Город: Indianapolis, Год выпуска: 2008, ISBN: 2008, Издательство: WILEY Wiley Publishing, Inc., Жанр: Программы, ОС и Сети, на английском языке. Описание произведения, (предисловие) а так же отзывы посетителей доступны на портале библиотеки ЛибКат.

- Название:Mastering VMware® Infrastructure3

- Автор:

- Издательство:WILEY Wiley Publishing, Inc.

- Жанр:

- Год:2008

- Город:Indianapolis

- ISBN:978-0-470-18313-7

- Рейтинг книги:5 / 5. Голосов: 1

-

Избранное:Добавить в избранное

- Отзывы:

-

Ваша оценка:

- 100

- 1

- 2

- 3

- 4

- 5

Mastering VMware® Infrastructure3: краткое содержание, описание и аннотация

Предлагаем к чтению аннотацию, описание, краткое содержание или предисловие (зависит от того, что написал сам автор книги «Mastering VMware® Infrastructure3»). Если вы не нашли необходимую информацию о книге — напишите в комментариях, мы постараемся отыскать её.

Mastering VMware® Infrastructure3 — читать онлайн ознакомительный отрывок

Ниже представлен текст книги, разбитый по страницам. Система сохранения места последней прочитанной страницы, позволяет с удобством читать онлайн бесплатно книгу «Mastering VMware® Infrastructure3», без необходимости каждый раз заново искать на чём Вы остановились. Поставьте закладку, и сможете в любой момент перейти на страницу, на которой закончили чтение.

Интервал:

Закладка:

Although adding a new HBA to an ESX Server host requires you to shut down the server, presenting and finding new LUNs only requires that you initiate a rescan from the ESX Server host.

To identify new storage devices and/or new VMFS volumes that have been added since the last scan, click the Rescan link located in the Storage node of the Configuration tab. The host will launch an enumeration process beginning with the lowest possible LUN ID to the highest (1 to 255), which can be a slow process (unless LUN masking has been configured on the host as well as the storage processor).

You have probably seen by now, and hopefully agree, that VMware has done a great job of creating a graphical user interface (GUI) that is friendly, intuitive, and easy to use. Administrators also have the ability to manage LUNs from a Service Console command line on an ESX Server host.

The ability to scan for new storage is available in the VI Client using the Rescan link in the Storage Adapters node of the Configuration page, but it is also possible to rescan from a command line.

The root user account does not have secure shell (SSH) capability by default. You must set the Permit-RootLogin entry in the /etc/ssh/sshdconfig file to Yes to allow access. Alternatively, you can log on to the console as a different user and use the #su - option to elevate the logon permissions. Opting to use the #su - option still requires that you know the root user's password but does not expose the system to allowing remote root logon via SSH.

Use the following syntax to rescan vmhba1 from a Service Console command line:

1. Log on to a console session as a nonroot user.

2. Type su — and then click Enter.

3. Type the root user password and then click Enter.

4. Type esxcfg-rescan vmhba1at the # prompt.

When multiple vmhba devices are available to the ESX Server, repeat the command, replacing vmhba#with each device.

You can identify LUNs using the physical address (i.e., vmhba#:target#:lun:partition), but the Service Console references the LUNs using the device filename (i.e., sda, sdb, etc.). You can see the device filenames when installing an ESX Server that is connected to a SAN with accessible LUNs. By using an SSH tool (putty.exe) to establish a connection and then issuing the esxcfg commands, you can perform command-line LUN management.

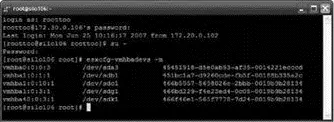

To display a list of available LUNs with their associated paths, device names, and UUIDs, perform the following steps:

1. Log on to a console session as a nonroot user.

2. Type su — and then click Enter.

3. Type the root user password and then click Enter.

4. Type esxcfg-vmhbadevs -mat the # prompt.

Figure 4.18 shows the resulting output for an ESX Server with an IP address of 172.30.0.106 and a nonroot user named roottoo .

Figure 4.18The esxcfg commands offer parameters and switches for managing and identifying LUNs available to an ESX Server host.

The UUIDs displayed in the output are unique identifiers used by the Service Console and VMkernel. These values are also reflected in the Virtual Infrastructure Client; however, we do not commonly refer to them because using the friendly names or even the physical paths is much easier.

Fibre channel storage has a strong performance history and will continue to progress in the areas of performance, manageability, reliability, and scalability. Unfortunately, the large financial investment required to implement a fibre channel solution has scared off many organizations looking to deploy a virtual infrastructure that offers all the VMotion, DRS, and HA bells that VI3 provides. Luckily for the IT community, VMware now offers lower-cost (and potentially lower-performance) options in iSCSI and NAS/NFS.

iSCSI Network Storage

As a response to the needs of not-so-deep-pocketed network administrators, Internet Small Computer Systems Interface (iSCSI) has become a strong alternative to fibre channel. The popularity of iSCSI storage, which offers both lower cost and increasing speeds, will continue to grow as it finds its place in virtualized networks.

iSCSI storage provides a block-level transfer of data using the SCSI communication protocol over a standard TCP/IP network. By using block-level transfer, as in a fibre channel solution, the storage device looks like a local device to the requesting host. With proper planning, an iSCSI SAN can perform nearly as well as a fibre channel SAN — or better. This depends on other factors, but we can dive into those in a moment. And before we make that dive into the configuration of iSCSI with ESX, let's first take a look at the components involved in an iSCSI SAN. Despite the fact that the goals and overall architecture of iSCSI are similar to fibre channel, when you dig into the configuration details, the communication architecture, and individual components of iSCSI, the differences are profound.

The components that make up an iSCSI SAN architecture, shown in Figure 4.19, include:

Hardware initiator A hardware device referred to as an iSCSI host bus adapter (HBA) that resides in an ESX Server host and initiates storage communication to the storage processor (SP) of the iSCSI storage device.

Software initiator A software-based storage driver initiator that does not require specific hardware and transmits over standard, supported Ethernet adapters.

Storage device The physical device that houses the disk subsystem upon which LUNs are built.

Logical unit number (LUN) A logical configuration of disk space created from one or more underlying physical disks. LUNs are most commonly created on multiple disks in a RAID configuration appropriate for the disk usage. LUN design considerations and methodologies will be covered later in this chapter.

Storage processor (SP) A communication device in the storage device that receives storage requests from storage area network nodes.

Challenge Handshake Authentication Protocol (CHAP)An authentication protocol used by the iSCSI initiator and target that involves validating a single set of credentials provided by any of the connecting ESX Server hosts.

Ethernet switchesStandard hardware devices used for managing the flow of traffic between ESX Server nodes and the storage device.

iSCSI qualified name (IQN) The full name of an iSCSI node in the format of iqn. - .com. domain:alias. For example, iqn.1998-08.com.vmware:silo1-1 represents the registration of vmware.com on the Internet in August (08) of 1998. Nodes on an iSCSI deployment will have default IQNs that can be changed. However, changing an IQN requires a reboot of the ESX Server host.

iSCSI is thus a cheaper shared storage solution than fibre channel. Of course, the reduced cost does come at the expense of the better performance that fibre channel offers. Ultimately, the question comes down to that difference in performance. The performance difference can, in large part, reflect the storage design and the disk intensity of the virtual machines stored on the iSCSI LUNs. Although this is true for fibre channel storage as well, it is less of a concern given the greater bandwidth available via a 4GB fibre channel architecture. In either case, it is the duty of the ESX Server administrator and the SAN administrator to regularly monitor the saturation level of the storage network.

Читать дальшеИнтервал:

Закладка:

Похожие книги на «Mastering VMware® Infrastructure3»

Представляем Вашему вниманию похожие книги на «Mastering VMware® Infrastructure3» списком для выбора. Мы отобрали схожую по названию и смыслу литературу в надежде предоставить читателям больше вариантов отыскать новые, интересные, ещё непрочитанные произведения.

Обсуждение, отзывы о книге «Mastering VMware® Infrastructure3» и просто собственные мнения читателей. Оставьте ваши комментарии, напишите, что Вы думаете о произведении, его смысле или главных героях. Укажите что конкретно понравилось, а что нет, и почему Вы так считаете.