Two main parameters vary when digital data is captured for image processing: the size of the silkworm eggs in the image and the uniformity of illumination across the image. First, image processing algorithms (including blob analysis) are designed to identify a particular egg size or range of egg sizes, so eggs outside this range cause errors in the final result. None of the earlier papers fixes the distance between the egg sheet and the camera, so the pixel size of the captured eggs varies, which causes problems for the image processing algorithm. Second, irregular illumination over the region of interest (ROI) causes digital cameras to record the data slightly differently, which may oversaturate or undersaturate the ROI. Image processing algorithms such as contrast stretching and histogram equalization perform well only in limited scenarios and cannot be relied upon to enhance low-quality data.
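For readers unfamiliar with the two enhancement methods named above, the following is a minimal pure-Python sketch of each, applied to a flattened 8-bit grayscale patch. The function names and the sample pixel values are illustrative assumptions and are not taken from the original experiments.

```python
def contrast_stretch(pixels, low=0, high=255):
    """Linearly map the observed intensity range onto [low, high]."""
    p_min, p_max = min(pixels), max(pixels)
    if p_max == p_min:  # flat patch: nothing to stretch
        return list(pixels)
    scale = (high - low) / (p_max - p_min)
    return [round(low + (p - p_min) * scale) for p in pixels]


def equalize_histogram(pixels, levels=256):
    """Map each intensity through the normalized cumulative histogram."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(pixels)
    if n == cdf_min:  # only one intensity level present
        return list(pixels)
    return [round((cdf[p] - cdf_min) / (n - cdf_min) * (levels - 1))
            for p in pixels]


# Hypothetical under-exposed patch (values invented for illustration).
dark_patch = [40, 42, 45, 50, 50, 60, 70, 80]
stretched = contrast_stretch(dark_patch)
equalized = equalize_histogram(dark_patch)
```

Both functions only redistribute the intensities already present in the patch. They cannot restore detail lost to saturation, which is one reason such methods are of limited help on poorly illuminated scans.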

To overcome these issues, a paper scanner, which provides a constant illumination source and a fixed distance between the camera and the egg sheet, is used to capture the digital data of the silkworm egg sheets. Since the distance between the camera array of the paper scanner and the egg sheet is fixed, the egg size can be expected to stay within a specific range, i.e., around 28 to 36 pixels in diameter in our experiment. However, not all scanner manufacturers follow the same dimensions in their designs, so the same silkworm eggs scanned with different scanners yield different sizes. For example, eggs scanned with a Canon® scanner have a diameter of 28 to 32 pixels, while those scanned with a Hewlett-Packard® (HP) scanner have a diameter of 36 to 40 pixels at the same resolution and dots per inch (dpi).
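The effect of this scanner dependence on a size-based filter can be illustrated with a small sketch. The two diameter ranges are the ones quoted above; the helper names and the list of blob diameters are hypothetical.

```python
# Diameter ranges reported in the text for the same scan settings (pixels).
CANON_RANGE = (28, 32)
HP_RANGE = (36, 40)


def within_range(diameter_px, size_range):
    """Return True if a blob diameter falls inside the accepted range."""
    lo, hi = size_range
    return lo <= diameter_px <= hi


def count_accepted(diameters_px, size_range):
    """Count blobs that a size filter tuned to size_range would keep."""
    return sum(within_range(d, size_range) for d in diameters_px)


# Hypothetical blob diameters measured on an HP scan (illustrative values).
hp_blobs = [36, 37, 38, 39, 40]
accepted_with_canon_filter = count_accepted(hp_blobs, CANON_RANGE)
accepted_with_hp_filter = count_accepted(hp_blobs, HP_RANGE)
```

A filter tuned on Canon scans rejects every blob in this HP sample, although all of them lie inside the HP range, so a count based on a fixed size range does not transfer between scanners without rescaling.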

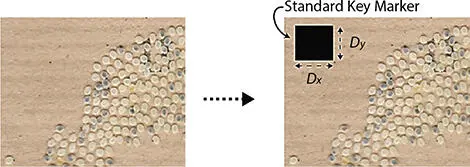

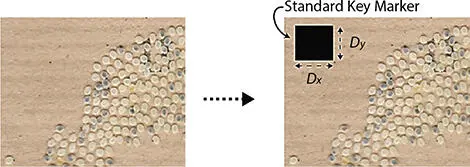

Figure 2.1 Adding a key marker on the silkworm egg sheet.

Also, changing scanner parameters such as resolution and dpi changes the egg diameter in pixels, even for the same scanner. Hence, a key marker is printed on the egg sheet before it is scanned. The dimension of the key marker is 100 × 100 pixels (10 × 10 mm), which is taken as the standard dimension in our experiment. Let R(h × w) be the standard resolution required by the image processing algorithm, R′(h′ × w′) the resolution at which the egg sheet is scanned, and R″ the resulting resolution of the image. Also, let (Dx, Dy) be the standard dimensions of the key marker, to be compared with the key marker dimensions calculated from the new scan. Figure 2.1 shows a part of the silkworm egg sheet before and after the key marker was stamped: Figure 2.1 (left) shows the original egg sheet, while Figure 2.1 (right) shows the egg sheet with the key marker stamped. Equations (2.1) to (2.3) describe the method of converting images scanned at any dpi into the standard dpi by comparing the dimensions (Dx, Dy) with the key marker dimensions calculated from the new scan; the resulting R″ values are used in image processing platforms such as MATLAB to resize the image sheet to match the standard required dimensions.

(2.1)

(2.2)

(2.3)
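The idea behind this conversion can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than a reproduction of Equations (2.1) to (2.3): it assumes that each axis is scaled by the ratio of the standard marker dimension to the measured one, with the image height paired with Dy and the width with Dx. The function name and the sample scan values are hypothetical.

```python
STANDARD_MARKER = (100, 100)  # (Dx, Dy) in pixels, as stated in the text


def rescaled_size(scan_size, measured_marker, standard_marker=STANDARD_MARKER):
    """Return the (height, width) that brings the marker to standard size.

    scan_size       -- (h', w') of the scanned image in pixels
    measured_marker -- marker (width, height) measured in the new scan
    Assumption: each axis is scaled independently by standard / measured.
    """
    h_scan, w_scan = scan_size
    dx_meas, dy_meas = measured_marker
    dx_std, dy_std = standard_marker
    if dx_meas <= 0 or dy_meas <= 0:
        raise ValueError("measured marker dimensions must be positive")
    scale_x = dx_std / dx_meas
    scale_y = dy_std / dy_meas
    return round(h_scan * scale_y), round(w_scan * scale_x)


# Hypothetical scan made at twice the standard dpi: the marker measures
# 200 x 200 pixels instead of 100 x 100.
new_size = rescaled_size((7000, 5000), (200, 200))
```

In this example the image is halved along both axes, so an egg that measured 64 pixels across in the scan would measure about 32 pixels after resizing. The returned size is the kind of value that would be passed to a resize routine.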

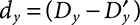

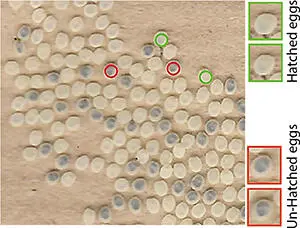

To overcome the problems with conventional image processing algorithms described in Section 2.2, machine learning (ML) techniques were employed to segment the eggs from the background (egg sheet). The features considered for segmentation, such as color (grayscale pixel values) and egg diameter, were collected manually using image processing and feature engineering and then fed into various ML algorithms (KNN, decision trees, and SVM). These ML algorithms provide accuracy over 90% but fail when the input data belongs to a different class (breed, in sericulture terms) than the one on which the algorithm was trained, since egg color differs between silkworm breeds. To overcome this issue, a supervised CNN technique is used, which requires the true labels while selecting the features automatically. The primary aim of our approach was to accurately count the silkworm eggs present in a given digital image and further classify them into respective classes such as hatched and unhatched. Figure 2.2 shows a sample digital image of the egg sheet in which eggs of each class have been manually marked with a specific color. Eggs marked in green represent the hatched class (HC), while eggs marked in red represent the unhatched class (UHC).

To identify the core features of the eggs, segment them from the background sheet, and classify them into their respective categories, a simple deep learning technique with four hidden layers was used, providing results that are much more accurate than conventional methods. The deep learning models are trained using the TensorFlow framework to provide three different results: foreground-background segmentation, egg detection, and classification of the detected eggs.

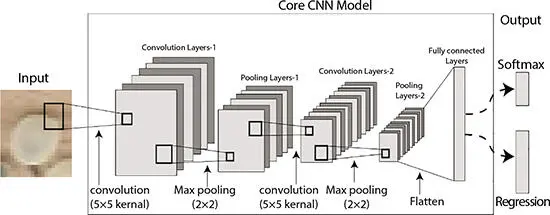

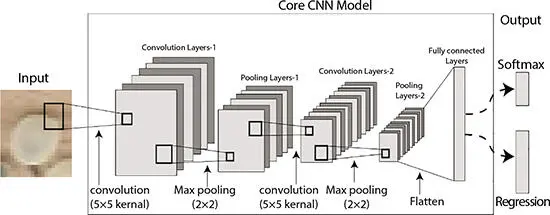

The core deep learning model used in our experiments is shown in Figure 2.3. The model consists of convolution layers, max-pooling layers, and fully connected layers. These layers are trained to identify the features of the eggs, while the last layer is modified to provide either categorical or continuous output. The core CNN model uses a stride of (2 × 2) and a kernel of (5 × 5) for convolution, (2 × 2) max pooling, and a fully connected layer of 3200 neurons. The input to the CNN model is a 32 × 32 image with three color channels (RGB).
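To make these hyperparameters concrete, the sketch below computes the spatial size of a feature map after one 5 × 5, stride-2 convolution followed by 2 × 2 max pooling on a 32 × 32 input. The text does not state the padding mode or the pooling stride, so both the "valid" and "same" conventions are shown and the pooling stride is assumed equal to the pooling window. The function names are illustrative.

```python
def conv_output_size(size, kernel, stride, padding="valid"):
    """Spatial output size of a convolution along one axis.

    "valid": no padding, floor((size - kernel) / stride) + 1
    "same":  zero padding so that the output is ceil(size / stride)
    """
    if padding == "same":
        return -(-size // stride)  # ceiling division
    if padding == "valid":
        return (size - kernel) // stride + 1
    raise ValueError("padding must be 'valid' or 'same'")


def pool_output_size(size, window):
    """Output size of non-overlapping pooling (stride assumed == window)."""
    return size // window


INPUT_SIZE, KERNEL, STRIDE, POOL = 32, 5, 2, 2  # values from the text

after_conv_valid = conv_output_size(INPUT_SIZE, KERNEL, STRIDE, "valid")
after_pool_valid = pool_output_size(after_conv_valid, POOL)

after_conv_same = conv_output_size(INPUT_SIZE, KERNEL, STRIDE, "same")
after_pool_same = pool_output_size(after_conv_same, POOL)
```

Under these assumptions a single convolution and pooling stage reduces the 32 × 32 input to 7 × 7 without padding or 8 × 8 with "same" padding. The number of filters per layer is not given in the text, so the sketch does not attempt to derive the 3200-neuron figure.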

Figure 2.2 Silkworm egg classes: hatched eggs and unhatched eggs.

Figure 2.3 Core CNN model.

2.3.2 Foreground-Background Segmentation

The basic requirement for accurate counting of silkworm eggs is foreground-background (FB) segmentation. In previous attempts, the background was segmented based on the intensity value of the eggs [8]. Any region with no pixel values corresponding to the eggs is considered background and discarded before the image processing stage. However, this is not ideal in all situations, since the sheet on which the eggs are laid may also contain urine from the silkworms, which discolors the background. The urinated background dries into a white layer whose pixel intensity resembles that of an egg, close to 230 in an 8-bit grayscale image.
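The weakness of intensity-based segmentation can be seen in a toy example. The threshold of 200, the 3 × 4 patch, and the helper name are assumptions made for illustration only; the value 230 for eggs is the one quoted above, and the stain value 228 is an invented neighbor of it.

```python
EGG_THRESHOLD = 200  # hypothetical cut-off, not taken from the cited work


def intensity_mask(image, threshold=EGG_THRESHOLD):
    """Mark a pixel as foreground (1) when it is at least as bright as
    the threshold, and as background (0) otherwise."""
    return [[1 if px >= threshold else 0 for px in row] for row in image]


# Hypothetical 8-bit grayscale patch:
#   230 = egg pixels, 228 = dried urine stain, 120 = clean sheet
patch = [
    [120, 230, 230, 120],
    [120, 230, 230, 120],
    [228, 228, 120, 120],
]

mask = intensity_mask(patch)
foreground_pixels = sum(map(sum, mask))
true_egg_pixels = sum(px == 230 for row in patch for px in row)
```

Here the mask contains six foreground pixels although only four belong to the egg, because the two stained background pixels pass the same threshold. No single intensity cut-off separates the stain from the egg in this patch, which motivates a segmentation method that relies on more than intensity.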
