The breakthrough came in the early 1980s, when Judea Pearl, a professor of computer science at the University of California, Los Angeles, invented a new representation: Bayesian networks. Pearl is one of the most distinguished computer scientists in the world, his methods having swept through machine learning, AI, and many other fields. He won the Turing Award, the Nobel Prize of computer science, in 2012.

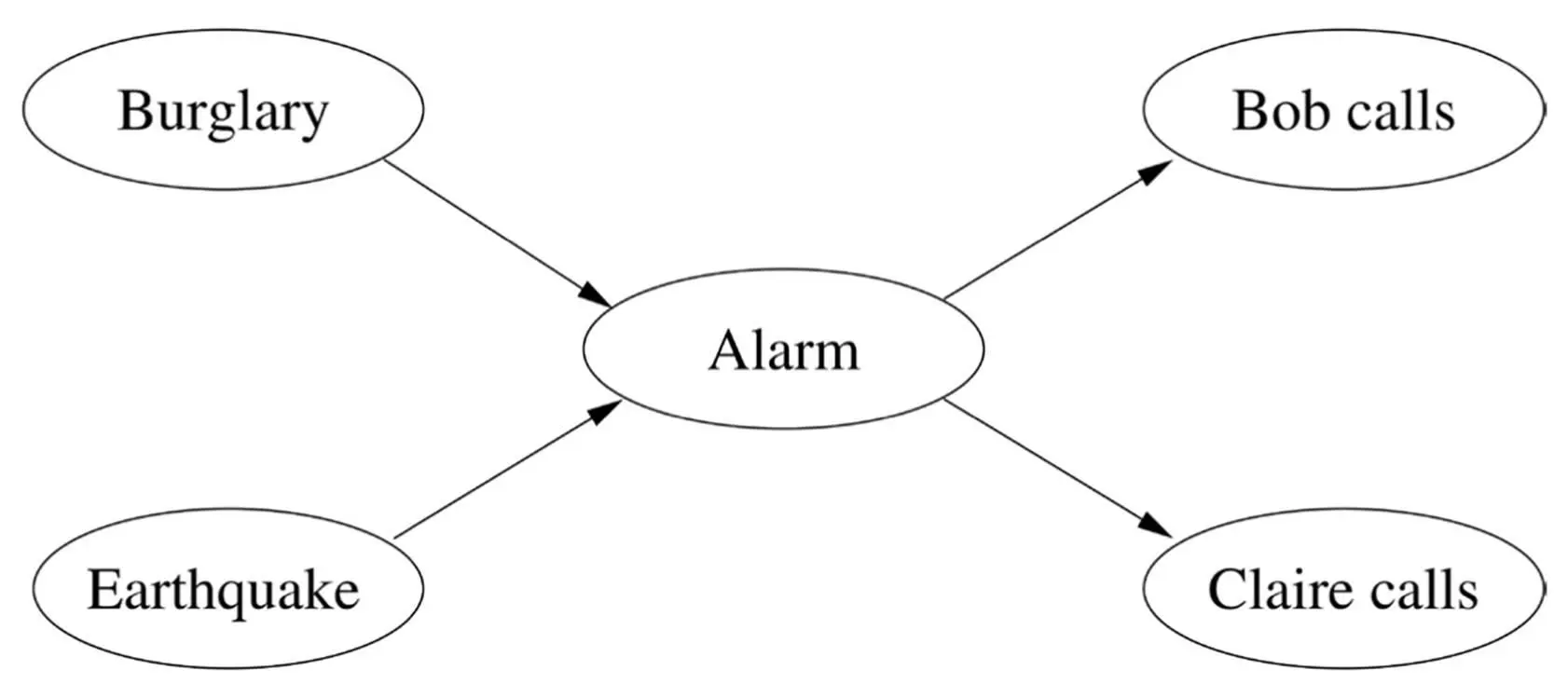

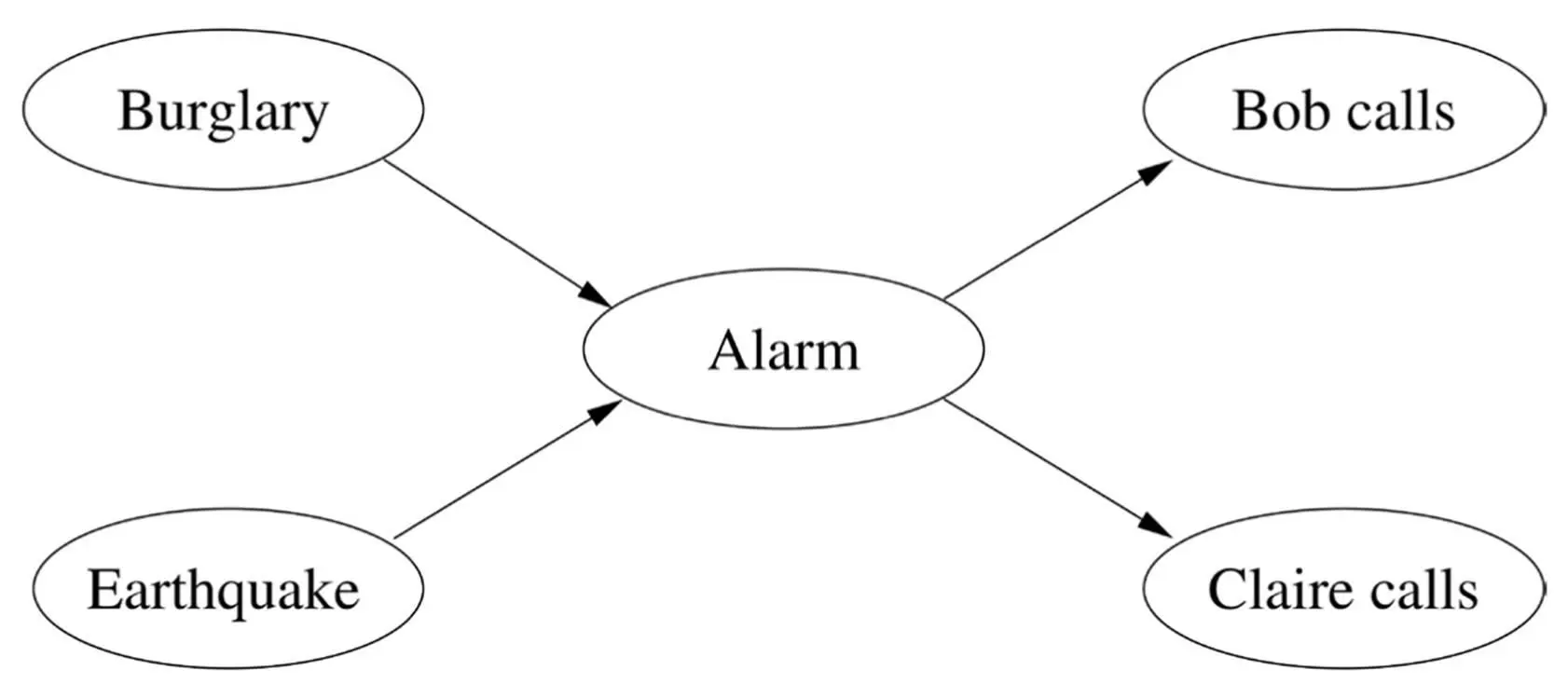

Pearl realized that it’s OK to have a complex network of dependencies among random variables, provided each variable depends directly on only a few others. We can represent these dependencies with a graph like the ones we saw for Markov chains and HMMs, except now the graph can have any structure (as long as the arrows don’t form closed loops). One of Pearl’s favorite examples is burglar alarms. The alarm at your house should go off if a burglar attempts to break in, but it could also be triggered by an earthquake. (In Los Angeles, where Pearl lives, earthquakes are almost as frequent as burglaries.) If you’re working late one night and your neighbor Bob calls to say he just heard your alarm go off, but your neighbor Claire doesn’t, should you call the police? Here’s the graph of dependencies:

If there’s an arrow from one node to another in the graph, we say that the first node is a parent of the second. So Alarm’s parents are Burglary and Earthquake, and Alarm is the sole parent of Bob calls and Claire calls. A Bayesian network is a graph of dependencies like this, together with a table for each variable, giving its probability for each combination of values of its parents. For Burglary and Earthquake we only need one probability each, since they have no parents. For Alarm we need four: the probability that it goes off even if there’s no burglary or earthquake, the probability that it goes off if there’s a burglary and no earthquake, and so on. For Bob calls we need two probabilities (given alarm and given no alarm), and similarly for Claire.

Here’s the crucial point: Bob calling depends on Burglary and Earthquake, but only through Alarm. Bob’s call is conditionally independent of Burglary and Earthquake given Alarm, and so is Claire’s. If the alarm doesn’t go off, your neighbors sleep soundly, and the burglar proceeds undisturbed. Also, Bob and Claire are independent given Alarm. Without this independence structure, you’d need to learn 2^5 = 32 probabilities, one for each possible state of the five variables. (Or 31, if you’re a stickler for details, since the last one can be left implicit.) With the conditional independencies, all you need is 1 + 1 + 4 + 2 + 2 = 10, a savings of 68 percent. And that’s just in this tiny example; with hundreds or thousands of variables, the savings would be very close to 100 percent.
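The arithmetic above can be checked with a few lines of Python. This is only a sketch: the `PARENTS` dictionary and both function names are made up for illustration, and every variable is assumed to be binary, as in the burglary example.

```python
# Parent lists for the burglar-alarm network described in the text.
# The dictionary layout and names are illustrative assumptions.
PARENTS = {
    "Burglary": [],
    "Earthquake": [],
    "Alarm": ["Burglary", "Earthquake"],
    "Bob calls": ["Alarm"],
    "Claire calls": ["Alarm"],
}


def full_table_size(num_vars: int) -> int:
    """Entries in a full joint table over binary variables."""
    return 2 ** num_vars


def network_size(parents: dict[str, list[str]]) -> int:
    """One probability per combination of parent values, per variable."""
    return sum(2 ** len(ps) for ps in parents.values())


full = full_table_size(len(PARENTS))   # 2^5
compact = network_size(PARENTS)        # 1 + 1 + 4 + 2 + 2
savings = 1 - compact / full           # fraction of entries we no longer need
print(full, compact, savings)
```

The same two functions show why the savings approach 100 percent in large networks: the full table doubles with every added variable, while the compact count grows only with the number of variables and the size of each parent set.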

The first law of ecology, according to biologist Barry Commoner, is that everything is connected to everything else. That may be true, but it would also make the world impossible to understand, if not for the saving grace of conditional independence: everything is connected, but only indirectly. In order to affect me, something that happens a mile away must first affect something in my neighborhood, even if only through the propagation of light. As one wag put it, space is the reason everything doesn’t happen to you. Put another way, the structure of space is an instance of conditional independence.

In the burglary example, the full table of thirty-two probabilities is never represented explicitly, but it’s implicit in the collection of smaller tables and graph structure. To obtain P(Burglary, Earthquake, Alarm, Bob calls, Claire calls), all I have to do is multiply P(Burglary), P(Earthquake), P(Alarm | Burglary, Earthquake), P(Bob calls | Alarm), and P(Claire calls | Alarm). It’s the same in any Bayesian network: to obtain the probability of a complete state, just multiply the probabilities from the corresponding lines in the individual variables’ tables. So, provided the conditional independencies hold, no information is lost by switching to the more compact representation. And in this way we can easily compute the probabilities of extremely unusual states, including states that were never observed before. Bayesian networks give the lie to the common misconception that machine learning can’t predict very rare events, or “black swans,” as Nassim Taleb calls them.
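Here is a minimal sketch of that multiplication in Python. The numbers in the tables are invented for illustration (the text gives none), and the function names are hypothetical. The last function goes one step beyond the paragraph above: it adds up complete states to answer the question of whether a burglary is likely when Bob calls and Claire doesn’t.

```python
from itertools import product

# Illustrative probabilities only; none of these numbers come from the text.
P_BURGLARY = 0.001
P_EARTHQUAKE = 0.002
P_ALARM = {  # keyed by (burglary, earthquake)
    (True, True): 0.95,
    (True, False): 0.94,
    (False, True): 0.29,
    (False, False): 0.001,
}
P_BOB_CALLS = {True: 0.90, False: 0.05}     # keyed by alarm
P_CLAIRE_CALLS = {True: 0.70, False: 0.01}  # keyed by alarm


def prob(p_true: float, value: bool) -> float:
    """Probability of `value` for a binary variable with P(True) = p_true."""
    return p_true if value else 1.0 - p_true


def joint(b: bool, e: bool, a: bool, bob: bool, claire: bool) -> float:
    """Probability of one complete state: the product of five table entries."""
    return (
        prob(P_BURGLARY, b)
        * prob(P_EARTHQUAKE, e)
        * prob(P_ALARM[(b, e)], a)
        * prob(P_BOB_CALLS[a], bob)
        * prob(P_CLAIRE_CALLS[a], claire)
    )


# The 32 implicit entries of the full table must sum to one.
total = sum(joint(*state) for state in product([True, False], repeat=5))


def posterior_burglary(bob: bool, claire: bool) -> float:
    """P(Burglary | calls), by summing joint() over the unobserved variables."""
    weight = {True: 0.0, False: 0.0}
    for b, e, a in product([True, False], repeat=3):
        weight[b] += joint(b, e, a, bob, claire)
    return weight[True] / (weight[True] + weight[False])


posterior = posterior_burglary(bob=True, claire=False)
print(total, posterior)
```

Summing over every state like this is only practical for tiny networks, since the number of states doubles with each variable; it is shown here because it follows directly from the product rule in the text.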

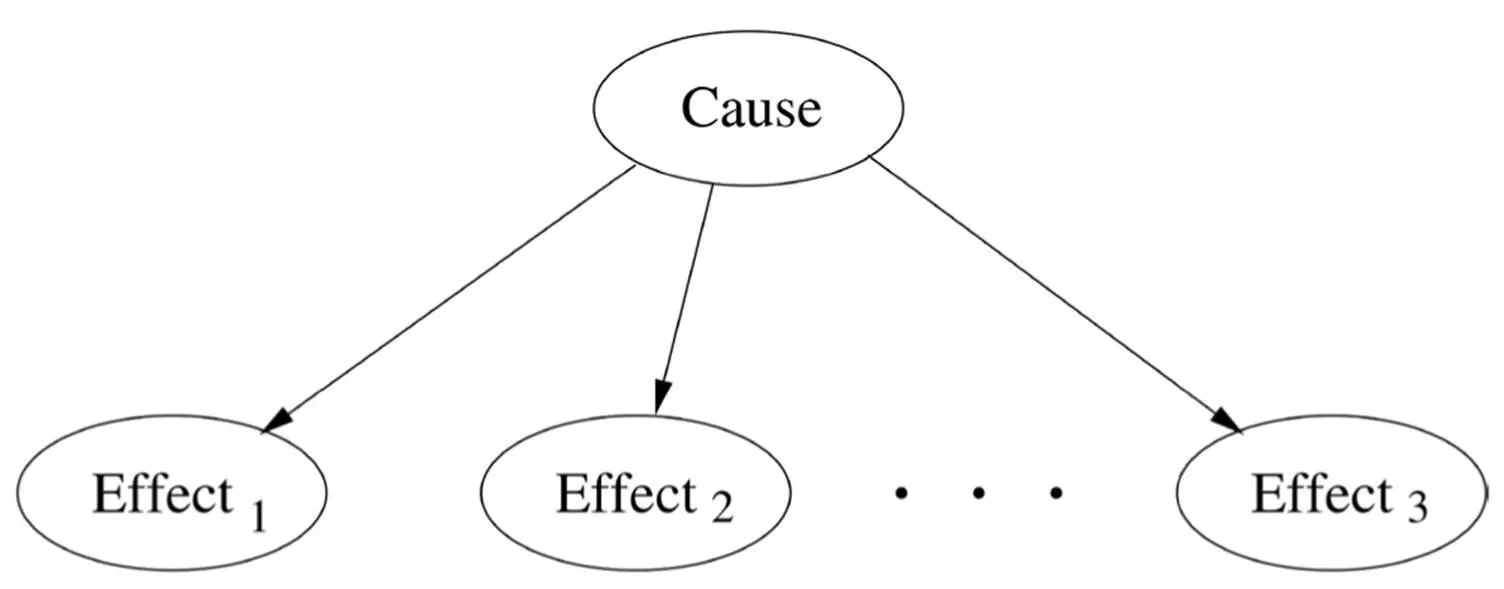

In retrospect, we can see that Naïve Bayes, Markov chains, and HMMs are all special cases of Bayesian networks. The structure of Naïve Bayes is:

Markov chains encode the assumption that the future is conditionally independent of the past given the present. HMMs assume in addition that each observation depends only on the corresponding state. Bayesian networks are for Bayesians what logic is for symbolists: a lingua franca that allows us to elegantly encode a dizzying variety of situations and devise algorithms that work uniformly in all of them.
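One way to see these special cases side by side is to write each model as a map from every variable to its parents. The sketch below does that in Python; the variable names (`Class`, `State0`, `Obs0`, and so on) and the function names are placeholders chosen for illustration.

```python
def naive_bayes(features: list[str]) -> dict[str, list[str]]:
    """The class is the only parent of every feature."""
    parents = {"Class": []}
    parents.update({f: ["Class"] for f in features})
    return parents


def markov_chain(length: int) -> dict[str, list[str]]:
    """Each state depends only on the state just before it."""
    return {
        f"State{t}": ([f"State{t - 1}"] if t > 0 else [])
        for t in range(length)
    }


def hmm(length: int) -> dict[str, list[str]]:
    """A Markov chain over hidden states, plus one observation per state."""
    parents = markov_chain(length)
    parents.update({f"Obs{t}": [f"State{t}"] for t in range(length)})
    return parents


print(naive_bayes(["word1", "word2"]))
print(markov_chain(3))
print(hmm(2))
```

In all three, no variable has more than one parent, which is why their tables stay small no matter how long the chain or how many features there are.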

We can think of a Bayesian network as a “generative model,” a recipe for probabilistically generating a state of the world: first decide independently whether there’s a burglary and/or an earthquake, then based on that decide whether the alarm goes off, and then based on that whether Bob and Claire call. A Bayesian network tells a story: A happened, and it led to B; at the same time, C also happened, and B and C together caused D. To compute the probability of a particular story, we just multiply the probabilities of all of its different strands.
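The recipe can be sketched as a short sampling routine in Python: draw the parentless variables first, then each remaining variable given the values already drawn for its parents. The probabilities below are invented for illustration, and `sample_world` is a hypothetical name.

```python
import random

# Illustrative probabilities only; none of these numbers come from the text.
P_BURGLARY = 0.001
P_EARTHQUAKE = 0.002
P_ALARM = {  # keyed by (burglary, earthquake)
    (True, True): 0.95,
    (True, False): 0.94,
    (False, True): 0.29,
    (False, False): 0.001,
}
P_BOB_CALLS = {True: 0.90, False: 0.05}     # keyed by alarm
P_CLAIRE_CALLS = {True: 0.70, False: 0.01}  # keyed by alarm


def sample_world(rng: random.Random) -> dict[str, bool]:
    """Generate one state of the world, parents before children."""
    burglary = rng.random() < P_BURGLARY
    earthquake = rng.random() < P_EARTHQUAKE
    alarm = rng.random() < P_ALARM[(burglary, earthquake)]
    bob = rng.random() < P_BOB_CALLS[alarm]
    claire = rng.random() < P_CLAIRE_CALLS[alarm]
    return {
        "Burglary": burglary,
        "Earthquake": earthquake,
        "Alarm": alarm,
        "Bob calls": bob,
        "Claire calls": claire,
    }


rng = random.Random(0)  # fixed seed so the run is repeatable
worlds = [sample_world(rng) for _ in range(10_000)]
alarm_rate = sum(w["Alarm"] for w in worlds) / len(worlds)
print(alarm_rate)
```

Because the arrows never form closed loops, there is always an order in which every variable’s parents are decided before the variable itself, which is what makes this one-pass recipe possible.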

One of the most exciting applications of Bayesian networks is modeling how genes regulate each other in living cells. Billions of dollars have been spent trying to discover pairwise correlations between individual genes and specific diseases, but the yield has been disappointingly low. In retrospect, this is not so surprising: a cell’s behavior is the result of complex interactions among genes and the environment, and a single gene has limited predictive power. But with Bayesian networks, we can uncover these interactions, provided we have the requisite data, and with the spread of DNA microarrays, we increasingly do.

After pioneering the application of machine learning to spam filtering, David Heckerman turned to using Bayesian networks in the fight against AIDS. The AIDS virus is a tough adversary because it mutates rapidly, making it difficult for any one vaccine or drug to pin it down for long. Heckerman noticed that this is the same cat-and-mouse game that spam filters play with spam and decided to apply a lesson he had learned there: attack the weakest link. In the case of spam, weak links include the URLs you have to use to take payment from the customer. In the case of HIV, they’re small regions of the virus protein that can’t change without hurting the virus. If he could train the immune system to recognize these regions and attack the cells displaying them, he just might have an AIDS vaccine. Heckerman and coworkers used a Bayesian network to help identify the vulnerable regions and developed a vaccine delivery mechanism that could teach the immune system to attack just those regions. The delivery mechanism worked in mice, and clinical trials are now in preparation.

It often happens that, even after we take all conditional independences into account, some nodes in a Bayesian network still have too many parents. Some networks are so dense with arrows that when we print them, the page turns solid black. (The physicist Mark Newman calls them “ridiculograms.”) A doctor needs to simultaneously diagnose all the possible diseases a patient could have, not just one, and every disease is a parent of many different symptoms. A fever could be caused by any number of conditions besides the flu, but it’s hopeless to try to predict its probability given every possible combination of conditions. All is not lost. Instead of a table specifying the node’s conditional probability for every state of its parents, we can learn a simpler distribution. The most popular choice is a probabilistic version of the logical OR operation: any cause alone can provoke a fever, but each cause has a certain probability of failing to do so, even if it’s usually sufficient. Heckerman and others have learned Bayesian networks that diagnose hundreds of infectious diseases in this way. Google uses a giant Bayesian network of this type in its AdSense system for automatically choosing ads to place on web pages. The network relates a million content variables to each other and to twelve million words and phrases via over three hundred million arrows, all learned from a hundred billion text snippets and search queries.
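The probabilistic OR described above is usually called a noisy-OR. Here is a minimal Python sketch of it, with invented numbers; the function name, the disease strengths, and the optional `leak` term (a chance of fever with no listed cause) are illustrative assumptions, not details of the systems mentioned in the text.

```python
def noisy_or(strengths: dict[str, float], present: set[str], leak: float = 0.0) -> float:
    """P(effect) when each present cause independently fails with 1 - strength.

    strengths: for each cause, the probability that it alone produces the effect.
    present:   the causes that are actually active.
    leak:      probability of the effect when no listed cause is active.
    """
    p_all_fail = 1.0 - leak
    for cause, strength in strengths.items():
        if cause in present:
            p_all_fail *= 1.0 - strength
    return 1.0 - p_all_fail


# Invented strengths: one number per cause instead of one per combination.
FEVER_CAUSES = {"flu": 0.8, "malaria": 0.9, "cold": 0.3}

print(noisy_or(FEVER_CAUSES, {"flu"}))
print(noisy_or(FEVER_CAUSES, {"flu", "malaria"}))
print(noisy_or(FEVER_CAUSES, set()))
```

With n possible causes, a full table for the fever node would need 2^n entries, while this version needs only n strengths (plus the leak), which is what makes nodes with very many parents manageable.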
