5.5.5 Message authentication code

Another official mode of operation of a block cipher is not used to encipher data, but to protect its integrity and authenticity. This is the message authentication code, or MAC. To compute a MAC on a message using a block cipher, we encrypt it using CBC mode and throw away all the output ciphertext blocks except the last one; this last block is the MAC. (The intermediate results are kept secret in order to prevent splicing attacks.)

This construction makes the MAC depend on all the plaintext blocks as well as on the key. It is secure provided the message length is fixed; Mihir Bellare, Joe Kilian and Philip Rogaway proved that any attack on a MAC under these circumstances would give an attack on the underlying block cipher [212].
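The construction can be sketched in a few lines of Python. This is an illustration only, and it rests on a loud assumption: the function `toy_encrypt_block` is a hypothetical stand-in that uses truncated HMAC-SHA256 as a keyed pseudorandom function, because the standard library has no block cipher. A real implementation would call AES here. The zero IV and the restriction to whole blocks are also simplifications of mine.

```python
import hashlib
import hmac

BLOCK = 16  # block size in bytes (128 bits, as for AES)

def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    """ASSUMPTION: stand-in for one block-cipher encryption.
    Truncated HMAC-SHA256 is a keyed PRF, not a real (invertible) cipher,
    but CBC-MAC only ever uses the encryption direction."""
    return hmac.new(key, block, hashlib.sha256).digest()[:BLOCK]

def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def cbc_mac(key: bytes, message: bytes) -> bytes:
    """CBC-MAC with a zero IV over a whole number of blocks."""
    if len(message) == 0 or len(message) % BLOCK != 0:
        raise ValueError("message must be a non-empty multiple of the block size")
    state = bytes(BLOCK)  # zero IV
    for i in range(0, len(message), BLOCK):
        # CBC chaining: XOR the previous output into the next plaintext block.
        state = toy_encrypt_block(key, xor(state, message[i:i + BLOCK]))
    return state  # only the last block is released as the MAC

key = b"k" * 16
tag = cbc_mac(key, b"A" * 32)
```

Because every block is chained into the next, changing any plaintext block or the key changes the final block, which is the dependence described above.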

If the message length is variable, you have to ensure that a MAC computed on one string can't be used as the IV for computing a MAC on a different string, so that an opponent can't cheat by getting a MAC on the composition of the two strings. In order to fix this problem, NIST has standardised CMAC, in which a variant of the key is xor-ed in before the last encryption [1407]. (CMAC is based on a proposal by Tetsu Iwata and Kaoru Kurosawa [967].) You may see legacy systems in which the MAC consists of only half of the last output block, with the other half thrown away, or used in other mechanisms.
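To see why variable-length messages are a problem for plain CBC-MAC, here is a sketch of the splicing forgery. As before, `toy_encrypt_block` is a hypothetical stand-in (truncated HMAC-SHA256) for a real block cipher, and the messages are made-up test values. The opponent sees valid MACs on two messages and produces a valid MAC on a third message without knowing the key.

```python
import hashlib
import hmac

BLOCK = 16

def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    """ASSUMPTION: truncated HMAC-SHA256 standing in for a block cipher."""
    return hmac.new(key, block, hashlib.sha256).digest()[:BLOCK]

def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def cbc_mac(key: bytes, message: bytes) -> bytes:
    """Plain CBC-MAC with a zero IV and no protection for variable lengths."""
    state = bytes(BLOCK)
    for i in range(0, len(message), BLOCK):
        state = toy_encrypt_block(key, xor(state, message[i:i + BLOCK]))
    return state

key = b"secret key bytes"  # known only to the legitimate parties

# The opponent obtains MACs on two messages of their choosing.
m1 = b"A" * 16 + b"B" * 16
m2 = b"C" * 16 + b"D" * 16
t1 = cbc_mac(key, m1)
t2 = cbc_mac(key, m2)

# Forgery: after processing m1 the chaining value is t1, so XORing t1 into
# the first block of m2 cancels it, and the rest of the computation is
# exactly the computation of the MAC on m2.
forged_message = m1 + xor(m2[:BLOCK], t1) + m2[BLOCK:]
forged_tag = t2  # produced without using the key
```

CMAC blocks this by masking the last block with a key-derived value before the final encryption, so the MAC of one string no longer equals a usable intermediate chaining value of a longer one.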

There are other possible constructions of MACs: the most common one is HMAC, which uses a hash function with a key; we'll describe it in section 5.6.2.

5.5.6 Galois counter mode

The above modes were all developed for DES in the 1970s and 1980s (although counter mode only became an official US government standard in 2002). They are not efficient for bulk encryption where you need to protect integrity as well as confidentiality; if you use either CBC mode or counter mode to encrypt your data and a CBC-MAC or CMAC to protect its integrity, then you invoke the block cipher twice for each block of data you process, and the operation cannot be parallelised.

The modern approach is to use a mode of operation designed for authenticated encryption. Galois Counter Mode (GCM) has taken over as the default since being approved by NIST in 2007 [1409]. It uses only one invocation of the block cipher per block of text, and it's parallelisable so you can get high throughput on fast data links with low cost and low latency. Encryption is performed in a variant of counter mode; the resulting ciphertexts are also used as coefficients of a polynomial which is evaluated at a key-dependent point over a Galois field of $2^{128}$ elements to give an authenticator tag. The tag computation is a universal hash function of the kind I described in section 5.2.4 and is provably secure so long as keys are never reused. The supplied key is used along with a random IV to generate both a unique message key and a unique authenticator key. The output is thus a ciphertext of the same length as the plaintext, plus an IV and a tag of typically 128 bits each.
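The polynomial evaluation at the heart of the tag can be sketched as follows. This is a simplified illustration, not GCM's GHASH: it uses the same field polynomial, $x^{128} + x^7 + x^2 + x + 1$, but a plain integer bit order rather than the reflected one the standard specifies, and it leaves out the length block and the final masking with an encrypted counter. The names `gf_mul` and `poly_hash` are mine.

```python
# Field polynomial x^128 + x^7 + x^2 + x + 1, as an integer bit mask.
R = (1 << 128) | 0x87

def gf_mul(a: int, b: int) -> int:
    """Multiply two elements of GF(2^128): carry-less multiply, reduced mod R."""
    result = 0
    while b:
        if b & 1:
            result ^= a          # addition in GF(2^n) is XOR
        b >>= 1
        a <<= 1                  # multiply a by x
        if a >> 128:
            a ^= R               # reduce when the degree reaches 128
    return result

def poly_hash(h: int, blocks: list[int]) -> int:
    """Evaluate c1*h^n + c2*h^(n-1) + ... + cn*h by Horner's rule,
    where the ciphertext blocks c1..cn are the coefficients and h is
    the key-dependent point."""
    acc = 0
    for c in blocks:
        acc = gf_mul(acc ^ c, h)
    return acc

h = 0x0123456789ABCDEF0123456789ABCDEF       # made-up evaluation point
blocks_a = [0x1111, 0x2222, 0x3333]           # made-up ciphertext blocks
blocks_b = [0x00FF, 0xFF00, 0x0F0F]
blocks_xor = [x ^ y for x, y in zip(blocks_a, blocks_b)]
```

The hash is linear in the blocks: `poly_hash(h, blocks_a) ^ poly_hash(h, blocks_b)` equals `poly_hash(h, blocks_xor)`. That linearity is what makes the computation cheap and parallelisable, and it is also why the evaluation point must stay secret and the raw hash must be masked before it is released as a tag.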

GCM also has an interesting incremental property: a new authenticator and ciphertext can be calculated with an amount of effort proportional to the number of bits that were changed. GCM was invented by David McGrew and John Viega of Cisco; their goal was to create an efficient authenticated encryption mode suitable for use in high-performance network hardware [1270]. It is the sensible default for authenticated encryption of bulk content. (There's an earlier composite mode, CCM, which you'll find used in Bluetooth 4.0 and later; this combines counter mode with CBC-MAC, so it costs about twice as much effort to compute, and cannot be parallelised or recomputed incrementally [1408].)

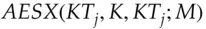

GCM and other authenticated encryption modes expand the plaintext by adding a message key and an authenticator tag. This is very inconvenient in applications such as hard disk encryption, where we prefer a mode of operation that preserves plaintext length. Disk encryption systems used to use CBC with the sector number providing an IV, but since Windows 10, Microsoft has been using a new mode of operation, XTS-AES, inspired by GCM and standardised in 2007. This is a codebook mode but with the plaintext whitened by a tweak key derived from the disk sector. Formally, the message $M_i$ encrypted with the key $K$ at block $j$ is

$$\mathrm{AESX}(KT_j, K, KT_j; M_i)$$

where the tweak key $KT_j$ is derived by encrypting the IV using a different key and then multiplying it repeatedly with a suitable constant so as to give a different whitener for each block. This means that if an attacker swaps two encrypted blocks, all 256 bits will decrypt to randomly wrong values. You still need higher-layer mechanisms to detect ciphertext manipulation, but simple checksums will be sufficient.
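The whitening idea can be sketched as below, under several loudly stated assumptions. The block cipher is a hypothetical toy: a four-round Feistel network over 128-bit blocks with HMAC-SHA256 as the round function, standing in for AES. The tweak is doubled in GF($2^{128}$) using a plain integer bit order, not the byte order of the real standard, and ciphertext stealing for partial blocks is left out. So this is not interoperable with XTS-AES; it only shows the structure.

```python
import hashlib
import hmac

HALF_MASK = (1 << 64) - 1

def _round(key: bytes, r: int, half: int) -> int:
    """Feistel round function: 64 bits in, 64 bits out (toy construction)."""
    data = bytes([r]) + half.to_bytes(8, "big")
    return int.from_bytes(hmac.new(key, data, hashlib.sha256).digest()[:8], "big")

def toy_encrypt(key: bytes, block: int) -> int:
    """ASSUMPTION: toy 128-bit Feistel cipher standing in for AES."""
    left, right = block >> 64, block & HALF_MASK
    for r in range(4):
        left, right = right, left ^ _round(key, r, right)
    return (left << 64) | right

def toy_decrypt(key: bytes, block: int) -> int:
    left, right = block >> 64, block & HALF_MASK
    for r in reversed(range(4)):
        left, right = right ^ _round(key, r, left), left
    return (left << 64) | right

def double(t: int) -> int:
    """Multiply the tweak by x in GF(2^128)."""
    t <<= 1
    if t >> 128:
        t ^= (1 << 128) | 0x87
    return t

def tweaks(tweak_key: bytes, sector: int, n: int) -> list[int]:
    """One whitener per block: encrypt the sector number, then keep doubling."""
    t, out = toy_encrypt(tweak_key, sector), []
    for _ in range(n):
        out.append(t)
        t = double(t)
    return out

def sector_encrypt(data_key, tweak_key, sector, blocks):
    ts = tweaks(tweak_key, sector, len(blocks))
    return [toy_encrypt(data_key, p ^ t) ^ t for p, t in zip(blocks, ts)]

def sector_decrypt(data_key, tweak_key, sector, blocks):
    ts = tweaks(tweak_key, sector, len(blocks))
    return [toy_decrypt(data_key, c ^ t) ^ t for c, t in zip(blocks, ts)]

k1, k2 = b"data key 16 byte", b"tweak key 16 byt"
plain = [0xAAAA, 0xBBBB, 0xCCCC]
cipher = sector_encrypt(k1, k2, 7, plain)
swapped = [cipher[1], cipher[0], cipher[2]]      # attacker swaps two blocks
garbled = sector_decrypt(k1, k2, 7, swapped)
```

Decrypting `cipher` recovers `plain`, while in `garbled` the two swapped positions come out as unrelated values and only the untouched third block survives. The output is the same length as the input, which is the property disk encryption needs.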

In section 5.4.3.1 I showed how the Luby-Rackoff theorem enables us to construct a block cipher from a hash function. It's also possible to construct a hash function from a block cipher⁵. The trick is to feed the message blocks one at a time to the key input of our block cipher, and use it to update a hash value (which starts off at say $H_0 = 0$). In order to make this operation non-invertible, we add feedforward: the $(i-1)$st hash value is exclusive or'ed with the output of round $i$. This Davies-Meyer construction gives our final mode of operation of a block cipher (Figure 5.16).
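In symbols, the update is $H_i = E_{M_i}(H_{i-1}) \oplus H_{i-1}$ with $H_0 = 0$. A minimal sketch follows, again assuming a hypothetical toy cipher (a four-round Feistel network built from HMAC-SHA256) in place of a real one such as AES. The sketch accepts only whole 16-byte blocks and does no padding or length encoding, which a practical hash function would need.

```python
import hashlib
import hmac

BLOCK = 16
HALF_MASK = (1 << 64) - 1

def _round(key: bytes, r: int, half: int) -> int:
    data = bytes([r]) + half.to_bytes(8, "big")
    return int.from_bytes(hmac.new(key, data, hashlib.sha256).digest()[:8], "big")

def toy_encrypt(key: bytes, block: int) -> int:
    """ASSUMPTION: toy 128-bit Feistel cipher standing in for a real one."""
    left, right = block >> 64, block & HALF_MASK
    for r in range(4):
        left, right = right, left ^ _round(key, r, right)
    return (left << 64) | right

def davies_meyer(message: bytes) -> int:
    """Hash a message whose length is a multiple of BLOCK bytes."""
    if len(message) == 0 or len(message) % BLOCK != 0:
        raise ValueError("message must be a non-empty multiple of the block size")
    h = 0  # H_0 = 0
    for i in range(0, len(message), BLOCK):
        m_i = message[i:i + BLOCK]
        # The message block is the cipher KEY; the old hash value is the
        # plaintext, and it is fed forward by XOR into the output.
        h = toy_encrypt(m_i, h) ^ h
    return h

digest = davies_meyer(b"A" * 16 + b"B" * 16)
```

Without the feedforward, anyone who knows the message block could run the cipher backwards from $H_i$ to recover $H_{i-1}$; the XOR removes that shortcut.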

The birthday theorem makes another appearance here, in that if a hash function $h$ is built using an $n$-bit block cipher, it is possible to find two messages $M_1 \neq M_2$ with $h(M_1) = h(M_2)$ with about $2^{n/2}$ effort (hash slightly more than that many messages $M_i$ and look for a match). So a 64-bit block cipher is not adequate, as forging a message would cost of the order of $2^{32}$ messages, which is just too easy. A 128-bit cipher such as AES used to be just about adequate, and in fact the AACS content protection mechanism in Blu-ray DVDs used ‘AES-H’, the hash function derived from AES in this way.
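The birthday effect is easy to observe on a small scale. The sketch below is an assumption-laden stand-in: it truncates SHA-256 to $n = 32$ bits rather than building a hash from a 32-bit block cipher, and it hashes a simple counter. A collision should turn up after something of the order of $2^{16}$ trials, far fewer than the $2^{32}$ possible outputs.

```python
import hashlib

def truncated_hash(message: bytes, n_bits: int) -> int:
    """ASSUMPTION: the top n_bits of SHA-256 stand in for an n-bit hash."""
    digest = hashlib.sha256(message).digest()
    return int.from_bytes(digest, "big") >> (256 - n_bits)

def find_collision(n_bits: int):
    """Hash distinct messages until two of them share a hash value.
    Returns the two colliding messages and the number of hashes computed."""
    seen = {}        # hash value -> message that produced it
    counter = 0
    while True:
        message = str(counter).encode()
        h = truncated_hash(message, n_bits)
        if h in seen:
            return seen[h], message, counter + 1
        seen[h] = message
        counter += 1

m1, m2, trials = find_collision(32)
```

Each extra bit of output only multiplies the attacker's work by about $\sqrt{2}$, which is why the hash output has to be twice as long as the security level you want.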
