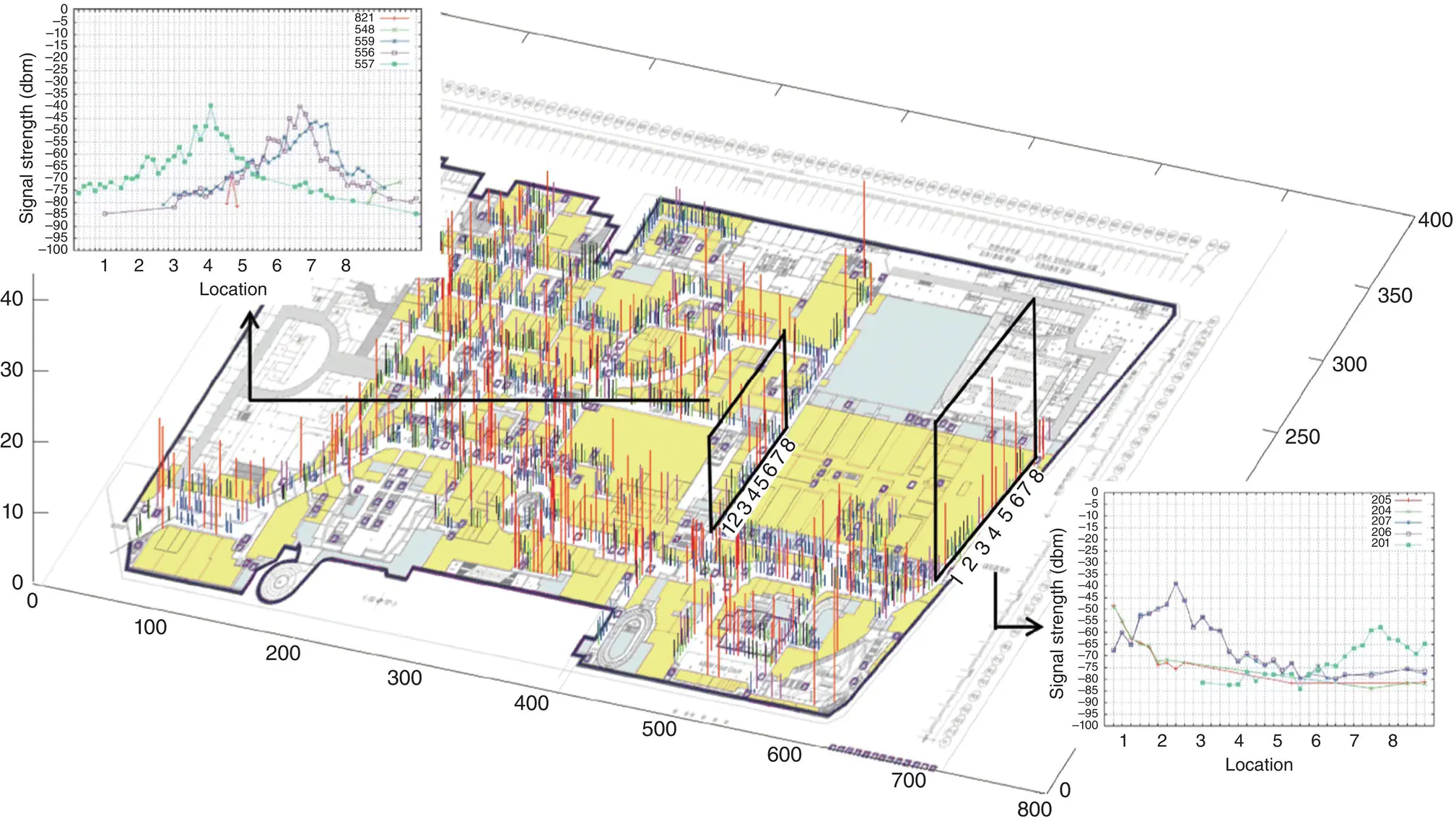

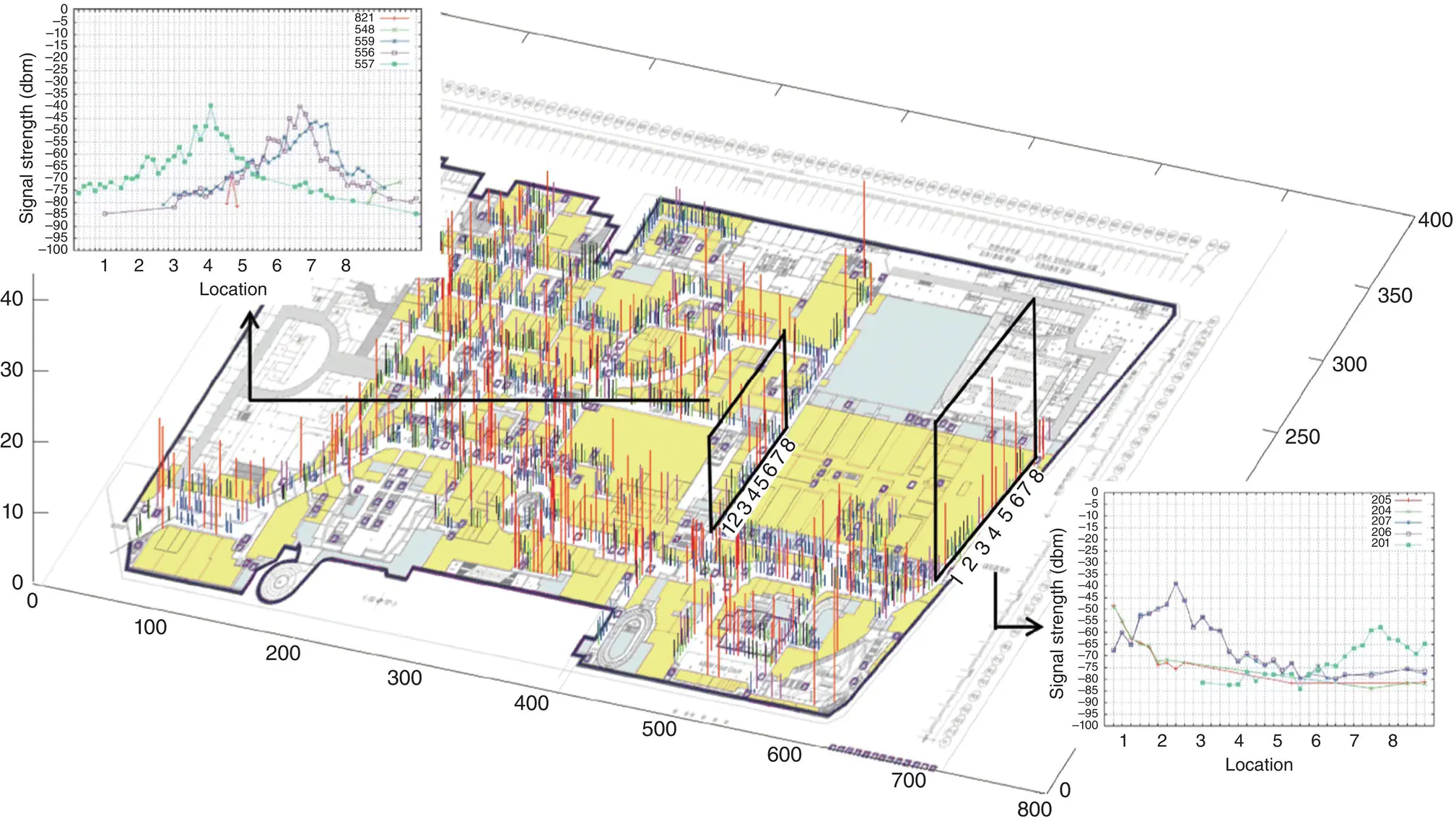

Figure 37.5 Measured accuracy and Wi‐Fi signal distributions excerpted for an indoor location. Different locations are often covered by different Wi‐Fi access points (APs) and/or have different signal strength characteristics for the same Wi‐Fi AP (as can be seen from the two plots in the figure), allowing for unique fingerprinting. Black, blue, violet, and red bars on the map represent 3‐, 6‐, 9‐, and more than 12 m error distances, respectively, when using Wi‐Fi RSSI fingerprinting for localization [64].

Source: Reproduced with permission of IEEE.
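The figure shows why RSSI vectors can act as location fingerprints. As an illustration only, a common way to match a live scan against a radio map is k‐nearest‐neighbors in signal space. The sketch below assumes a tiny hypothetical radio map (made‐up RSSI values, not data from [64]), a hypothetical `MISSING_DBM` floor for APs not heard at a point, and Euclidean distance; the helper names `rssi_distance` and `knn_locate` are illustrative.

```python
import math

# Hypothetical radio map: reference position (x, y) in meters -> mean RSSI (dBm) per AP.
# Values are illustrative, not measured data.
RADIO_MAP = {
    (0.0, 0.0): {"ap1": -40, "ap2": -70, "ap3": -80},
    (5.0, 0.0): {"ap1": -55, "ap2": -60, "ap3": -75},
    (0.0, 5.0): {"ap1": -60, "ap2": -75, "ap3": -55},
    (5.0, 5.0): {"ap1": -70, "ap2": -58, "ap3": -62},
}

MISSING_DBM = -100.0  # assumed value for an AP that is not heard at a location


def rssi_distance(a, b):
    """Euclidean distance in signal space over the union of APs seen in a and b."""
    aps = set(a) | set(b)
    return math.sqrt(sum((a.get(ap, MISSING_DBM) - b.get(ap, MISSING_DBM)) ** 2
                         for ap in aps))


def knn_locate(observation, radio_map, k=2):
    """Average the positions of the k reference points closest in signal space."""
    ranked = sorted(radio_map, key=lambda pos: rssi_distance(observation, radio_map[pos]))
    nearest = ranked[:k]
    x = sum(p[0] for p in nearest) / len(nearest)
    y = sum(p[1] for p in nearest) / len(nearest)
    return x, y


scan = {"ap1": -42, "ap2": -68, "ap3": -79}  # a live scan near (0, 0)
print(knn_locate(scan, RADIO_MAP, k=1))
print(knn_locate(scan, RADIO_MAP, k=2))
```

Larger `k` smooths out noisy scans at the cost of pulling the estimate toward neighboring reference points; real systems also weight neighbors by their signal‐space distance.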

Although empirical RSSI‐based localization schemes are very popular, a disadvantage of these methods, as mentioned earlier, is that they require labor‐intensive surveying of the environment to generate radio maps. Crowdsourcing, which utilizes data from multiple users carrying smartphones, is one possible way to simplify radio map generation [80]. Principles from Simultaneous Localization and Mapping (SLAM), originally proposed for robot navigation in a priori unknown environments, can also help to quickly build maps of indoor locales [81]. Another challenge is that RSSI readings are susceptible to wireless multipath interference as well as to shadowing or occlusion by walls, windows, or even the human body; thus, in dynamic environments such as a shopping mall with moving crowds, the performance of fingerprinting can degrade dramatically. To overcome multipath interference, a recent effort [82] proposes using the energy of the direct path (EDP) and ignoring the multipath reflections between the mobile client and APs. EDP can improve performance over RSSI because RSSI also includes the energy carried by multipath reflections, which travel longer distances than the direct path between the client and the APs.
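The difference between RSSI and EDP can be made concrete with a toy channel. The sketch below is not the method of [82]; it assumes the channel has already been resolved into discrete taps (delay, power), that the earliest tap above an assumed noise floor is the direct path, and that distance follows a log‐distance path‐loss model with hypothetical parameters (`p0_dbm = -40` dBm at 1 m, exponent `n = 2`). Because RSSI sums the reflected energy as well, inverting the same model on RSSI underestimates the distance.

```python
import math


def dbm_to_mw(p_dbm):
    return 10 ** (p_dbm / 10)


def mw_to_dbm(p_mw):
    return 10 * math.log10(p_mw)


def distance_from_power(p_dbm, p0_dbm=-40.0, n=2.0, d0=1.0):
    """Invert an assumed log-distance model: P = P0 - 10 n log10(d / d0)."""
    return d0 * 10 ** ((p0_dbm - p_dbm) / (10 * n))


def rssi_dbm(taps):
    """RSSI-style measurement: total received power summed over all paths."""
    return mw_to_dbm(sum(dbm_to_mw(p) for _, p in taps))


def edp_dbm(taps, noise_floor_dbm=-90.0):
    """EDP-style measurement: power of the earliest tap above the noise floor."""
    detectable = [(t, p) for t, p in taps if p > noise_floor_dbm]
    _, p_direct = min(detectable, key=lambda tap: tap[0])
    return p_direct


# Illustrative channel as (delay in ns, power in dBm). Under the assumed model the
# direct path at 10 m is -60 dBm; two weaker reflections arrive later.
taps = [(33.4, -60.0), (55.0, -63.0), (80.0, -63.0)]
print(f"RSSI-based distance: {distance_from_power(rssi_dbm(taps)):.1f} m")
print(f"EDP-based distance:  {distance_from_power(edp_dbm(taps)):.1f} m")
```

Here the two reflections roughly double the received energy, so the RSSI‐based estimate comes out near 7 m, while the EDP‐based estimate recovers the assumed 10 m.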

Several approaches have proposed computer‐vision‐based fingerprinting [83–86]. These techniques require the mobile subject either to carry a camera or to use a camera embedded in a handheld device, such as a smartphone. As the subject moves around an indoor environment, the camera captures images (visual fingerprints) of the environment, and the system then determines the subject’s position and orientation by matching these images against a database of images with known locations. One challenge with such an approach is the large storage capacity required for the images of an indoor environment. In addition, image matching may require significant computing power, which can be challenging to provide on mobile devices, and requiring subjects to carry supporting computing equipment [85] may impede their mobility. In [87, 88], it is assumed that the illumination intensity and ambient color vary from room to room and can thus be used as fingerprints for room‐level localization. Light matching [89] utilizes the position, orientation, and shape information of various indoor luminaires and models the illumination intensity using an inverse‐square law. To distinguish different luminaires, the work relies on asymmetries/irregularities in luminaire placement. Absolute and relative intensity measurements for localization have been proposed in [90] and [91], respectively; in both cases, knowledge of the receiver orientation is assumed to be available to solve for a position. These techniques use received intensity measurements from multiple transmitters, together with a suitable channel model, to extract position information. Other approaches exploit the directionality of free‐space optics, where angular information is encoded by discrete emitters (e.g. modulated LED‐based beacons) [92–94]. The Xbox Kinect [95] continuously projects infrared (IR) structured light and captures it with an infrared camera to fingerprint the scene and recover 3D scene information.
The 3D structure can be computed from the distortion of a pseudorandom pattern of structured IR light dots. Multiple people can be tracked simultaneously at distances of up to 3.5 m at a frame rate of 30 Hz. An accuracy of 1 cm at 2 m distance has been reported.
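To illustrate intensity‐based positioning with an inverse‐square law, the sketch below estimates a receiver's floor position from the intensities it measures from several ceiling luminaires. It is a deliberate simplification, not the model of [89]–[91]: it assumes hypothetical luminaire positions and powers, a pure I = P/d² model with no angular (orientation) or reflection terms, a known receiver height, and a brute‐force grid search (an illustrative choice) to minimize the squared intensity error.

```python
import itertools

# Hypothetical luminaires: ((x, y, z) position in meters, relative source power).
LUMINAIRES = [((0.0, 0.0, 3.0), 1.0), ((4.0, 0.0, 3.0), 1.0), ((0.0, 4.0, 3.0), 1.0)]


def predicted_intensity(receiver, luminaire):
    """Inverse-square model I = P / d^2 (no angular or reflection terms)."""
    (lx, ly, lz), power = luminaire
    rx, ry, rz = receiver
    d2 = (rx - lx) ** 2 + (ry - ly) ** 2 + (rz - lz) ** 2
    return power / d2


def locate(measured, luminaires, size=4.0, step=0.1, height=0.0):
    """Grid search for the (x, y) whose predicted intensities best fit the measurements."""
    n = int(round(size / step)) + 1
    best, best_err = None, float("inf")
    for i, j in itertools.product(range(n), repeat=2):
        candidate = (i * step, j * step, height)
        err = sum((predicted_intensity(candidate, lum) - m) ** 2
                  for lum, m in zip(luminaires, measured))
        if err < best_err:
            best, best_err = candidate[:2], err
    return best


# Simulate noise-free measurements at a known position, then recover it.
true_position = (1.0, 1.0, 0.0)
measured = [predicted_intensity(true_position, lum) for lum in LUMINAIRES]
print(locate(measured, LUMINAIRES))
```

With three non‐collinear luminaires and a known receiver height, the position is uniquely determined in this idealized model; measurement noise and an unknown receiver tilt are exactly what the orientation assumptions in [90, 91] are meant to handle.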

Some approaches utilize dedicated coded markers or tags in the environment for visual fingerprinting to help with localization [96–100]. Such approaches can overcome the limitations of traditional vision‐based localization systems that rely entirely on natural image features, which often lack robustness, for example, under varying illumination. Common types of markers include concentric rings, barcodes, and patterns consisting of colored dots. Such markers greatly simplify the automatic detection of corresponding points, allow the system scale to be determined, and enable different objects to be distinguished by assigning a unique code to each type of object. An optical navigation system for forklift trucks in warehouses was proposed in [96]. Coded reference markers were deployed on ceilings along various routes. An optical sensor on the roof of each forklift took images that were forwarded to a centralized server for processing. Another low‐cost indoor localization system, proposed in [97], made use of phone cameras and bar‐coded markers placed on walls, posters, and other objects in the environment. Once an image of a marker was captured, the pose of the device could be determined with an accuracy of “a few centimeters.” Additional location‐based information (e.g. about the next meeting in the room) could also be displayed.
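Two of the marker properties mentioned above, a unique code that identifies a known location and a known physical size that fixes the system scale, can be sketched with a pinhole‐camera model. The example assumes a hypothetical marker database and that the marker has already been detected and decoded (detection and decoding are not shown); it estimates only the range Z = f·W/w, whereas recovering the full device pose as in [97] would additionally require solving a perspective‐n‐point problem on the marker corners.

```python
from dataclasses import dataclass


@dataclass
class Marker:
    marker_id: int
    position: tuple  # (x, y, z) in building coordinates, meters
    width_m: float   # physical edge length of the printed marker


# Hypothetical marker database, filled in when the markers are deployed.
MARKER_DB = {
    17: Marker(17, (12.0, 3.5, 1.6), 0.20),
    42: Marker(42, (30.0, 8.0, 1.6), 0.20),
}


def range_to_marker(marker_id, apparent_width_px, focal_length_px):
    """Look up a decoded marker and estimate its range with Z = f * W / w."""
    marker = MARKER_DB[marker_id]
    distance_m = focal_length_px * marker.width_m / apparent_width_px
    return marker.position, distance_m


# A decoded marker 17 appears 80 px wide to a camera with an assumed 800 px focal length.
position, distance = range_to_marker(17, apparent_width_px=80.0, focal_length_px=800.0)
print(position, distance)
```

Without the known marker width, a single camera image could only give bearings, not metric distances; this is the sense in which the markers "allow the system scale to be determined."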

An alternative to physically deploying markers is to project reference points or patterns onto the environment. In contrast to systems relying only on natural image features, the detection of projected patterns is facilitated by the distinct color, shape, and brightness of the projected features. For example, in [101], the TrackSense system was proposed, consisting of a projector and a simple webcam. A grid pattern is projected onto plain walls in the camera’s field of view. Using an edge detection algorithm, the lines and intersection points are determined. By the principle of triangulation (analogous to stereo vision), the distance and orientation of each point relative to the camera are computed. With a sufficient number of points, TrackSense is able to determine the camera’s orientation relative to fixed large planes, such as walls and ceilings.
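The triangulation step can be sketched by treating the projector and camera as a rectified stereo pair, so that the depth of a detected grid intersection follows from its disparity as Z = f·b/d. The sketch below assumes hypothetical calibration values (focal length, baseline, principal point) and that grid intersections have already been detected and matched to their projected positions; it then estimates the wall's tilt from three triangulated points via a cross‐product plane normal. It illustrates the principle and is not TrackSense's actual implementation.

```python
import math


def triangulate(u, v, disparity_px, f_px, baseline_m, cx, cy):
    """Back-project pixel (u, v) using depth from disparity: Z = f * b / d."""
    z = f_px * baseline_m / disparity_px
    return ((u - cx) * z / f_px, (v - cy) * z / f_px, z)


def plane_tilt_deg(p1, p2, p3):
    """Tilt of the plane through three 3D points relative to the image plane."""
    a = [p2[i] - p1[i] for i in range(3)]
    b = [p3[i] - p1[i] for i in range(3)]
    # Plane normal via the cross product a x b.
    n = (a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0])
    norm = math.sqrt(sum(c * c for c in n))
    # Angle between the normal and the optical (z) axis; min() guards against rounding.
    return math.degrees(math.acos(min(1.0, abs(n[2]) / norm)))


# Assumed calibration: 600 px focal length, 10 cm projector-camera baseline,
# principal point (320, 240). Three detected grid intersections on one wall:
cam = dict(f_px=600.0, baseline_m=0.1, cx=320.0, cy=240.0)
p1 = triangulate(320, 240, 30.0, **cam)
p2 = triangulate(420, 240, 20.0, **cam)  # smaller disparity: this point is farther away
p3 = triangulate(320, 340, 30.0, **cam)
print(p1, p2, p3)
print(f"wall tilt: {plane_tilt_deg(p1, p2, p3):.1f} deg")
```

In practice many grid points would be fitted with least squares rather than using exactly three, which is why a "sufficient number of points" makes the orientation estimate robust.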

Techniques that are based on the presence of the mobile subject in the vicinity of a sensor (with a finite range and analysis capabilities) are referred to as proximity‐based localization approaches. The proximity of the mobile subject can be detected via physical contact or by monitoring a physical quantity in the vicinity of the sensor, such as a magnetic field. When a mobile subject is detected by a single sensor, it is considered to be collocated with it. Several proximity‐based localization techniques have been implemented, involving IR, RFID, and cell identification (Cell‐ID).
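The collocation rule described above amounts to a lookup from the detecting sensor to its fixed location. The sketch assumes a hypothetical sensor deployment table and, when several sensors detect the subject at once, picks the one reporting the strongest signal; that tie‐breaking rule is an illustrative assumption, not part of the definition.

```python
# Hypothetical deployment: sensor id -> fixed location of that sensor.
SENSOR_LOCATIONS = {
    "ir-101": "Room 101",
    "ir-102": "Room 102",
    "rfid-gate-3": "Lobby",
}


def proximity_locate(detections):
    """Return the location of the detecting sensor (collocation assumption).

    detections: list of (sensor_id, signal_strength) pairs for one mobile subject.
    If several sensors detect the subject, this sketch picks the strongest one.
    """
    if not detections:
        return None  # no sensor in range: location unknown
    sensor_id, _ = max(detections, key=lambda d: d[1])
    return SENSOR_LOCATIONS[sensor_id]


print(proximity_locate([("ir-102", -50)]))
print(proximity_locate([("ir-101", -70), ("rfid-gate-3", -45)]))
```

The resolution of such a system is bounded by sensor density and range: the subject can only be placed "somewhere within range of" the chosen sensor.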

One of the first IR‐based proximity indoor positioning systems was the Active Badge system [102], designed at AT&T Cambridge in the 1990s. The system located people in a building by estimating the locations of the active badges they wore. Each active badge transmitted a globally unique IR signal every 15 s (with a battery life of 6 months to a year). One or more fixed sensors in each room detected the IR signals sent by the badges. From these measurements, the system could track employees, report their locations (room numbers), and identify the nearest telephone to reach them. The accuracy of the system was determined by the operating range of the IR sender, which was 6 m. The wearable IR emitters were small, lightweight, and easy to carry; however, the network of fixed sensors deployed across the building was costly. Moreover, the 15 s update interval is too long for real‐time localization (navigation).
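The badge‐and‐sensor architecture can be sketched as a central table holding the most recent sighting of each badge, together with a quick check of why a 15 s beacon interval is too coarse for navigation. The `LocationServer` class and its methods are hypothetical names, not the original Active Badge software, and the walking speed of about 1.4 m/s used below is an assumed typical value.

```python
from dataclasses import dataclass, field

BEACON_INTERVAL_S = 15.0  # from the text: each badge transmits every 15 s


@dataclass
class LocationServer:
    """Hypothetical central server keeping the latest sighting of each badge."""
    last_seen: dict = field(default_factory=dict)  # badge_id -> (room, timestamp_s)

    def report(self, badge_id, room, timestamp_s):
        # Called when a fixed room sensor decodes a badge's unique IR ID.
        prev = self.last_seen.get(badge_id)
        if prev is None or timestamp_s >= prev[1]:
            self.last_seen[badge_id] = (room, timestamp_s)

    def locate(self, badge_id, now_s):
        # Returns (room, age of the sighting in seconds), or None if never seen.
        if badge_id not in self.last_seen:
            return None
        room, t = self.last_seen[badge_id]
        return room, now_s - t


def max_displacement_m(walking_speed_mps, interval_s=BEACON_INTERVAL_S):
    """Distance a person may cover between two consecutive beacons."""
    return walking_speed_mps * interval_s


server = LocationServer()
server.report("badge-7", "Room 12", 0.0)
server.report("badge-7", "Room 14", 15.0)
print(server.locate("badge-7", now_s=20.0))
print(max_displacement_m(1.4))  # assumed typical walking speed in m/s
```

At roughly 1.4 m/s, a person can move about 21 m between beacons, several times the 6 m IR range, so a sighting can already be out of date by more than a room by the time the next one arrives.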
