In digital marketing, experimental units are available in very large quantities – think website visitors. However, measurement can sometimes be problematic – what constitutes a “successful” website visit? Is success an immediate purchase or a purchase three weeks later? How can you tell the same visitor returned three weeks later? Control of treatments can be difficult. We can put an ad on a webpage that a customer visited, but how do we know he actually saw the ad? In the case of television, we can run a commercial, but how do we know who actually saw it? How do we know which of the persons who bought our product this week have seen the ad? These are some of the problems we will deal with in later chapters.

1.4.3 Most Experiments Fail

It is important to remember two things: the purpose of an experiment is to test an idea, not to prove something, and most experiments fail! This may sound depressing, but experimentation can be hugely effective if you create a process that allows bad ideas to fail quickly and with minimal investment:

“[Our company has] tested over 150 000 ideas in over 13 000 MVT [multivariate testing] projects during the past 22 years. Of all the business improvement ideas that were tested, only about 25 percent (one in four) actually produced improved results; 53 percent (about half) made no difference (and were a waste of everybody's times); and 22 percent (that would have been implemented otherwise) actually hurt the results they were intended to help” (Holland and Cochran, 2005, p. 21).

“Netflix considers 90% of what they try to be wrong” (Moran, 2007, p. 240).

“I have observed the results of thousands of business experiments. The two most important substantive observations across them are stark. First, innovative ideas rarely work. When a new business program is proposed, it typically fails to increase shareholder value versus the previous best alternative” (Manzi, 2012, p. 13).

Writing of the credit card company Capital One (Goncalves, 2008, p. 27): “We run thirty thousand tests a year, and they all compete against each other on the basis of economic results. The vast majority of these experiments fail, but the ones that succeed can hit very big[.]”

“Given a ten percent chance of a 100 times payoff, you should take that bet every time. But you're still going to be wrong nine times out of ten.” Amazon CEO Jeff Bezos wrote this in his 2016 letter to shareholders.

“Economic development builds on business experiments. Many, perhaps most experiments fail” (Eliasson, 2010, p. 117).

You are not going to get useful results from most of the experiments that you conduct. But, as Thomas Edison said of inventing the lightbulb, “I have not failed. I've just found 10 000 ways that didn't work.” Failed experiments are not worthless; they can contain much useful information: Why didn't the experiment work? Did we maintain false assumptions? Was the design faulty? Everything learned from a failed experiment can help make the next experiment better.

When dealing with human subjects, where effects are small and there is a lot of noise, there can be a tendency toward false positives (especially when sample sizes are small!), so follow‐up experiments are important to confirm that an effect discovered in a small‐sample experiment really exists.
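To see why small samples invite false positives, the sketch below combines a test's power with the share of tested ideas that actually work, giving the fraction of statistically significant "wins" that reflect a real effect. All inputs are assumptions chosen for illustration: 10% of ideas truly work (echoing the Netflix quote), a baseline sign‐up rate of 5.0% rising to 5.5% for the ideas that work, and a two‐sided two‐proportion z‐test at the 5% level using the normal approximation. The function names are hypothetical.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal CDF computed with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def power_two_proportions(p_a: float, p_b: float, n_per_arm: int, z_crit: float = 1.96) -> float:
    """Approximate power of a two-sided two-proportion z-test (normal approximation)."""
    se = math.sqrt(p_a * (1 - p_a) / n_per_arm + p_b * (1 - p_b) / n_per_arm)
    shift = abs(p_b - p_a) / se
    # Probability that the test statistic falls beyond either critical value.
    return normal_cdf(shift - z_crit) + normal_cdf(-shift - z_crit)


def share_of_wins_that_are_real(prior_real: float, power: float, alpha: float = 0.05) -> float:
    """Fraction of statistically significant results that reflect a genuine effect."""
    true_wins = prior_real * power          # real ideas that the test detects
    false_wins = (1 - prior_real) * alpha   # useless ideas that pass by chance
    return true_wins / (true_wins + false_wins)


# Assumed scenario: 10% of ideas work, lifting sign-ups from 5.0% to 5.5%.
PRIOR_REAL = 0.10
results = {}
for n in (500, 50_000):
    power = power_two_proportions(0.050, 0.055, n)
    results[n] = share_of_wins_that_are_real(PRIOR_REAL, power)
    print(f"n per arm = {n:>6}: power = {power:.2f}, share of wins that are real = {results[n]:.2f}")
```

Under these assumptions, most "wins" from the 500‐per‐arm tests are false positives, while most wins from the 50 000‐per‐arm tests are real; a follow‐up experiment helps tell the two apart.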

Even with large samples, it is best to make sure that a discovered effect really exists. In webpage development, an experiment to optimize a webpage might prove fruitful, yet the improvement is typically not rolled out to all users immediately. Instead, it might first be rolled out to 5% of users to guard against the possibility that some unforeseen development renders the improvement futile or, worse, harmful. Only after it has been deemed successful with the 5% sample is it rolled out to all users.
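One common way to implement such a staged rollout, sketched here as an assumption rather than as a method the text prescribes, is to hash each user ID into a bucket between 0 and 100 and enable the new page only for users whose bucket falls below the rollout percentage. Because the hash is deterministic, a user sees the same version on every visit, and everyone in the 5% stage stays included when the rollout widens. The function names and the feature label "new-page" are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> float:
    """Map a user deterministically to a number in [0, 100) for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    # Use the first 8 hex digits (32 bits) and scale them to a percentage.
    return int(digest[:8], 16) / 2**32 * 100


def in_rollout(user_id: str, feature: str, percent: float) -> bool:
    """True if the user's bucket lies below the current rollout percentage."""
    return rollout_bucket(user_id, feature) < percent


# Stage 1: expose the improved page to roughly 5% of (hypothetical) users.
users = [f"user-{i}" for i in range(100_000)]
stage_1 = [u for u in users if in_rollout(u, "new-page", 5)]
print(f"Share of users in the 5% stage: {len(stage_1) / len(users):.3f}")
```

Raising `percent` later (to 20, then 100) only adds users; nobody already exposed is switched back, which keeps the monitored experience consistent.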

1.4.1 Suppose the company in the invoice example billed quarterly and had, on average, $10 million in accounts receivable each quarter. If short‐term money costs 6%, how much does the company save?

1.4.2 Give an example of a business hypothesis, e.g. we think that raising price from $2 to $2.25 won't cost us sales. Describe an experiment to test your hypothesis. What data need to be collected? How should the data be collected?

1.4.3 Find an example of a business experiment reported in the popular business literature, e.g. Forbes or The Wall Street Journal.

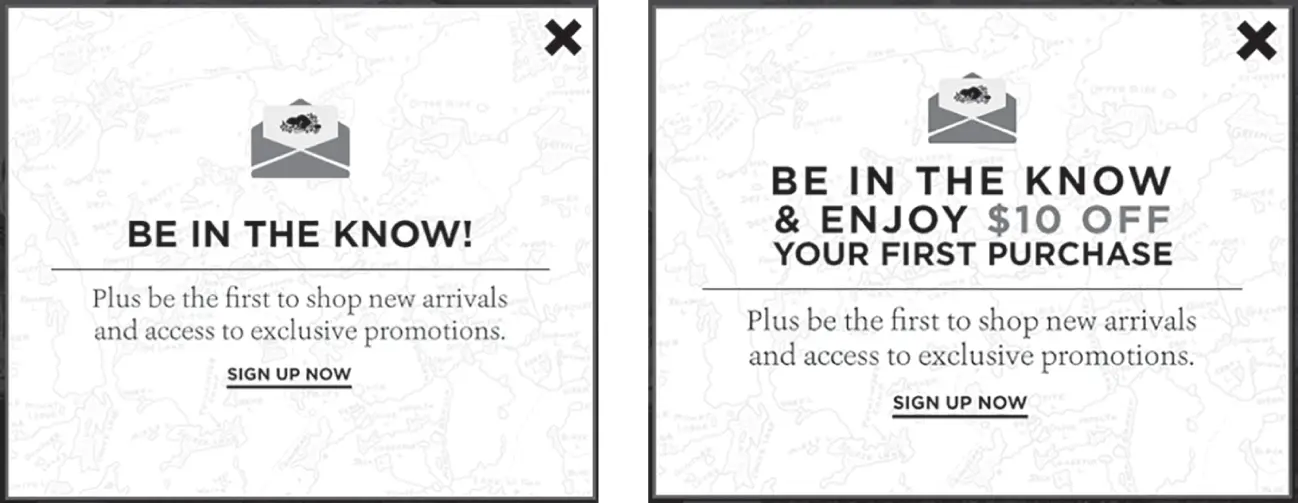

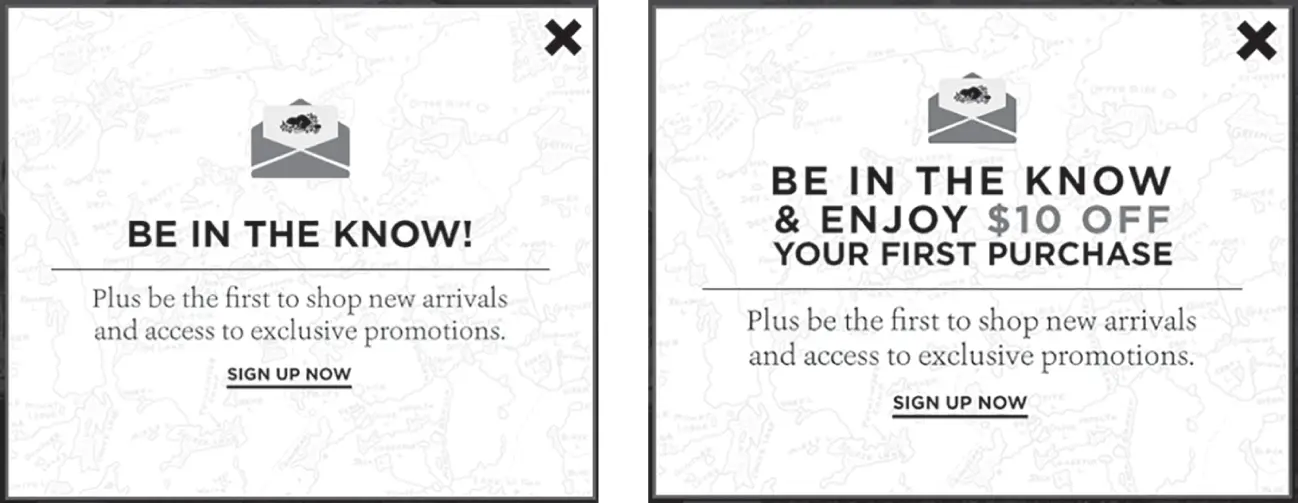

1.5 Improving Website Designs

One of the most popular types of experiment in business is the A/B website test. For example, Figure 1.4 shows two different versions of an offer made to website visitors of an iconic clothing retailer to induce them to sign up for the retailer's mailing list. The rationale for the test was that these visitors were already at the website and knew about the store and its products, so maybe a monetary inducement was unnecessary. If, indeed, it was unnecessary, then the $10 coupon would be just giving money away needlessly. Visitors to the website are randomly shown one of the two ads. The two groups are typically labeled “A” and “B,” thus the name “A/B testing.” Digital analytics software allows website owners to track the online behavior of visitors in each group, such as what customers click on, what files they download, and whether they make a purchase, allowing comparison between the two groups. In this case, the software tracked whether a visitor signed up for the mailing list or not. A test like this will typically run for a few days or weeks, until enough users have visited the page so that we have a good idea of which version is performing better. Once we have the results of the test, the retailer can deploy the better ad to all visitors. In this case, over a 30‐day period, 400 000 visitors were randomly assigned to see one of the two ads. Do you think a $10 coupon really mattered to people who spent hundreds of dollars on clothes?

In this test, the $10 incentive really did make a difference and resulted in more sign‐ups. While it may not be surprising that the version with the $10 incentive won the test, the test gives us a quantitative estimate of how much better this version performs: it increased sign‐ups by 300% compared with the version without the incentive. The reason tests like this have become so popular is that they allow us to measure the causal impact of the landing page version on outcomes such as sign‐ups or sales. The landing pages were assigned to users at random, so when we average over a large number of users and see a difference between the A users and the B users, we can attribute that difference to the landing page rather than to anything else, apart from chance variation. We'll discuss causality and testing more in Chapter 3.
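The comparison between the A and B groups can be sketched as follows, assuming the analytics software exports the number of visitors and sign‐ups in each group. The hypothetical helper below computes each group's sign‐up rate, the relative lift of B over A, and a two‐sided p‐value from a standard pooled two‐proportion z‐test (normal approximation). The counts in the usage example are made up for illustration; the text does not report the retailer's raw counts.

```python
import math


def compare_signup_rates(signups_a: int, n_a: int, signups_b: int, n_b: int) -> dict[str, float]:
    """Estimate the effect of version B versus version A from randomized counts."""
    rate_a, rate_b = signups_a / n_a, signups_b / n_b
    # Pooled rate: under "no difference", both groups share one sign-up rate.
    pooled = (signups_a + signups_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided: 2 * (1 - Phi(|z|))
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "relative_lift": (rate_b - rate_a) / rate_a,  # 3.0 would mean +300%
        "z": z,
        "p_value": p_value,
    }


# Hypothetical counts for illustration only (not the retailer's data).
result = compare_signup_rates(signups_a=1_000, n_a=100_000, signups_b=1_500, n_b=100_000)
print(f"A: {result['rate_a']:.3%}  B: {result['rate_b']:.3%}  "
      f"lift: {result['relative_lift']:+.0%}  p-value: {result['p_value']:.2g}")
```

Because assignment was random, the lift estimates the causal effect of the page version, and the p‐value indicates how plausible a gap that large would be from chance alone.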

Figure 1.4 A/B test for mailing list sign‐ups.

Source: courtesy GuessTheTest.com.

Website A/B testing has become so popular that nearly every large website has an ongoing testing program, often conducting dozens of tests every month on every possible feature of the website: colors, images, fonts, text copy, layouts, rules governing when pop‐ups or banners appear, etc. Organizations such as GuessTheTest.com regularly feature examples of tests and invite the reader to guess which version of a website performed better. (The example in Figure 1.4 was provided by GuessTheTest.com.) In Figures 1.5–1.7, we give three more example website tests where users were randomly assigned to see one of two different versions of a website. As you read through them, try to guess which version performed better or whether the two performed the same.

Website tests can also span multiple pages of a site. For example, an online retailer wanted to know how best to display images of skirts on their website. Should the skirt be shown as part of a complete outfit (left image in Figure 1.5), or should the image of the skirt be shown with the model's torso and face cropped out to better show the details of the skirts? In this test, users were assigned to one of the two treatments and then shown either full or cropped images for every skirt on the product listing pages. (Doing this requires a bit more setup than the simple one‐page tests but is still possible with most testing software.) The website analytics software measured the sales of skirts (total revenue in $) for the two groups. Which images do you think produced more skirt sales?
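The extra setup for a multi‐page test mainly consists of keeping each user in the same treatment on every product listing page. One simple way to do this, sketched here under the assumption of an in‐memory store, is to randomize each user once on first appearance and reuse that assignment afterwards, then total skirt revenue by group. The class name, variant labels, page names, and purchase amounts are hypothetical; a real testing tool would persist assignments (for example in a cookie or database).

```python
import random
from collections import defaultdict


class StickyAssigner:
    """Assign each user to a variant once, then reuse that variant on every page."""

    def __init__(self, variants: list[str], seed: int = 0) -> None:
        self.variants = variants
        self.rng = random.Random(seed)
        self.assignments: dict[str, str] = {}

    def variant_for(self, user_id: str) -> str:
        # Randomize only on the user's first appearance; afterwards, look it up.
        if user_id not in self.assignments:
            self.assignments[user_id] = self.rng.choice(self.variants)
        return self.assignments[user_id]


assigner = StickyAssigner(["full_outfit", "cropped"])

# The same visitor sees the same image style on every listing page.
for page in ["skirts-page-1", "skirts-page-2", "skirts-page-3"]:
    print(page, assigner.variant_for("visitor-42"))

# Total skirt revenue ($) per group, from hypothetical purchases.
revenue = defaultdict(float)
for user_id, amount in [("visitor-42", 59.0), ("visitor-7", 85.0), ("visitor-42", 40.0)]:
    revenue[assigner.variant_for(user_id)] += amount
print(dict(revenue))
```

Assigning at the user level rather than the page level matters here: if a visitor saw full images on one page and cropped images on the next, revenue could not be credited to either treatment.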
