The learning rates were found to be 0.00001 for valence and 0.001 for arousal, with a gradient momentum of 0.9. These models yielded improvements of 4.51% and 4.96% in classifying valence and arousal respectively, with 2 classes (High/Low) for valence and 3 classes (High/Normal/Low) for arousal. The learning rate is marginally more useful, but dropout probability secures the best classification across levels. They also noted that a wrong choice of activation function, especially in the first CNN layer, will cause severe defects in the models. The models were highly accurate compared with those of previous researchers, supporting the view that neural networks are key to EEG-based emotion classification, a step toward unlocking the brain.

Hence Deep Neural Networks are used to analyze human emotions and classify them using PSD and frontal asymmetry features. Training models for the emotion dataset are created to identify its instances. Emotions are of 2 types: discrete, classified as a synchronized response in neural anatomy, physiology and morphological expressions; and dimensional, i.e., they can be represented by a small number of underlying affective dimensions, in other words, as vectors in a multidimensional space.

The aim of this paper is to identify excitement, meditation, boredom and frustration from the DEAP emotion dataset using a classification algorithm. Python is used, together with libraries such as scikit-learn, SciPy and Keras. The DEAP dataset contains physiological readings of 32 participants recorded at a sampling rate of 512 Hz; a bandpass frequency filter with a range of 4.0 to 45.0 Hz was applied and EOG artifacts were eliminated. Power Spectral Density (PSD), based on the Fast Fourier Transform, decomposes the data into 4 distinct frequency ranges, i.e., theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz) and gamma (30–40 Hz), using the avgpower function available in Python’s Signal Processing toolbox. The left hemisphere of the brain is more frequently activated with positive valence, and the right hemisphere with negative valence.
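The band decomposition described above can be illustrated with a minimal sketch. It does not reproduce the avgpower function mentioned in the text; it assumes a plain FFT periodogram with a Hann window, and the function name `band_powers` and the synthetic 10 Hz test signal are illustrative only.

```python
import numpy as np

# Frequency bands as listed in the text (Hz).
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 40)}

def band_powers(signal, fs):
    """Return the mean periodogram value inside each band (hypothetical helper)."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                          # remove the DC offset
    window = np.hanning(len(x))               # taper to reduce spectral leakage
    spectrum = np.fft.rfft(x * window)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    # Periodogram estimate; the constant scaling does not affect band comparisons.
    psd = (np.abs(spectrum) ** 2) / (fs * np.sum(window ** 2))
    powers = {}
    for name, (low, high) in BANDS.items():
        mask = (freqs >= low) & (freqs < high)
        powers[name] = float(psd[mask].mean())
    return powers

# Synthetic example: a 10 Hz sine should load mostly on the alpha band.
fs = 512                                      # sampling rate quoted in the text
t = np.arange(0, 4, 1.0 / fs)
demo = band_powers(np.sin(2 * np.pi * 10 * t), fs)
```

In practice an averaged estimate over several windows (for example Welch's method) would usually give smoother band powers than a single periodogram.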

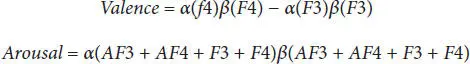

Emotion estimation on EEG frontal asymmetry:

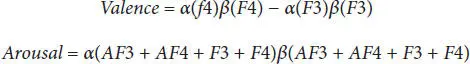

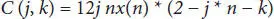

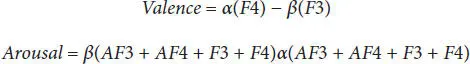

“Ramirez et al. classified emotional states by computing levels of arousal as prefrontal cortex and valence levels as below”:

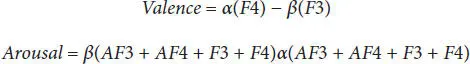

“Whenever the arousal was computed as beta to alpha activity ratio in frontal cortex, valence was computed as relative frontal alpha activity in right lobe compared to left lobe as below”:

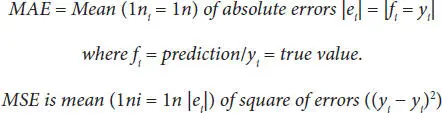

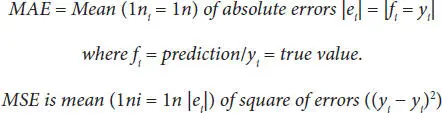

“A time-frequency transform was used to extract spectral features alpha (8–11 Hz) and beta (12–29 Hz). Lastly Mean absolute error (MAE), Mean squared error (MSE) and Pearson Correlation (Corr) is used.”
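The three evaluation measures named in the quotation can be computed as in the sketch below. The function name `regression_metrics` and the sample values are illustrative assumptions, not data from the cited work.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return MAE, MSE and Pearson correlation between targets and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    mae = float(np.mean(np.abs(err)))                  # mean absolute error
    mse = float(np.mean(err ** 2))                     # mean squared error
    corr = float(np.corrcoef(y_true, y_pred)[0, 1])    # Pearson correlation
    return {"mae": mae, "mse": mse, "corr": corr}

# Made-up ratings: predictions are offset by a constant 0.5.
metrics = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 3.5, 4.5])
```

A constant offset leaves the correlation at 1 while MAE and MSE are non-zero, which is why the three measures are typically reported together.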

Scaling the ratings (1–9) into High and Low valence and arousal, we see that frustration and excitement appear as high arousal in the low-valence and high-valence areas respectively, whereas meditation and boredom appear as low arousal in the high-valence and low-valence areas respectively.
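The mapping from the valence–arousal plane to the four target emotions can be written as a small lookup. This is a sketch only: the threshold of 5 (the midpoint of the 1–9 scale) and the function name are assumptions, since the text does not state where High and Low are split.

```python
def emotion_from_ratings(valence, arousal, threshold=5.0):
    """Map 1-9 valence/arousal ratings to one of the four emotions in the text.

    The threshold (scale midpoint) is an assumption, not a value from the source.
    """
    high_valence = valence > threshold
    high_arousal = arousal > threshold
    if high_arousal:
        # High arousal: excitement (high valence) or frustration (low valence).
        return "excitement" if high_valence else "frustration"
    # Low arousal: meditation (high valence) or boredom (low valence).
    return "meditation" if high_valence else "boredom"

label = emotion_from_ratings(valence=7.5, arousal=8.0)
```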

The DNN classifier has 2,184 input units, with each hidden layer having 60% of its predecessor’s units. Training was done using roughly 10% of the dataset, divided into a training set, a validation set and a test set. With a dropout of 0.2 for the input layer and 0.5 for the hidden layers, the model recognized arousal and valence with rates of 73.06% (73.14%), 60.7% (62.33%), and 46.69% (45.32%) for 2, 3, and 5 classes, respectively. The kernel-based classifier was observed to have better accuracy than other methods such as Naïve Bayes and SVM. Feature extraction produced a set of 2,184 unique features describing EEG activity during each trial. These extracted features were used to train a DNN classifier and a random forest classifier. This was especially successful for BCI, where datasets are huge.
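The 60% shrinkage rule and the dropout settings can be made concrete with a framework-independent sketch. The number of hidden layers (3), the rounding rule and the softmax output are assumptions, as the text does not specify them; the function names are illustrative.

```python
def hidden_layer_sizes(n_inputs=2184, ratio=0.6, n_hidden=3):
    """Each hidden layer has `ratio` times the units of its predecessor.

    n_hidden=3 and rounding to the nearest integer are assumptions.
    """
    sizes = []
    units = n_inputs
    for _ in range(n_hidden):
        units = round(units * ratio)
        sizes.append(units)
    return sizes

def layer_plan(n_inputs=2184, n_classes=2, input_dropout=0.2, hidden_dropout=0.5):
    """Describe the network as (layer type, value) pairs, independent of any library."""
    plan = [("input", n_inputs), ("dropout", input_dropout)]
    for units in hidden_layer_sizes(n_inputs):
        plan.append(("dense", units))
        plan.append(("dropout", hidden_dropout))
    plan.append(("softmax", n_classes))   # assumed output layer
    return plan

sizes = hidden_layer_sizes()
```

Such a plan could then be translated into Keras layers one entry at a time, with `n_classes` set to 2, 3 or 5 to match the reported experiments.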

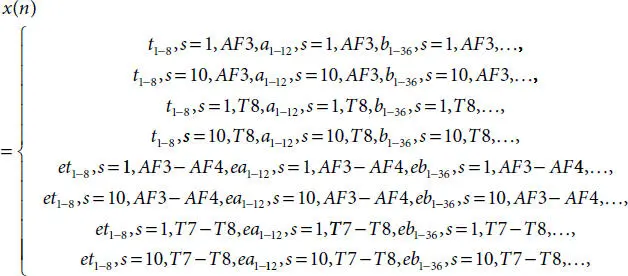

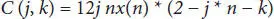

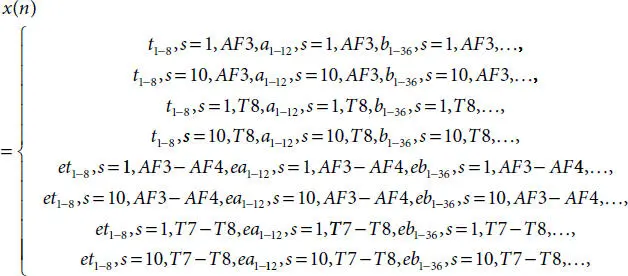

Emotion monitoring using LVQ and EEG is used to identify emotions for medical therapy and rehabilitation. [3] proposes a real-time monitoring system for human emotions using wavelets and “Learning Vector Quantization” (LVQ). Training data from 10 trials with 10 subjects, “3 classes and 16 segments (equal to 480 sets of data)”, is processed within 10 seconds and decomposed into 4 frequency bands. These bands then become the input to LVQ, which sorts them into excited, relaxed or sad emotions. Alpha waves appear frequently when people are relaxed, beta waves occur when people think, theta waves occur when people are under stress, tired or sleepy, and delta waves occur when people are in deep sleep. EEG data is captured using an Emotiv Insight wireless EEG headset on 10 participants. They used wireless EEG electrodes at “AF3”, “T7”, “T8” and “AF4” with a 128 Hz sampling frequency to record in the morning, at noon and at night. Each set contains 1,280 points (10 s at 128 Hz), taken from 3 min recordings segmented every 10 s. Each participant is analyzed in excited, relaxed or sad states. Using the “LVQ wavelet transform”, the EEG was extracted into the required frequency bands. The “discrete wavelet transform (DWT)” of a signal x(n) is described as follows:

The function in this expression is known as the wavelet basis function. The approximation signal results from convolving the original signal with the low-pass filter, while the detail signal results from convolving it with the high-pass filter.

where x(n) is the original signal, g(n) are the low-pass filter coefficients, h(n) are the high-pass filter coefficients, and k and n are indices running from 1 to the length of the signal.

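Scale function coefficients (low-pass filter): $g_0 = \frac{1-\sqrt{3}}{4\sqrt{2}}$, $g_1 = \frac{3-\sqrt{3}}{4\sqrt{2}}$, $g_2 = \frac{3+\sqrt{3}}{4\sqrt{2}}$, $g_3 = \frac{1+\sqrt{3}}{4\sqrt{2}}$

Wavelet function coefficients (high-pass filter): $h_0 = \frac{1-\sqrt{3}}{4\sqrt{2}}$, $h_1 = -\frac{3-\sqrt{3}}{4\sqrt{2}}$, $h_2 = \frac{3+\sqrt{3}}{4\sqrt{2}}$, $h_3 = -\frac{1+\sqrt{3}}{4\sqrt{2}}$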
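As an illustration of how these four-tap filters act on a signal, the sketch below builds them numerically and applies one filter-and-downsample step. The coefficients are read as Daubechies-4 values of the form (a ± √3)/(4√2); the function name `dwt_level`, the convolution mode and the downsampling phase are assumptions, and the code does not claim to reproduce the exact decomposition used in [3].

```python
import numpy as np

SQRT3 = np.sqrt(3.0)
NORM = 4.0 * np.sqrt(2.0)

# Low-pass (scale function) coefficients g0..g3, in the order listed in the text.
g = np.array([1 - SQRT3, 3 - SQRT3, 3 + SQRT3, 1 + SQRT3]) / NORM
# High-pass (wavelet function) coefficients h0..h3, with alternating signs.
h = np.array([1 - SQRT3, -(3 - SQRT3), 3 + SQRT3, -(1 + SQRT3)]) / NORM

def dwt_level(x):
    """One decomposition level: filter, then keep every second sample."""
    x = np.asarray(x, dtype=float)
    approx = np.convolve(x, g, mode="full")[1::2]   # low-pass branch
    detail = np.convolve(x, h, mode="full")[1::2]   # high-pass branch
    return approx, detail

# A constant signal has no detail content away from the edges.
approx, detail = dwt_level(np.ones(16))
```

The low-pass coefficients sum to √2 and the high-pass coefficients sum to 0, so a constant input passes through the approximation branch and is suppressed in the detail branch, except near the signal boundaries.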

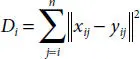

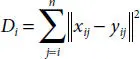

When the class label of each input is known, a supervised version of vector quantization called “Learning Vector Quantization” can be used to obtain the class, which depends on the Euclidean distance between the reference vectors and the weights. The class of each training sample was compared based on:

Following is the series of input identification systems:

“As stated, the LVQ algorithm attempted to correct the winning weight Wi with minimum D by shifting the input by the following values:

1 If the input xi and winning wi have the same class label, then move them closer together by ΔWi(j) = B(j)(Xij − Wij).
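The update in step 1 can be sketched as a single training step. Only the same-class case comes from the text above; the opposite-class branch (moving the winner away from the input) is the usual LVQ1 convention and is included here as an assumption. The function name, the example weights and the learning rate are illustrative.

```python
import numpy as np

def lvq1_step(x, x_label, weights, weight_labels, rate):
    """Apply one LVQ update in place and return the index of the winning weight."""
    distances = np.linalg.norm(weights - x, axis=1)   # Euclidean distance D
    winner = int(np.argmin(distances))                # weight with minimum D
    delta = rate * (x - weights[winner])              # B(j) * (X - W)
    if weight_labels[winner] == x_label:
        weights[winner] += delta                      # same class: move closer
    else:
        weights[winner] -= delta                      # assumed LVQ1 rule: move away
    return winner

# Two made-up reference vectors, one per class.
weights = np.array([[0.0, 0.0], [4.0, 4.0]])
labels = ["relaxed", "excited"]
winner = lvq1_step(np.array([1.0, 1.0]), "relaxed", weights, labels, rate=0.5)
```

With a rate of 0.5 the winning vector moves halfway toward the input, while the non-winning vector is left unchanged.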
