Making Sure the Cloud Is Always Available

In this section, you'll become familiar with common deployment architectures used by many of the leading cloud providers to address availability, survivability, and resilience in their service offerings.

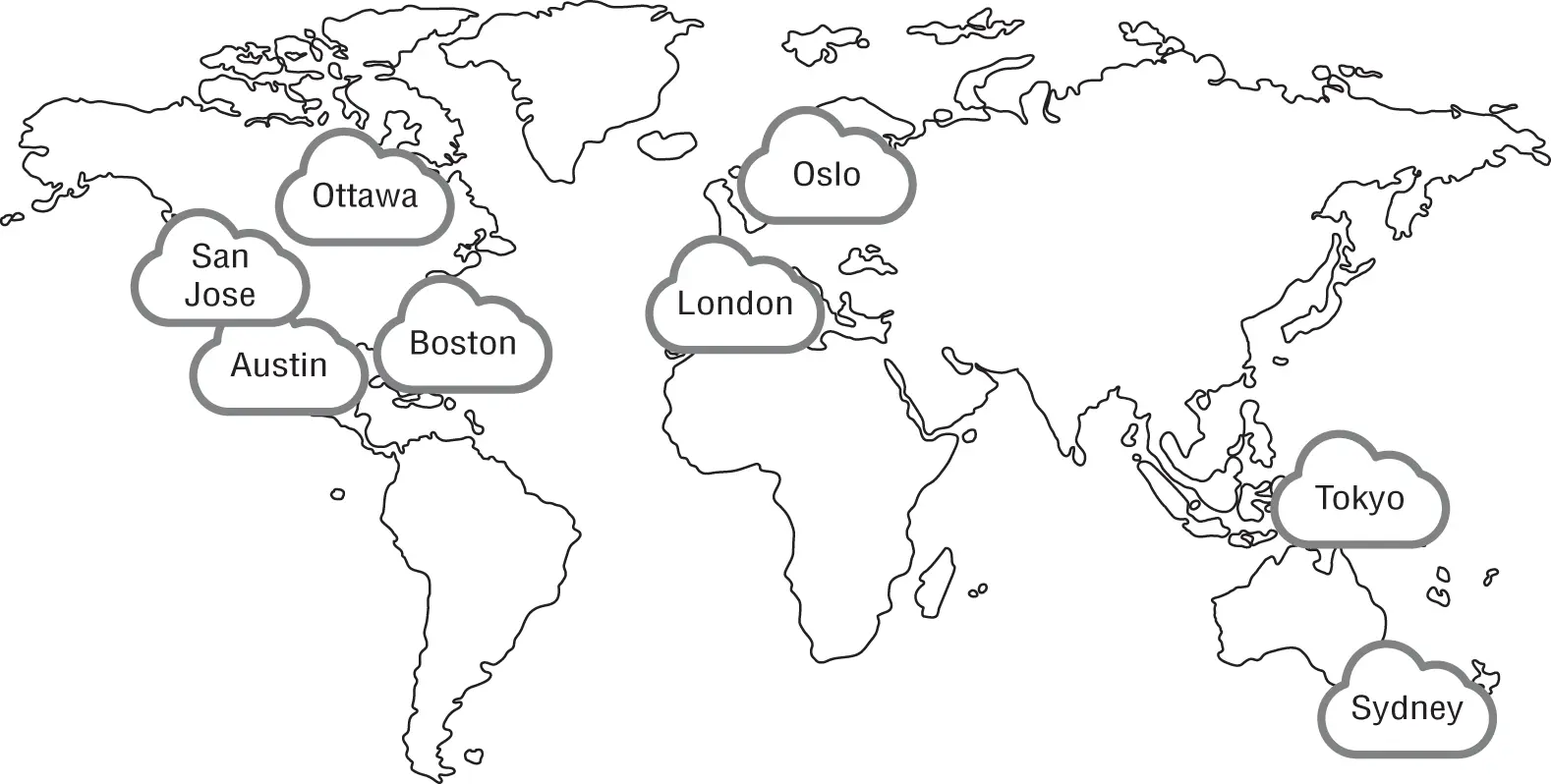

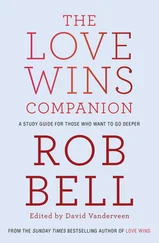

Major cloud providers partition their operations into regions for fault tolerance and to offer localized performance advantages. A region is not a monolithic data center but rather a geographical area of presence that usually falls within a defined political boundary, such as a state or country. For example, a cloud company may offer regions throughout the world, as shown in Figure 1.20. In Asia Pacific it may have regions in Sydney and Tokyo, and in Europe there may be regions called London and Oslo. In North America there could be regions in Boston, Ottawa, Austin, and San Jose.

FIGURE 1.20 Cloud regions

All of the regions are interconnected to each other and the Internet with high-speed optical networks but are isolated from each other, so if there is an outage in one region, it should not affect the operations of other regions.

Generally, data and resources in one region aren't replicated to any other regions unless you specifically configure such replication to occur. One of the reasons for this is to address regulatory and compliance issues that require data to remain in its country of origin.

When you deploy your cloud operations, you'll be given a choice of which region you want to use. For a global presence and to reduce network delays, you can also choose to replicate operations in multiple regions around the world.

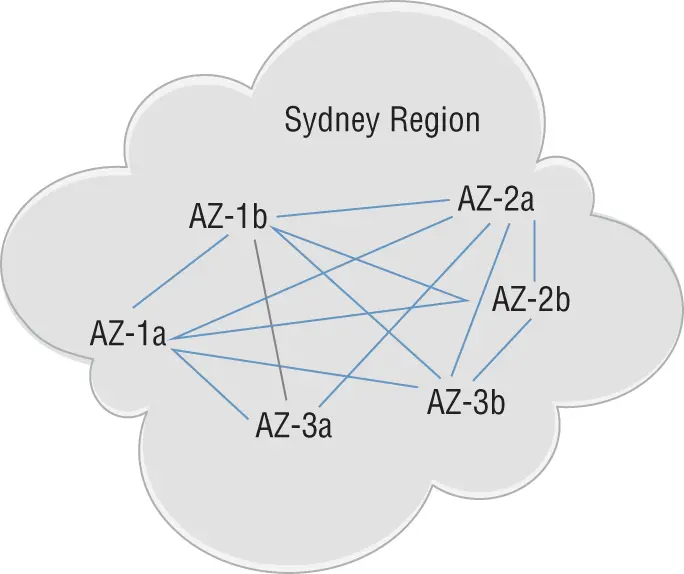

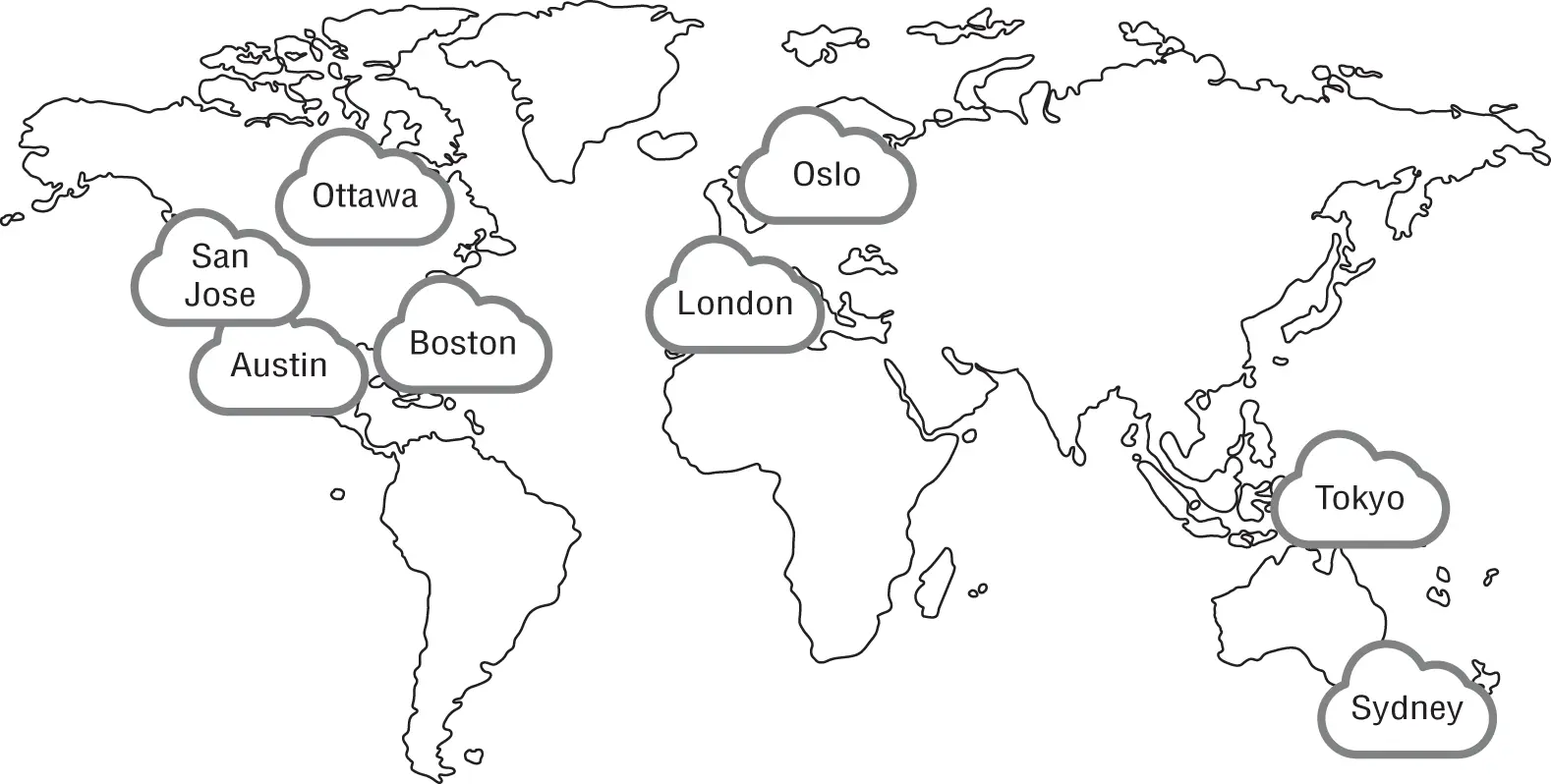

Regions are divided into one or more availability zones (AZs). Each region will usually have two or more availability zones for fault tolerance. AZs almost always correspond to individual data centers. Each AZ has its own redundant power and network connections. Within a region, AZs may be located a greater distance apart, especially if the region is in an area prone to natural disasters such as hurricanes or earthquakes. When running redundant VMs in the cloud, it's a best practice to spread them across AZs to take advantage of the resiliency that they provide. Figure 1.21 illustrates the concept of availability zones.

FIGURE 1.21 Availability zones
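
The practice of spreading redundant VMs across AZs can be sketched in a few lines of Python. This is only an illustration of the idea, not any provider's API: the function `spread_across_zones` and the VM and zone names are hypothetical, and a real deployment would pass the chosen zone to the provider's provisioning tools.

```python
from collections import Counter
from itertools import cycle


def spread_across_zones(vm_names, zones):
    """Assign each VM to an availability zone in round-robin order."""
    if not zones:
        raise ValueError("at least one availability zone is required")
    zone_cycle = cycle(zones)
    return {vm: next(zone_cycle) for vm in vm_names}


# Hypothetical VM and zone names, for illustration only.
placement = spread_across_zones(
    ["web-1", "web-2", "web-3", "web-4"],
    ["zone-a", "zone-b"],
)

# Count how many VMs landed in each zone.
vms_per_zone = Counter(placement.values())
print(placement)
print(vms_per_zone)
```

With an even spread like this, the loss of a single AZ takes down only the share of VMs that were placed in it.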

As I alluded to earlier, you can choose to run VMs on different virtualization hosts for redundancy in case one host fails. However, there are times when you want VMs to run on the same host, such as when the VMs need extremely low-latency network connectivity to one another. To achieve this, you would group these VMs into the same cluster and implement a cluster placement rule to ensure that the VMs always run on the same host. If this sounds familiar, it's because this is the same principle you read about in the discussion of hypervisor affinity rules.

This approach obviously isn't resilient if that host fails, so what you may also do is to create multiple clusters of redundant VMs that run on different hosts, and perhaps even in different AZs.
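
As a rough sketch of the two goals described above, the following Python checks a proposed placement: every VM in a cluster should share one host, and no two clusters should share a host. The function `check_cluster_placement` and all cluster, VM, and host names are assumptions made for this example; real providers enforce such rules through their own placement features.

```python
def check_cluster_placement(placement, clusters):
    """Return a list of problems found in a proposed VM placement.

    placement maps VM name -> host name.
    clusters maps cluster name -> list of VM names in that cluster.
    """
    problems = []
    host_owner = {}  # host name -> cluster that already occupies it
    for name, vms in clusters.items():
        hosts = {placement[vm] for vm in vms}
        if len(hosts) != 1:
            # The low-latency goal fails: the cluster spans several hosts.
            problems.append(f"{name}: VMs are split across hosts {sorted(hosts)}")
            continue
        host = next(iter(hosts))
        if host in host_owner:
            # The redundancy goal fails: one host failure takes out both clusters.
            problems.append(f"{name}: shares {host} with {host_owner[host]}")
        else:
            host_owner[host] = name
    return problems


# Hypothetical clusters and hosts, for illustration only.
clusters = {"cluster-1": ["app-1a", "app-1b"], "cluster-2": ["app-2a", "app-2b"]}
good = {"app-1a": "host-1", "app-1b": "host-1", "app-2a": "host-2", "app-2b": "host-2"}
bad = {"app-1a": "host-1", "app-1b": "host-1", "app-2a": "host-1", "app-2b": "host-1"}

print(check_cluster_placement(good, clusters))
print(check_cluster_placement(bad, clusters))
```

The first placement passes both checks, while the second puts both clusters on the same host and is reported as a problem.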

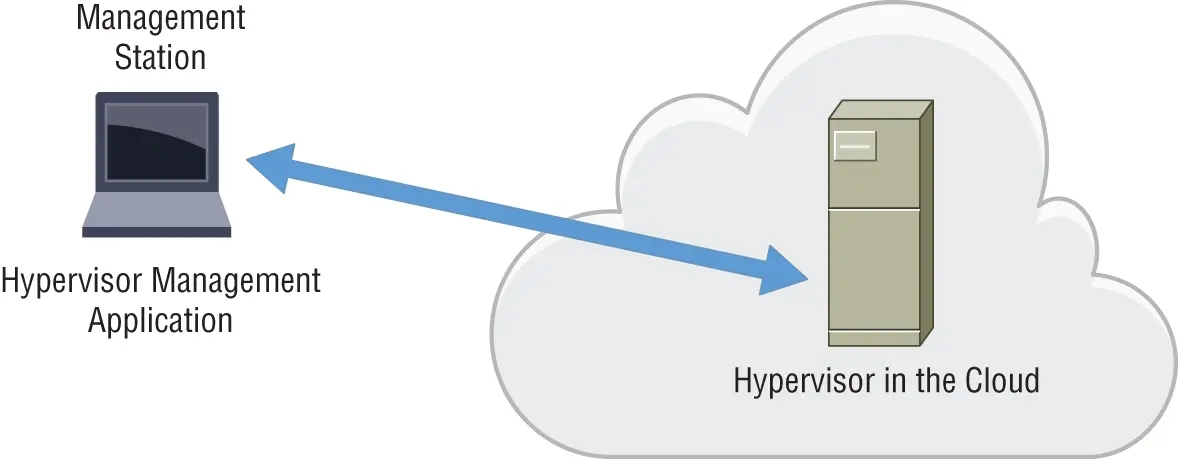

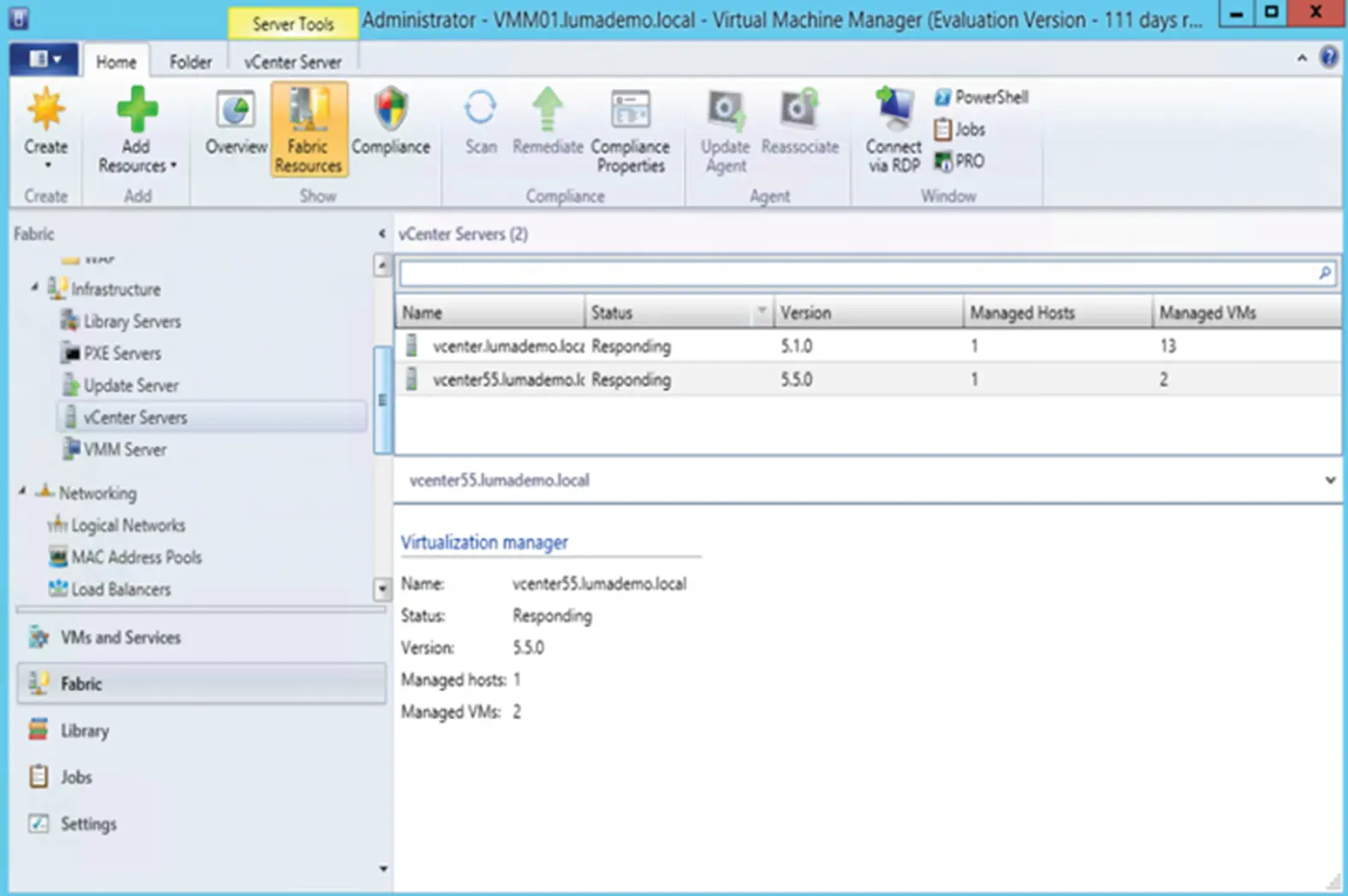

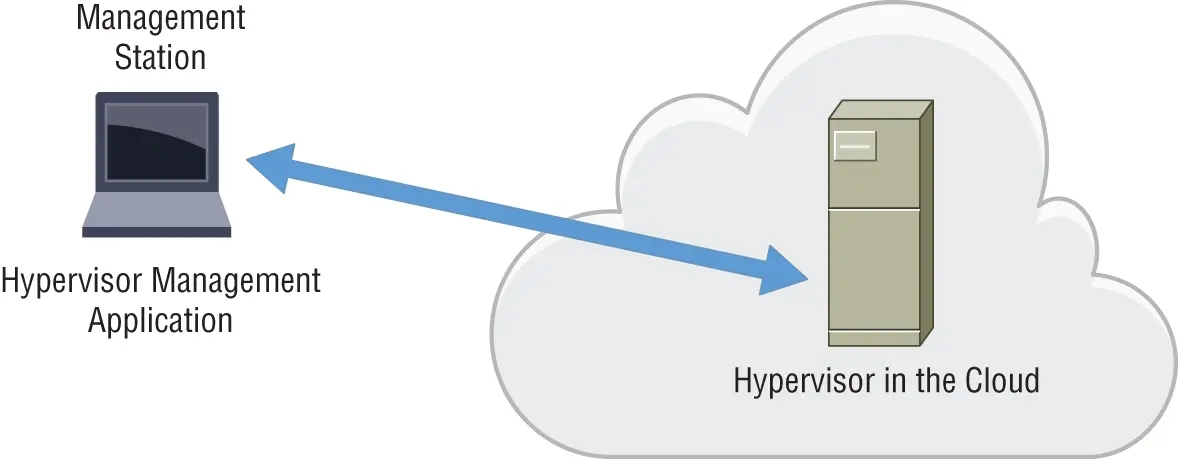

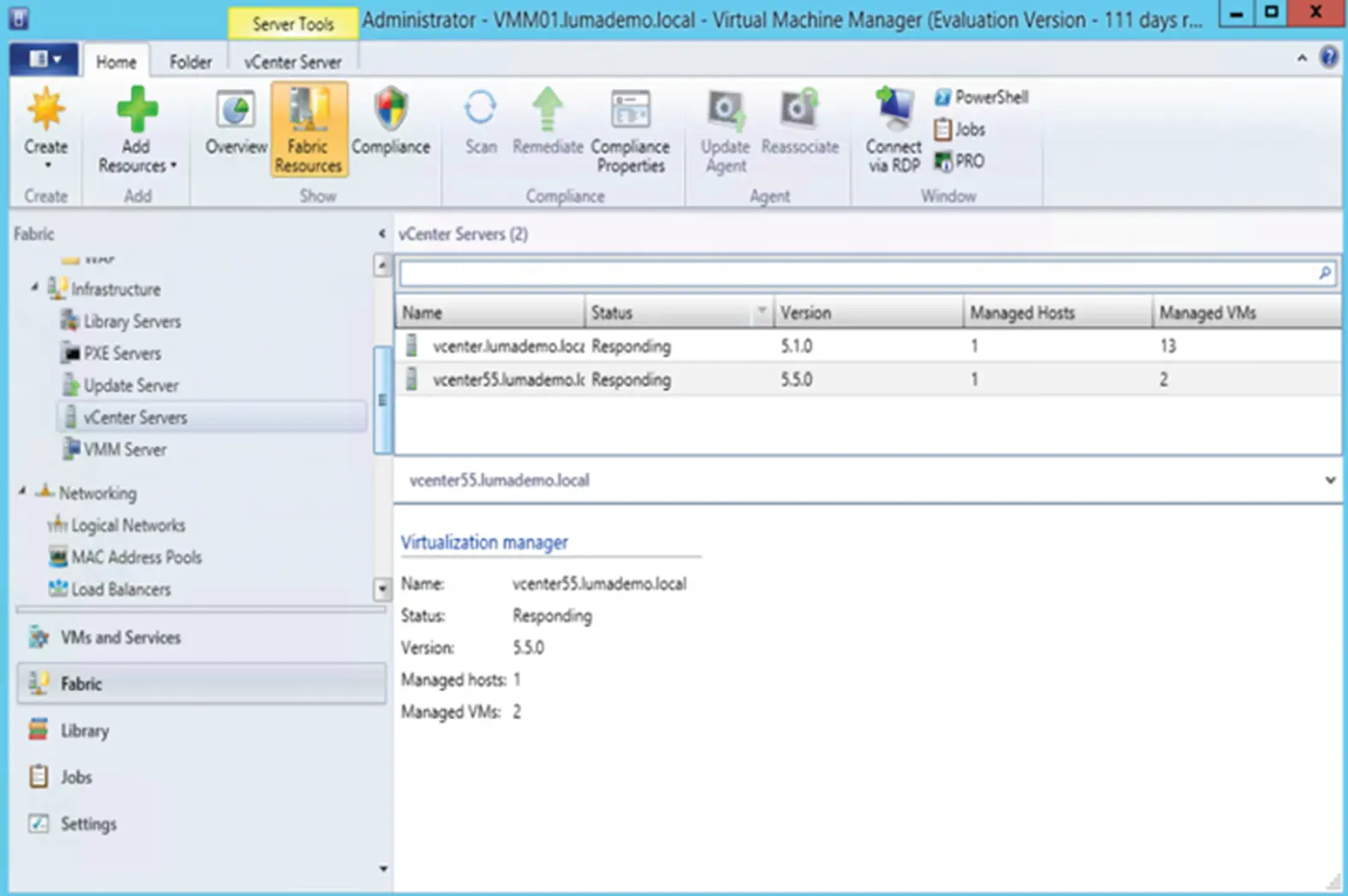

In this section, you'll learn about remote access techniques and look at what tools are available to manage and monitor your VMs. Remember that you don't have physical access to the cloud provider's data centers, so remote access is your only option for managing your servers. Furthermore, the cloud provider will not give you direct access to the hypervisor, which is typically going to be proprietary. This is unlike a traditional data center environment in which you can install a hypervisor management application on a workstation and fully configure and manage the hypervisor, as Figure 1.22 and Figure 1.23 illustrate.

FIGURE 1.22 Local computer running the hypervisor management application

FIGURE 1.23 Remote hypervisor management application

As we've discussed, your options for managing your VMs and other cloud resources are limited to the web management interface, command-line tools, and APIs that the provider gives you. But once you provision a VM, managing the OS and applications running on it is a much more familiar task. You'll manage them in almost the exact same way as you would in a data center. In fact, the whole reason providers offer IaaS services is to replicate the data center infrastructure closely enough that you can take your existing VMs and migrate them to the cloud with minimal fuss. Let's revisit some of these management options with which you're probably already familiar.

RDP Remote Desktop Protocol (RDP) is a proprietary protocol developed by Microsoft to allow remote access to Windows devices, as illustrated in Figure 1.24. RDP is invaluable for managing remote Windows virtual machines, since it allows you to work remotely as if you were locally connected to the server. Microsoft calls the application Remote Desktop Services, formerly Terminal Services. The remote desktop application comes preinstalled on all modern versions of Windows. RDP uses TCP port 3389.

FIGURE 1.24 Local computer running Remote Desktop Services to remotely access a Windows server graphical interface in the cloud

The graphical client will request the name of the remote server in the cloud, and once it's connected, you'll be presented with a standard Windows interface to log in. Then you'll see the standard Windows desktop of the remote server on your local workstation.

SSH The Secure Shell (SSH) protocol has largely replaced Telnet as a remote access method. SSH supports encryption, whereas Telnet does not, making Telnet insecure. To use SSH, the SSH service must be enabled on the VM. This is pretty much standard on any Linux distribution, and it can also be installed on Windows devices.

Many SSH clients are available, both as commercial software and as free software. The SSH client connects over the network using TCP port 22 via an encrypted connection, as shown in Figure 1.25. Once you are connected, you have a command-line interface to manage your cloud services. SSH is also commonly used to configure network devices such as switches and routers.

FIGURE 1.25 Secure Shell encrypted remote access
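
Before opening an RDP or SSH session, it can help to confirm that the relevant TCP port is reachable from your workstation. The following is a minimal sketch using Python's standard `socket` module; the helper name `is_port_open` is an assumption made for this example, and a successful TCP connection shows only that something is listening on the port, not that the remote service is healthy or that you can log in.

```python
import socket

# Default ports named in the text: RDP on TCP 3389, SSH on TCP 22.
RDP_PORT = 3389
SSH_PORT = 22


def is_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For example, `is_port_open(server_name, SSH_PORT)` would test SSH reachability for whatever server name or address you supply. A result of `False` often points to a firewall or security rule that blocks the port rather than to a problem with the VM itself.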

Monitoring Your Cloud Resources

Just because you have created an operational cloud deployment doesn't mean your work is over! You must continually monitor performance and also make sure that there are no interruptions to services. Fortunately, this function has largely been automated. As you'll learn in later chapters, cloud providers offer ways to collect and analyze performance and health metrics. You can also configure automated responses to various events, such as increased application response time or a VM going down. Also, alerts such as text messages, emails, or calls to other applications can be defined and sent in response to such events.
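
The idea of sending an alert when a metric crosses a limit can be sketched as follows. This is a simplified illustration rather than any provider's monitoring service: the `AlertRule` class, the `evaluate` function, the metric names, and the thresholds are all hypothetical, and real services typically evaluate metrics over a time window instead of a single sample.

```python
from dataclasses import dataclass


@dataclass
class AlertRule:
    metric: str       # name of the metric to watch
    threshold: float  # alert when the sampled value exceeds this
    message: str      # text to send when the rule fires


def evaluate(rules, sample, notify):
    """Check one metrics sample against each rule and notify on breaches.

    Returns the names of the metrics whose rules fired.
    """
    fired = []
    for rule in rules:
        value = sample.get(rule.metric)
        if value is not None and value > rule.threshold:
            notify(f"{rule.message} ({rule.metric}={value})")
            fired.append(rule.metric)
    return fired


# Hypothetical rules and sample values, for illustration only.
rules = [
    AlertRule("response_time_ms", 500, "Application response time is high"),
    AlertRule("failed_health_checks", 0, "VM is not responding"),
]
sample = {"response_time_ms": 850, "failed_health_checks": 0}

sent = []  # stands in for a text message, email, or call to another application
fired = evaluate(rules, sample, sent.append)
print(fired)
print(sent)
```

Here the `notify` argument is just a function, so the same rule check could hand its message to an email sender, a text-message gateway, or another application.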
