IaaS: Provision VMs, create elastic block storage volumes, create virtual networks

PaaS: Upload and execute an application written in Python, deploy a web application from a Git repository

SaaS: Send and receive email, create and collaborate on documents

Note that when it comes to the PaaS and SaaS side of things, there's considerable overlap between managing a service and using it.

Command-Line Interface, APIs, and SDKs

Cloud providers offer one or more command-line interfaces to allow scripted/programmatic management of your cloud resources. The command-line interface is geared toward sysadmins who want to perform routine management tasks without having to log in and click around a web interface.

Command-line interfaces work by using the cloud provider's APIs. In simple terms, the API allows you to manage your cloud resources programmatically. In contrast to a web management interface, in which you're clicking and typing, an API endpoint is a web service that listens for specially structured requests. Cloud provider API endpoints are usually open to the Internet, encrypted using Transport Layer Security (TLS), and require some form of authentication.
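To make "specially structured requests" concrete, here is a minimal sketch of what a CLI tool might assemble before calling an API endpoint. The endpoint URL, the `/vms` path, the JSON fields, and the bearer-token scheme are all hypothetical; real providers define their own formats and authentication methods. The request is only built, not sent.

```python
import json
import urllib.request

# Hypothetical endpoint and token, for illustration only.
API_BASE = "https://api.cloud.example.com/v1"
API_TOKEN = "replace-with-a-real-token"


def build_create_vm_request(name: str, size: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated request to create a VM."""
    # The request body is structured data, here encoded as JSON.
    body = json.dumps({"name": name, "size": size}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/vms",  # https:// means the connection would use TLS
        data=body,
        method="POST",
        headers={
            # Assumed authentication scheme: a bearer token.
            "Authorization": f"Bearer {API_TOKEN}",
            "Content-Type": "application/json",
        },
    )


request = build_create_vm_request("web-01", "small")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it over HTTPS; that step is left out because the endpoint does not exist.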

Cloud providers offer software development kits (SDKs) for software developers who want to write applications that integrate with the cloud. SDKs take care of the details of communicating with the API endpoints so that developers can focus on writing their application.

Connecting to Your Cloud Resources

How you connect to your cloud resources depends on how you set them up. As I alluded to earlier, cloud resources that you create are not necessarily reachable via the Internet by default. There are three ways that you can connect to your resources:

Internet

VPN access

Dedicated private connections

If you're hosting an application that needs to be reachable anytime and anywhere, you'll likely open it up to the Internet. If a resource is open to the Internet, it will have a publicly routable Internet IP address. This is typically going to be a web application, but it doesn't have to be. Although anywhere, anytime access can be a great benefit, keep in mind that traffic traversing the Internet is subject to high, unpredictable latency.
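As a small illustration of what "publicly routable" means, the sketch below uses Python's standard `ipaddress` module to tell private addresses from global ones. The helper name `is_publicly_routable` and the sample addresses are chosen only for this example.

```python
import ipaddress


def is_publicly_routable(address: str) -> bool:
    """Return True if the address falls in globally routable address space."""
    return ipaddress.ip_address(address).is_global


# Two private (RFC 1918) addresses and one public address.
for candidate in ("10.0.0.5", "192.168.1.20", "8.8.8.8"):
    print(candidate, is_publicly_routable(candidate))
```

A resource that has only a private address, such as the first two, cannot be reached directly from the Internet.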

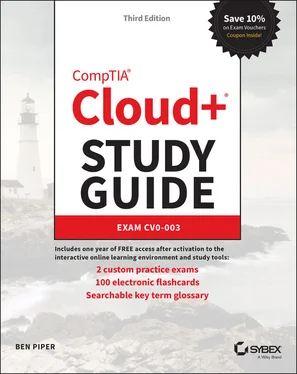

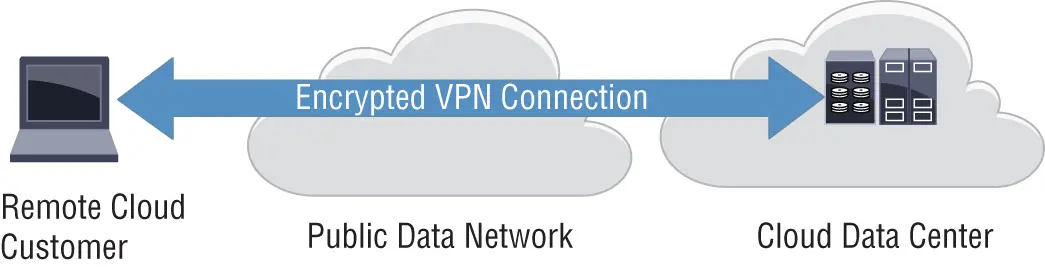

A virtual private network (VPN) allows for secure and usually encrypted connections over an insecure network (like the Internet), as shown in Figure 1.15. Usually, a VPN connection is set up between a customer-owned device and the cloud. VPNs are appropriate for applications that do not need anywhere, anytime access. Organizations often use VPNs to connect cloud resources to offices and data centers.

FIGURE 1.15 Remote VPN access to a data center

Dedicated Private Connections

Cloud providers offer connections to their data centers via private leased lines instead of the Internet. These connections offer dedicated bandwidth and predictable latency—something you can't get with Internet or VPN access. Dedicated private connections do not traverse the Internet, nor do they offer built-in encryption. Keep in mind that dedicated connections don't usually provide Internet access. For that, you'll need a separate Internet connection.

Is My Data Safe? (Replication and Synchronization)

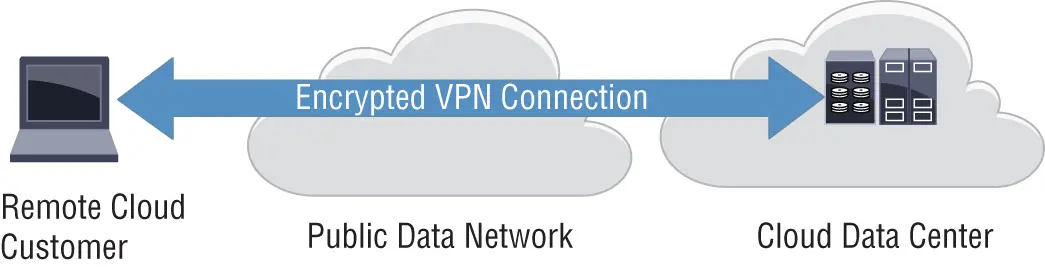

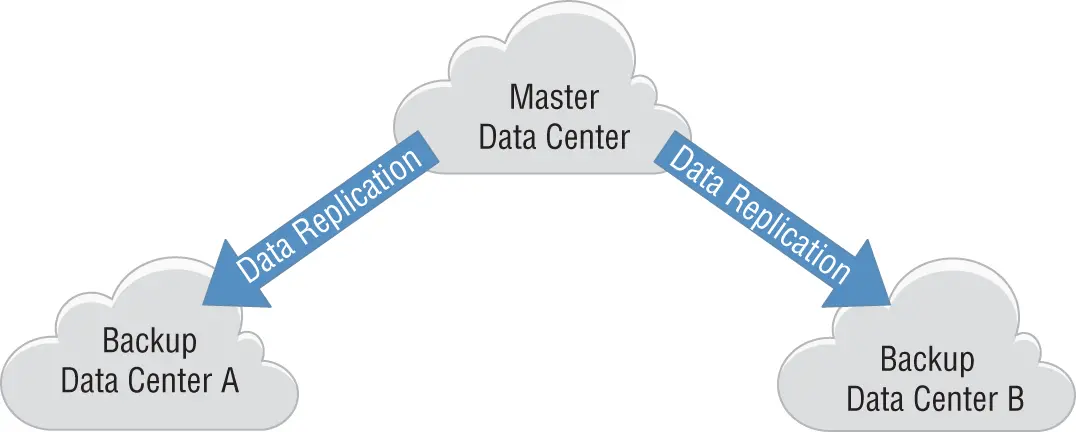

Replication is the transfer and synchronization of data between computing or storage resources, typically across multiple regions or data centers, as illustrated in Figure 1.16. For disaster recovery purposes and data security, your data must be transferred, or replicated, between data centers. Remote copies of data have traditionally been implemented with storage backup applications. With the virtualization of servers in the cloud, however, you can easily replicate complete VM instances, with all of their applications, service packs, and content, to a remote facility.

FIGURE 1.16 Site-to-site replication of data

Applications such as databases have built-in replication processes that can be utilized based on your requirements. Also, many cloud service offerings can include data replication as a built-in feature or as a chargeable option.

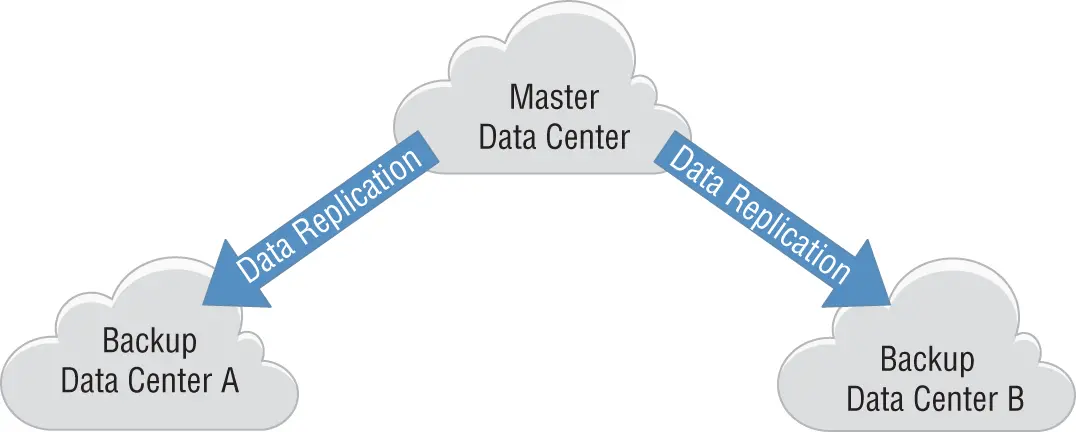

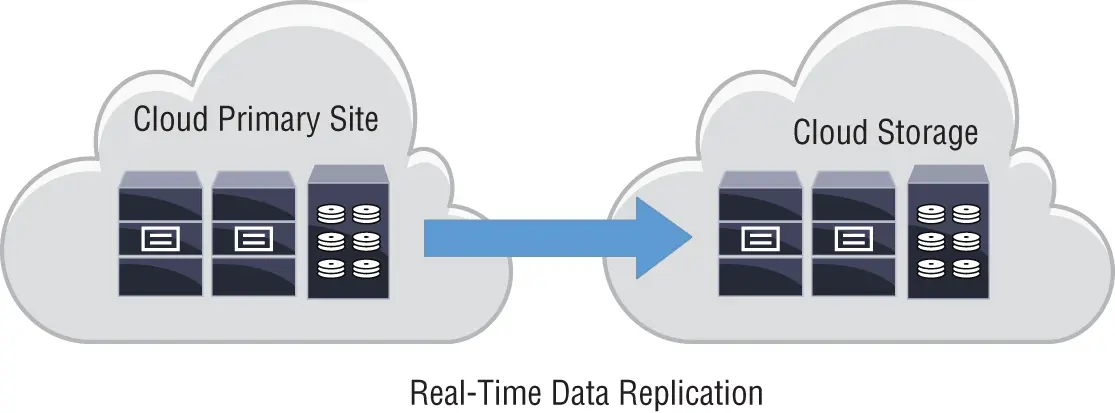

Synchronous replication is the process of replicating data in real time from the primary storage system to a remote facility, as shown in Figure 1.17. Synchronous replication lets you keep a current copy of your data at a location remote from the primary data center, and that copy can be brought online with a short recovery time and limited loss of data. Many relational database systems offer synchronous replication along with automatic failover to achieve high availability.

FIGURE 1.17 Synchronous replication
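The idea behind synchronous replication can be sketched in a few lines. This is a toy, in-memory model, not any provider's implementation; the `StorageSite` class and `synchronous_write` function are hypothetical names. The key point is that the write is acknowledged only after both sites hold the data.

```python
class StorageSite:
    """A toy storage system that keeps data in a dictionary."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def write(self, key, value):
        self.blocks[key] = value
        return True


def synchronous_write(primary, remote, key, value):
    """Acknowledge the write only after both sites have stored it."""
    primary_ok = primary.write(key, value)
    remote_ok = remote.write(key, value)  # the caller waits for this too
    return primary_ok and remote_ok


primary = StorageSite("primary-dc")
remote = StorageSite("remote-dc")
acknowledged = synchronous_write(primary, remote, "order-1001", "paid")
print(acknowledged, primary.blocks == remote.blocks)
```

Because the caller waits for the remote write, the two copies stay identical, at the cost of added latency on every write.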

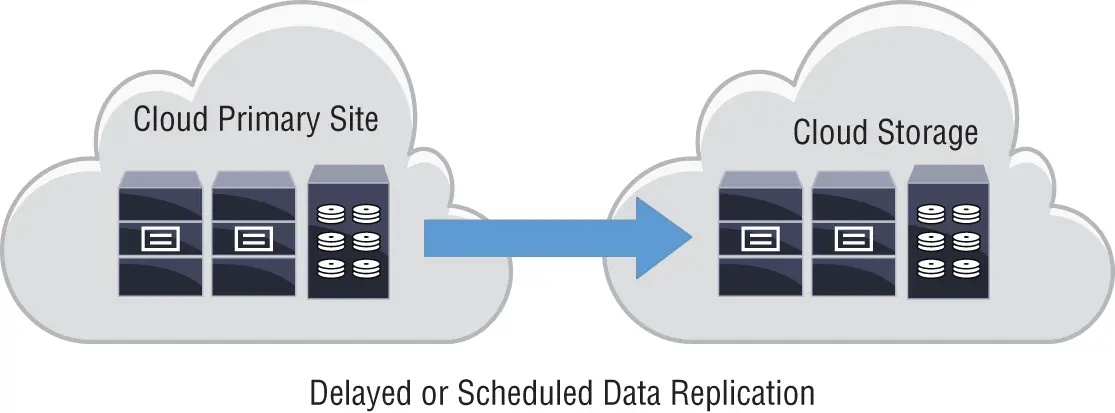

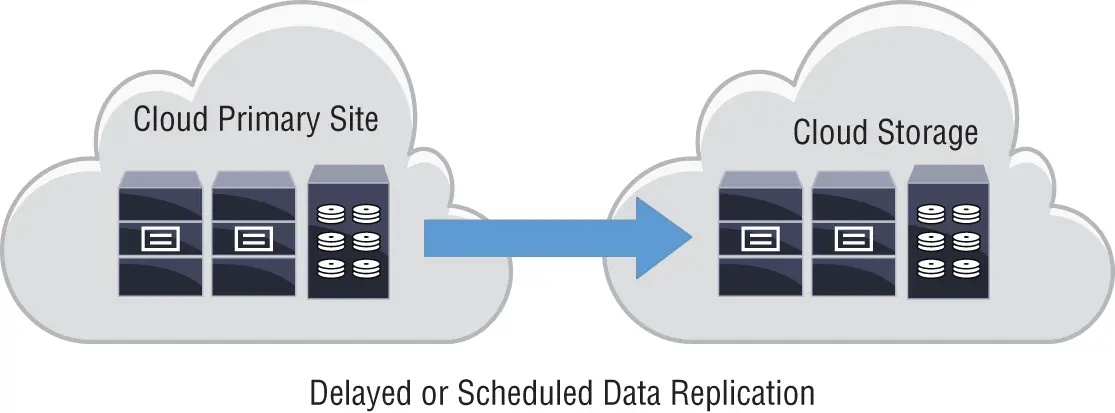

With asynchronous replication, the data is first written to the primary storage system in the primary storage facility or cloud location. After the data is stored, it is then copied to a remote location on a delayed or scheduled basis, as shown in Figure 1.18.

FIGURE 1.18 Asynchronous replication
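A minimal sketch of asynchronous replication follows, again as a toy in-memory model with hypothetical names (`AsyncReplicator`, `replicate_pending`). A write is acknowledged as soon as the primary has it, and a separate step, which would run on a delay or schedule in practice, copies the queued changes to the remote site.

```python
from collections import deque


class AsyncReplicator:
    """Toy model: write to the primary now, copy to the remote later."""

    def __init__(self):
        self.primary = {}
        self.remote = {}
        self.pending = deque()  # changes not yet copied to the remote site

    def write(self, key, value):
        """Store on the primary and acknowledge immediately."""
        self.primary[key] = value
        self.pending.append((key, value))
        return True

    def replicate_pending(self):
        """Copy queued changes to the remote site; return how many were copied."""
        copied = 0
        while self.pending:
            key, value = self.pending.popleft()
            self.remote[key] = value
            copied += 1
        return copied


store = AsyncReplicator()
store.write("order-1001", "paid")
print(store.remote)               # the remote site lags behind the primary
store.replicate_pending()
print(store.remote == store.primary)
```

Any data written after the last replication run exists only on the primary, which is why asynchronous replication can lose recent changes if the primary fails.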

One common use case for asynchronous replication involves taking scheduled snapshots of VM storage volumes and storing those snapshots offline. The snapshots may also be replicated to a remote location for safekeeping. If you ever need to restore the VM, you can do so from the snapshot.

Another example of asynchronous replication is the creation of database read replicas. When an organization needs to run intensive, complex reports against a database, it can tax the database server and slow it down. Rather than taxing the primary database server, which might be performing critical business functions, you can asynchronously replicate the data to a read replica and then run your reports against the replica.

Asynchronous replication can be more cost effective than implementing a synchronous replication offering. Cloud providers often charge for data transfer between regions or availability zones. Because asynchronous replication is not in real time, there's typically less data to transfer.
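One way the transfer savings can arise is sketched below, under the assumption that a scheduled replication job ships only the latest version of each changed block. Whether a given service behaves this way depends on the provider. The function names and block identifiers are hypothetical.

```python
def transfers_per_write(writes):
    """Real-time replication: every write is shipped as it happens."""
    return len(writes)


def transfers_batched(writes):
    """Scheduled replication (assumed): ship only the final version of each block."""
    latest = {}
    for block_id, data in writes:
        latest[block_id] = data  # later writes overwrite earlier ones
    return len(latest)


# block-1 is rewritten three times between replication runs.
writes = [("block-1", "a"), ("block-1", "b"), ("block-2", "c"), ("block-1", "d")]
print(transfers_per_write(writes), transfers_batched(writes))
```

In this example the batched job ships two blocks instead of four, because intermediate versions of `block-1` never leave the primary site.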

Understanding Load Balancers

Loose coupling (also called decoupling) is a design principle in which application components are broken up in such a way that they can run on different servers. With this approach, redundant application components can be deployed to achieve high availability and scalability.

Let's take a look at a familiar example. Most database-backed web applications decouple the web component from the database so that they can run on separate servers. This makes it possible to run redundant web servers for scaling and high availability.

But loose coupling introduces a new challenge: If there are multiple web servers that users can access, how do you distribute traffic among them? And what if one of the servers fails? The answer is load balancing. A load balancer accepts connections from users and distributes those connections to web servers, typically in a round-robin fashion. When a load balancer sits in front of web servers, users connect to an IP address of the load balancer instead of an IP address of one of the web servers.
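Round-robin distribution can be sketched as follows. This is a simplified model, not a real load balancer: the `RoundRobinBalancer` class and the server names are hypothetical, and the `mark_down` method stands in for whatever health-check mechanism detects a failed server.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy load balancer that hands out servers in rotation."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._rotation = cycle(self.servers)

    def mark_down(self, server):
        """Stop sending connections to a failed server."""
        self.healthy.discard(server)

    def mark_up(self, server):
        """Resume sending connections to a recovered server."""
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        """Return the next healthy server in the rotation."""
        if not self.healthy:
            raise RuntimeError("no healthy servers available")
        # Skip failed servers; one full pass is enough to find a healthy one.
        for _ in range(len(self.servers)):
            server = next(self._rotation)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
print([balancer.next_server() for _ in range(3)])
balancer.mark_down("web-2")
print([balancer.next_server() for _ in range(4)])
```

After `web-2` is marked down, connections alternate between the two remaining servers, so users are not sent to the failed one.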
