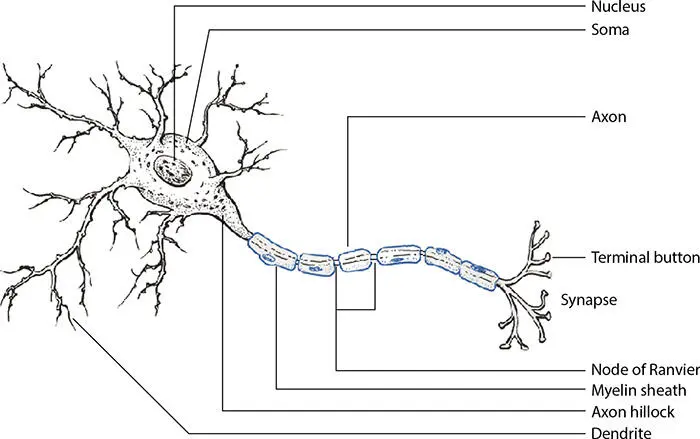

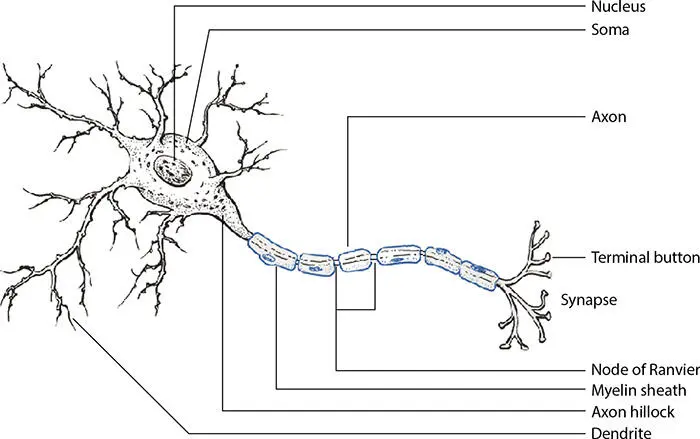

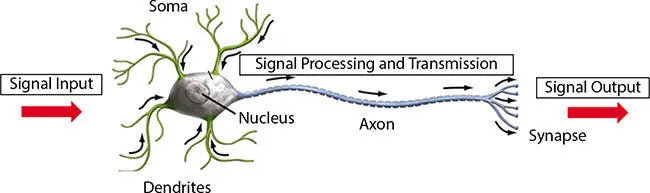

Figure 2.1 Schematic diagram of biological neuron.

The general structural parts of the neuron and their functions are briefly discussed below:

• Dendrites: These are the branched extensions at the beginning (input end) of the neuron. Dendrites are covered with synapses.
■ Function:
i. they increase the surface area of the cell body;
ii. their synapses receive information in the form of electrochemical signals from other neurons and transmit it to the cell body (soma).

• Soma: It is the spherical cell body of the neuron, which contains the nucleus. It connects the dendrites to the axon of the neuron. It does not take an active role in information transmission.
■ Function:
i. it produces all the proteins required for the axon, dendrites, and synaptic terminals;
ii. it contains the cell organelles, viz., Golgi apparatus, mitochondria, endoplasmic reticulum, secretory granules, polysomes, and ribosomes;
iii. it generates neurotransmitters and keeps the neuron active.

• Axon hillock: This specialized part of the cell body connects the soma to the axon and is the site where incoming electrochemical signals are summed.
■ Function:
i. The neuron has a particular threshold for the incoming electrochemical signal. If the summed signal exceeds this threshold, the axon hillock fires a signal, termed the action potential, down the axon.

• Axon: Also termed the nerve fiber, the axon is the elongated projection from the cell body to the terminal endings. The speed of transmission of the electrochemical signal, i.e., the information, increases with the axon diameter and with myelination; a longer axon only increases the time the signal takes to reach the terminals.
■ Function:
i. it conducts the action potential generated at the axon hillock away from the cell body toward the terminal buttons.

• Myelin Sheath: Some axons are covered with a lipid-rich, i.e., fatty, insulating layer called myelin. The sheath is interrupted by small gaps at regular intervals along the axon.
■ Function:
i. it protects the axon;
ii. it electrically insulates the neuron, i.e., it prevents the electrical impulses from leaking through the membrane it covers;
iii. it prevents depolarization of the membrane it covers;
iv. as the electrical impulses cannot pass through the sheath, they jump from one gap between the sheaths to the next (saltatory conduction); thus, the myelin sheath speeds up the transmission of the signal along the neuron.

• Nodes of Ranvier: The uninsulated, ion-rich gaps between the myelin sheaths, which are approximately 1 μm wide, are called the nodes of Ranvier.
■ Function:
i. they mediate the exchange of certain ions, such as sodium and potassium, across the axon membrane;
ii. they help in the rapid transmission of the action potential along the axon.

• Terminal Buttons: The small knob-like structures located at the end of the axon are termed terminal buttons. They contain vesicles filled with neurotransmitters.
■ Function:
i. They convert the electrical impulses into chemical signals. When the electrical impulses reach these buttons, neurotransmitter is secreted, which carries the signal to other neurons.

• Synapse: The gap between two neurons, or between a neuron and a gland or a muscle, is called a synapse.
■ Function:
i. It transmits the electrochemical signal from one cell to another.

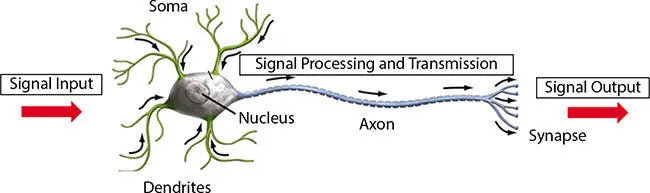

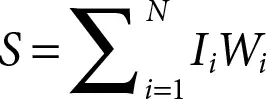

The propagation of a signal through neurons, and its resemblance to the operation of artificial neurons, is shown in Figure 2.2.

Figure 2.2 Propagation of signal through neurons.

An artificial neural network (ANN), a domain of artificial intelligence, mimics the biological neural networks of the nervous system discussed above. The connections between neurons in an ANN are modeled computationally and mathematically in broadly the same way as the connections between biological neurons.

In the following section, we highlight the basic network topology, the different types of ANN models, and their learning rules.

2.3 Artificial Neural Networks

An ANN can be defined as a mathematical and computational tool for nonlinear statistical data modeling, inspired by the structure and function of the biological nervous system. An ANN is built from a large number of densely interconnected processing units, termed neurons.

Generally, an ANN receives a set of inputs, computes their weighted sum, and passes the result to a nonlinear function that generates the output. Like human beings, ANNs learn by example, so ANN models must be trained appropriately to generate the output efficiently. In the biological nervous system, learning involves adaptations in the synaptic connections between neurons. This idea inspires the learning procedure of ANNs, in which the system parameters can be adjusted according to input/output (I/O) patterns. Through this learning process, ANNs can be applied to data classification, pattern recognition, and similar tasks.
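As a minimal sketch of the weighted-sum-then-nonlinearity step described above, the Python below computes the output of a single artificial neuron. The logistic sigmoid is an assumed choice of nonlinear function, and the function name `neuron_output` and the example weights are hypothetical; the text does not fix either.

```python
import math
from typing import Sequence


def neuron_output(inputs: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of the inputs followed by a nonlinear activation.

    The logistic sigmoid is used here only as an illustrative nonlinearity.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    s = sum(x * w for x, w in zip(inputs, weights))  # weighted sum of the inputs
    return 1.0 / (1.0 + math.exp(-s))                # sigmoid squashes s into (0, 1)


# Example: three inputs with hand-picked (hypothetical) weights.
print(neuron_output([1.0, 0.5, -1.0], [0.4, -0.2, 0.1]))
```

Swapping the sigmoid for another nonlinearity changes only the last line of the function; training, discussed below, is what adjusts the weights.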

Researchers have been working on ANNs for several decades; the field was established even before the advent of modern digital computers. The artificial neuron [1] was first introduced in 1943 by the neurophysiologist Warren McCulloch and the logician Walter Pitts.

2.3.1 McCulloch-Pitts Neural Model

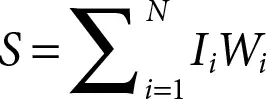

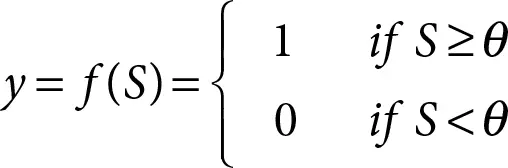

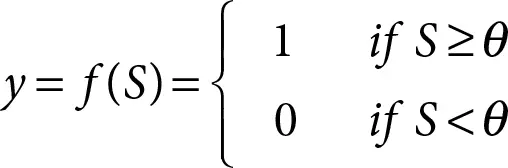

The model proposed by McCulloch and Pitts is known as the linear threshold gate [1]. The artificial neuron takes a set of binary inputs $I_1, I_2, I_3, \ldots, I_N \in \{0, 1\}$ and produces a single binary output $y \in \{0, 1\}$. The inputs are of two types: dependent inputs, termed excitatory inputs, and independent inputs, termed inhibitory inputs. Mathematically, the function can be expressed by the following equations:

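$$S = \sum_{i=1}^{N} W_i I_i \tag{2.1}$$

$$y = f(S) = \begin{cases} 1, & S \ge \theta \\ 0, & S < \theta \end{cases} \tag{2.2}$$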

where

$W_1, W_2, W_3, \ldots, W_N$ ≡ weight values associated with the corresponding inputs, normalized to the range (0, 1) or (−1, 1);

$S$ ≡ weighted sum;

$\theta$ ≡ threshold constant.

The function f, shown in Figure 2.3, is called the linear step function.

The schematic diagram of the linear threshold gate is given in Figure 2.4.
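The threshold gate of Equations (2.1) and (2.2) can be sketched in a few lines of Python. This sketch assumes the gate outputs 1 when the weighted sum S reaches or exceeds θ and 0 otherwise; inhibitory inputs are not treated specially, and the name `mcp_neuron` and the example weights are illustrative only.

```python
from typing import Sequence


def mcp_neuron(inputs: Sequence[int], weights: Sequence[float], theta: float) -> int:
    """McCulloch-Pitts linear threshold gate (sketch of Equations 2.1 and 2.2)."""
    if any(i not in (0, 1) for i in inputs):
        raise ValueError("McCulloch-Pitts inputs must be binary (0 or 1)")
    s = sum(w * i for w, i in zip(weights, inputs))  # Eq. (2.1): weighted sum S
    return 1 if s >= theta else 0                     # Eq. (2.2): step function f(S)


# Hypothetical parameters: with both weights 0.5 and theta = 1.0,
# the gate fires only when both inputs are 1, i.e., it computes logical AND.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, mcp_neuron([a, b], [0.5, 0.5], theta=1.0))
```

The weights and θ are chosen by hand here, which is exactly the fixed-parameter limitation of this model.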

This early two-state model of ANN is simple but computationally powerful: networks of such threshold units can implement any Boolean logic function. Its disadvantage is a lack of flexibility, because the weights and threshold values are fixed rather than learned. The McCulloch-Pitts neuron model was later improved by incorporating more flexible features to extend its application domain.
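To illustrate why such a simple two-state unit is still computationally powerful, the hypothetical gate functions below fix the weights and threshold by hand to obtain AND, OR, and NOT, and then compose them into XOR, which no single threshold gate can compute. All parameter values and function names are assumptions chosen for illustration; they also show the inflexibility noted above, since nothing here is learned.

```python
from typing import Sequence


def mcp_neuron(inputs: Sequence[int], weights: Sequence[float], theta: float) -> int:
    """Threshold gate: output 1 if the weighted sum reaches theta, else 0."""
    s = sum(w * i for w, i in zip(weights, inputs))
    return 1 if s >= theta else 0


# Hand-set (hypothetical) weights in (-1, 1) and thresholds for basic gates.
def and_gate(a: int, b: int) -> int:
    return mcp_neuron([a, b], [0.5, 0.5], theta=1.0)


def or_gate(a: int, b: int) -> int:
    return mcp_neuron([a, b], [0.5, 0.5], theta=0.5)


def not_gate(a: int) -> int:
    # Negative weight with theta = 0: input 0 gives S = 0 (fires), input 1 gives S = -0.5 (silent).
    return mcp_neuron([a], [-0.5], theta=0.0)


def xor_gate(a: int, b: int) -> int:
    # XOR is not linearly separable, so one gate cannot compute it,
    # but a two-layer network of gates can: (a OR b) AND NOT (a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(f"{a} XOR {b} = {xor_gate(a, b)}")
```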

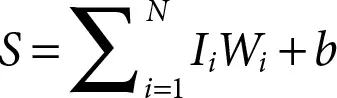

The McCulloch-Pitts neuron model was enhanced by Frank Rosenblatt in 1957, who proposed the concept of the perceptron [2] to solve linear classification problems. The perceptron is an algorithm for the supervised learning of binary classifiers. This binary, single-neuron model merges the concept of the McCulloch-Pitts model [1] with the Hebbian learning rule for adjusting weights [3]. In the perceptron, an extra constant, termed the bias, is added. The bias is independent of any input value and allows the decision boundary to be shifted away from the origin. To define the perceptron, Equation (2.1) is modified as follows:

$$S = \sum_{i=1}^{N} W_i I_i + b \tag{2.3}$$

where $b$ ≡ bias.
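A minimal sketch of a perceptron with a bias term, trained on the linearly separable AND function. Two assumptions are made: the threshold is folded into the bias, so the unit fires when the biased weighted sum is at least 0; and the weights are updated with the standard perceptron error-correction rule, w ← w + η(t − y)x and b ← b + η(t − y), rather than a pure Hebbian rule. The function names and the learning rate η = 1 are illustrative.

```python
from typing import List, Sequence, Tuple


def predict(x: Sequence[float], w: Sequence[float], b: float) -> int:
    """Step function applied to the weighted sum plus bias (threshold folded into b)."""
    s = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if s >= 0 else 0


def train_perceptron(
    data: List[Tuple[Sequence[float], int]],
    lr: float = 1.0,
    max_epochs: int = 100,
) -> Tuple[List[float], float]:
    """Perceptron error-correction rule (assumed): nudge w and b toward each misclassified target."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in data:
            err = target - predict(x, w, b)  # -1, 0, or +1
            if err != 0:
                errors += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if errors == 0:  # a full pass with no mistakes: training has converged
            break
    return w, b


# Truth table of logical AND as (inputs, target) pairs.
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_data)
for x, target in and_data:
    print(x, "->", predict(x, w, b), "(target", target, ")")
```

Because AND is linearly separable, the loop stops once an epoch produces no errors. With zero initial weights, the learning rate only rescales w and b (in exact arithmetic) and does not change which inputs are classified as 1; the learned negative bias is what shifts the boundary away from the origin.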
