Figure 6.5: Wait-and-signal synchronization between two tasks.

In this situation, the binary semaphore is initially unavailable (value of 0). tWaitTask has higher priority and runs first. The task makes a request to acquire the semaphore but is blocked because the semaphore is unavailable. This step gives the lower priority tSignalTask a chance to run; at some point, tSignalTask releases the binary semaphore and unblocks tWaitTask. The pseudo code for this scenario is shown in Listing 6.1.

Listing 6.1: Pseudo code for wait-and-signal synchronization

tWaitTask () {

:

Acquire binary semaphore token

:

}

tSignalTask () {

:

Release binary semaphore token

:

}

Because tWaitTask's priority is higher than tSignalTask's priority, as soon as the semaphore is released, tWaitTask preempts tSignalTask and starts to execute.
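The scenario in Listing 6.1 can be approximated on a desktop with Python's `threading.Semaphore`. This is only a minimal sketch: Python threads have no task priorities, so a `threading.Event` (an assumption of this example, not part of the original design) stands in for "tWaitTask runs first". The function and variable names are illustrative.

```python
import threading


def run_wait_and_signal():
    """Return the order of steps in a two-task wait-and-signal exchange."""
    events = []
    sem = threading.Semaphore(0)        # binary semaphore, initially unavailable
    wait_task_ran = threading.Event()   # stand-in for tWaitTask's higher priority

    def t_wait_task():
        events.append("tWaitTask: acquire")
        wait_task_ran.set()
        sem.acquire()                   # blocks until tSignalTask releases
        events.append("tWaitTask: running again")

    def t_signal_task():
        wait_task_ran.wait()            # run only after tWaitTask has had its turn
        events.append("tSignalTask: release")
        sem.release()                   # unblocks tWaitTask

    tasks = [threading.Thread(target=t_wait_task),
             threading.Thread(target=t_signal_task)]
    for task in tasks:
        task.start()
    for task in tasks:
        task.join()
    return events


if __name__ == "__main__":
    for step in run_wait_and_signal():
        print(step)
```

On a real RTOS the scheduler, not an explicit event, decides that tWaitTask runs first and that it preempts tSignalTask immediately after the release.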

6.4.2 Multiple-Task Wait-and-Signal Synchronization

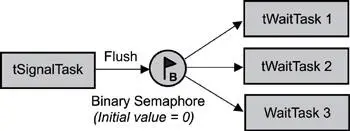

When coordinating the synchronization of more than two tasks, use the flush operation on the task-waiting list of a binary semaphore, as shown in Figure 6.6.

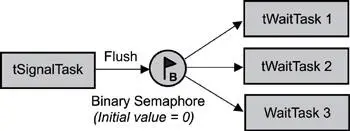

Figure 6.6: Wait-and-signal synchronization between multiple tasks.

As in the previous case, the binary semaphore is initially unavailable (value of 0). The higher priority tWaitTasks 1, 2, and 3 all do some processing; when they are done, they try to acquire the unavailable semaphore and, as a result, block. This action gives tSignalTask a chance to complete its processing and execute a flush command on the semaphore, effectively unblocking the three tWaitTasks, as shown in Listing 6.2. Note that similar code is used for tWaitTask 1, 2, and 3.

Listing 6.2: Pseudo code for wait-and-signal synchronization.

tWaitTask () {

:

Do some processing specific to task

Acquire binary semaphore token

:

}

tSignalTask () {

:

Do some processing

Flush binary semaphore's task-waiting list

:

}

Because the tWaitTasks' priorities are higher than tSignalTask's priority, as soon as tSignalTask flushes the semaphore, the tWaitTasks become ready and one of them preempts tSignalTask and starts to execute.

Note that in the wait-and-signal synchronization shown in Figure 6.6 the value of the binary semaphore after the flush operation is implementation dependent. Therefore, the return value of the acquire operation must be properly checked to see if either a return-from-flush or an error condition has occurred.
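Python's standard library has no flush operation, so the following sketch builds a small, hypothetical `FlushableBinarySemaphore` on top of `threading.Condition` to illustrate the idea. The class, its `OK`/`FLUSHED` return codes, and the `wait_until_blocked` helper are all assumptions made for this example, not any real kernel's API. In this sketch the semaphore stays unavailable after a flush, which is one of several possible implementation choices.

```python
import threading

OK, FLUSHED = "ok", "flushed"   # hypothetical return codes for acquire()


class FlushableBinarySemaphore:
    """Illustrative binary semaphore with a flush operation."""

    def __init__(self):
        self._cond = threading.Condition()
        self._available = False   # starts unavailable (value 0)
        self._flushes = 0         # incremented by every flush()
        self._waiting = 0         # tasks currently inside acquire()

    def acquire(self):
        with self._cond:
            seen = self._flushes
            self._waiting += 1
            self._cond.notify_all()           # lets wait_until_blocked() re-check
            while not self._available and self._flushes == seen:
                self._cond.wait()
            self._waiting -= 1
            if self._flushes != seen:
                return FLUSHED                # woken by flush, no token taken
            self._available = False           # woken by release, token taken
            return OK

    def release(self):
        with self._cond:
            self._available = True
            self._cond.notify_all()

    def flush(self):
        with self._cond:
            self._flushes += 1                # unblock every waiting task
            self._cond.notify_all()

    def wait_until_blocked(self, count):
        # Test helper: stands in for the lower-priority task running
        # only after the higher-priority tasks have blocked.
        with self._cond:
            self._cond.wait_for(lambda: self._waiting >= count)


def run_flush_demo(n_waiters=3):
    sem = FlushableBinarySemaphore()
    results = [None] * n_waiters

    def t_wait_task(index):
        # Task-specific processing would go here.
        results[index] = sem.acquire()        # always check the return value

    tasks = [threading.Thread(target=t_wait_task, args=(i,))
             for i in range(n_waiters)]
    for task in tasks:
        task.start()
    sem.wait_until_blocked(n_waiters)         # tSignalTask's turn
    sem.flush()
    for task in tasks:
        task.join()
    return results


if __name__ == "__main__":
    print(run_flush_demo())   # ['flushed', 'flushed', 'flushed']
```

Each waiting task inspects the value returned by `acquire()` to tell a return-from-flush apart from a normal acquisition, which mirrors the check recommended above.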

6.4.3 Credit-Tracking Synchronization

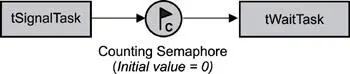

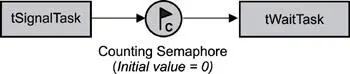

Sometimes the rate at which the signaling task executes is higher than that of the signaled task. In this case, a mechanism is needed to count each signaling occurrence. The counting semaphore provides just this facility. With a counting semaphore, the signaling task can continue to execute and increment a count at its own pace, while the wait task, when unblocked, executes at its own pace, as shown in Figure 6.7.

Figure 6.7: Credit-tracking synchronization between two tasks.

Again, the counting semaphore's count is initially 0, making it unavailable. The lower priority tWaitTask tries to acquire this semaphore but blocks until tSignalTask makes the semaphore available by performing a release on it. Even then, tWaitTask waits in the ready state until the higher priority tSignalTask eventually relinquishes the CPU by making a blocking call or delaying itself, as shown in Listing 6.3.

Listing 6.3: Pseudo code for credit-tracking synchronization.

tWaitTask () {

:

Acquire counting semaphore token

:

}

tSignalTask () {

:

Release counting semaphore token

:

}

Because tSignalTask is set to a higher priority and executes at its own rate, it might increment the counting semaphore multiple times before tWaitTask starts processing the first request. Hence, the counting semaphore allows a credit buildup of the number of times that the tWaitTask can execute before the semaphore becomes unavailable.

When tSignalTask's rate of releasing the semaphore tokens slows, tWaitTask can catch up and eventually deplete the count until the counting semaphore is empty. At this point, tWaitTask blocks again at the counting semaphore, waiting for tSignalTask to release the semaphore again.

Note that this credit-tracking mechanism is useful if tSignalTask releases semaphores in bursts, giving tWaitTask the chance to catch up every once in a while.
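The credit buildup can be sketched with Python's `threading.Semaphore`, which behaves as a counting semaphore. Because Python threads have no priorities, this example assumes the higher-priority tSignalTask finishes its whole burst before tWaitTask gets the CPU and models that by joining the signaling thread first. The function name and the burst size are illustrative.

```python
import threading


def run_credit_tracking(burst=5):
    """Return (work items handled, whether the count ended at zero)."""
    credits = threading.Semaphore(0)    # counting semaphore, initially 0
    handled = []

    def t_signal_task():
        for _ in range(burst):
            credits.release()           # each release adds one credit

    def t_wait_task():
        for item in range(burst):
            credits.acquire()           # consumes one credit; blocks at zero
            handled.append(item)

    signaler = threading.Thread(target=t_signal_task)
    signaler.start()
    signaler.join()                     # burst completes before the waiter runs

    waiter = threading.Thread(target=t_wait_task)
    waiter.start()
    waiter.join()

    # A non-blocking acquire fails once every credit has been consumed.
    depleted = not credits.acquire(blocking=False)
    return handled, depleted


if __name__ == "__main__":
    print(run_credit_tracking())   # ([0, 1, 2, 3, 4], True)
```

The waiter handles exactly as many items as there were releases, and a further acquire would block until the next burst arrives.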

Using this mechanism with an ISR that acts in a similar way to the signaling task can be quite useful. Interrupts have higher priorities than tasks. Hence, an interrupt's associated higher priority ISR executes when the hardware interrupt is triggered and typically offloads some work to a lower priority task waiting on a semaphore.

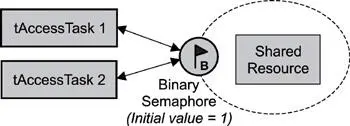

6.4.4 Single Shared-Resource-Access Synchronization

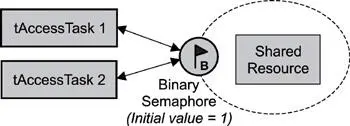

One of the more common uses of semaphores is to provide for mutually exclusive access to a shared resource. A shared resource might be a memory location, a data structure, or an I/O device; in essence, it is anything that might have to be shared between two or more concurrent threads of execution. A semaphore can be used to serialize access to a shared resource, as shown in Figure 6.8.

Figure 6.8: Single shared-resource-access synchronization.

In this scenario, a binary semaphore is initially created in the available state (value = 1) and is used to protect the shared resource. To access the shared resource, tAccessTask 1 or 2 must first successfully acquire the binary semaphore before reading from or writing to the shared resource. The pseudo code for both tAccessTask 1 and 2 is similar to Listing 6.4.

Listing 6.4: Pseudo code for tasks accessing a shared resource.

tAccessTask () {

:

Acquire binary semaphore token

Read or write to shared resource

Release binary semaphore token

:

}

This code serializes the access to the shared resource. If tAccessTask 1 executes first, it makes a request to acquire the semaphore and is successful because the semaphore is available. Having acquired the semaphore, this task is granted access to the shared resource and can read and write to it.

Meanwhile, the higher priority tAccessTask 2 wakes up and runs due to a timeout or some external event. It tries to acquire the same semaphore but is blocked because tAccessTask 1 currently holds it. After tAccessTask 1 releases the semaphore, tAccessTask 2 is unblocked and starts to execute.
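The serialization described here can be sketched in Python with a `threading.Semaphore` created with a value of 1. The shared counter, the iteration count, and the function names are assumptions chosen for illustration; the acquire and release calls follow the same order as the pseudo code in Listing 6.4.

```python
import threading


def run_shared_access(n_tasks=2, iterations=1000):
    """Return the final value of a counter updated by several tasks."""
    guard = threading.Semaphore(1)      # binary semaphore, initially available
    shared = {"counter": 0}             # the shared resource

    def t_access_task():
        for _ in range(iterations):
            guard.acquire()             # wait for exclusive access
            try:
                shared["counter"] += 1  # read and write the shared resource
            finally:
                guard.release()         # always give the token back

    tasks = [threading.Thread(target=t_access_task) for _ in range(n_tasks)]
    for task in tasks:
        task.start()
    for task in tasks:
        task.join()
    return shared["counter"]


if __name__ == "__main__":
    print(run_shared_access())   # 2000
```

Because every update happens between an acquire and a release, no increment is lost and the final count equals the number of tasks times the number of iterations.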

One of the dangers of this design is that any task can accidentally release the binary semaphore, even one that never acquired the semaphore in the first place. If this happened in this scenario, both tAccessTask 1 and tAccessTask 2 could end up acquiring the semaphore and reading and writing to the shared resource at the same time, which would lead to incorrect program behavior.
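A short, single-threaded sketch makes this hazard concrete. It uses Python's `threading.Semaphore`, which, like the binary semaphore described here, does not record which task acquired it. The function name and the sequence of calls are illustrative assumptions.

```python
import threading


def stray_release_demo():
    """Show that a stray release lets two tasks hold the semaphore at once."""
    guard = threading.Semaphore(1)                  # available (value = 1)

    # tAccessTask 1 acquires the semaphore and starts using the resource.
    task1_holds = guard.acquire(blocking=False)

    # Some other task, which never acquired the semaphore, releases it.
    guard.release()

    # tAccessTask 2 now acquires it too, although task 1 has not released.
    task2_holds = guard.acquire(blocking=False)

    return task1_holds, task2_holds


if __name__ == "__main__":
    print(stray_release_demo())   # (True, True)
```

Both acquisitions succeed, so both tasks believe they have exclusive access to the shared resource at the same time.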
