Parallel computers with multiple processors have emerged to meet the energy-efficient performance demands of current and future computing applications. These multiprocessor systems need interconnection networks to connect processors and memory modules. Advances in parallel and distributed computing have made interconnection networks a potential networking alternative for meeting the growing demands of high-performance computing applications. These networks need to operate continuously without repair for long periods of time. Because they are complex and large, they must be reliable and fault-tolerant: the failure of individual components should not lead to complete network failure, which may be catastrophic.

The main objective of this book is to devise approaches for reliability modeling and evaluation of such complex networks. Such evaluation helps to identify which network designs offer better reliability. New designs of fault-tolerant interconnection network layouts are proposed, which provide high reliability through path redundancy and fault tolerance through a reduction of the elements that paths have in common. This book covers the reliability evaluation of various network topologies considering multiple reliability performance parameters (two-terminal reliability, broadcast reliability, all-terminal reliability, and multiple-sources-to-multiple-destinations reliability).

Work on network reliability evaluation has been going on for decades. Our S.C. School of Quality and Reliability, earlier known as the Reliability Engineering Centre, has contributed significantly to this area of research since the 1980s. The school has been involved in developing models for reliability evaluation of complex networks, both static and ad hoc. The authors of this book have made significant contributions in this area.

Interconnection networks pose a further challenge to reliability evaluation due to their large and complex architectures. There are very few works in this area, and the subject has rarely been addressed in easy-to-follow book form. This book brings together aspects of the latest research conducted at the S.C. School of Quality and Reliability to make it accessible to a wider audience. This is the first edition of the book, so many improvements are possible. The authors welcome feedback that could improve the contents in a possible next edition.

The first two chapters introduce the basic concepts of network reliability and the popular interconnection network architectures. Chapters 3, 4, and 5 then discuss approaches used for reliability evaluation of such networks. Chapter 6 presents novel architectures that provide better reliability and fault tolerance while remaining cost-effective.

The authors would like to thank everyone (faculty, students, and staff) from the S.C. School of Quality and Reliability, IIT Kharagpur, India, for their encouragement, suggestions, and support while carrying out this work. We would also like to thank Prof. K.B. Mishra and Martin Scrivener for their guidance and support in writing this book.

Dr. Neeraj Kumar Goyal and Dr. S. Rajkumar

August 2020

1

Introduction

1.1 Introduction

In recent years, human beings have become largely dependent on communication networks, such as computer communication networks, telecommunication networks, and mobile switching networks, for their day-to-day activities [1]. In today’s world, humans and critical machines depend on these communication networks to work properly. Failure of these networks can leave people isolated, helpless, and exposed to hazards. It is a fact that every component or system can fail, and that its failure probability increases with size and complexity.

Therefore, it is essential to compute and assure the reliability of these networks, which are growing in size and complexity. Reliability modeling and computation are necessary for reliability and safety assurance of these networks [2]. They also help to identify weak links, which can then be improved cost-effectively using reliability design techniques. Recent developments in the communication hardware industry have resulted in increasingly reliable, non-repairable network components (they must be replaced when they fail). However, new designs involve new components, which tend to be less reliable. A good network design [3] involving fault tolerance and redundancy can deliver better system reliability at lower cost. This allows new designs to be released faster and to work reliably even when their components are not yet mature from a reliability point of view.

The computation of reliability measures [4] for a large and complex communication network, up to the desired level of accuracy, is time-consuming, complex, and costly. It has not been realistic to model and compute the reliability of real-life communication networks, which are quite large, on a desktop computer because of the long execution time and high memory requirements. Such computations are usually performed on high-end processors, and for critical systems only. Reliability professionals and researchers have carried out a great deal of research and developed techniques to reduce this effort and to provide a practical tool for communication network designers [4–6].

This book presents novel and efficient tools, techniques and approaches for reliability evaluation, reliability analysis, and design of reliable communication networks using graph theoretic concepts.

1.2 Network Reliability Measures

Earlier attempts to measure network reliability belong to two distinct classes: deterministic and probabilistic [1, 2]. Deterministic measures assume that the network is subjected to a destructive force that has complete knowledge of the network topology. Reliability is then measured in terms of the least amount of damage required to make the network inoperative.

Deterministic measures thus provide simple bounds on the reliability of the network, since they are often measured for the network’s worst-case environment. For example, in the terminal pair reliability problem, two deterministic measures of reliability are:

1. The minimum number of edges that must be destroyed or removed to disrupt the communication between the specified nodes (s and t), which is simply the number of edges in a minimum cardinality cutset, and

2. The minimum number of nodes that must be destroyed or removed to disrupt the communication between the specified nodes (s and t).
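As an illustration only, the two deterministic measures above can be sketched in Python using the standard max-flow/min-cut relationship (Menger's theorem). This is not the authors' procedure. The function names, the `EDGES` example graph, and the choice of an Edmonds-Karp max-flow routine are assumptions made for this sketch, and the node-cut version assumes s and t are not directly connected.

```python
from collections import defaultdict, deque


def _add_arc(cap, u, v, c):
    cap[u][v] += c
    cap[v][u] += 0  # make sure the residual (reverse) arc exists


def _max_flow(cap, source, sink):
    """Edmonds-Karp: repeatedly augment along a shortest residual path."""
    flow = 0
    while True:
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow  # no augmenting path left
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        push = min(cap[u][v] for u, v in path)
        for u, v in path:
            cap[u][v] -= push
            cap[v][u] += push
        flow += push


def min_edge_cut_size(edges, s, t):
    """Measure 1: fewest edges whose removal disconnects s from t."""
    cap = defaultdict(lambda: defaultdict(int))
    for u, v in edges:  # undirected edge = unit capacity both ways
        _add_arc(cap, u, v, 1)
        _add_arc(cap, v, u, 1)
    return _max_flow(cap, s, t)


def min_node_cut_size(edges, s, t):
    """Measure 2: fewest nodes (other than s, t) whose removal disconnects s from t."""
    if any({u, v} == {s, t} for u, v in edges):
        raise ValueError("s and t are adjacent; no node cut separates them")
    nodes = {n for e in edges for n in e}
    big = len(nodes)  # larger than any possible node cut
    cap = defaultdict(lambda: defaultdict(int))
    for n in nodes - {s, t}:  # split each inner node into in -> out, capacity 1
        _add_arc(cap, (n, "in"), (n, "out"), 1)
    for u, v in edges:
        _add_arc(cap, (u, "out"), (v, "in"), big)
        _add_arc(cap, (v, "out"), (u, "in"), big)
    return _max_flow(cap, (s, "out"), (t, "in"))


# Hypothetical example graph (not one of the book's figures): two routes that
# both pass through node c.
EDGES = [("s", "a"), ("s", "b"), ("a", "c"), ("b", "c"),
         ("c", "d"), ("c", "e"), ("d", "t"), ("e", "t")]

if __name__ == "__main__":
    print(min_edge_cut_size(EDGES, "s", "t"), min_node_cut_size(EDGES, "s", "t"))
```

In this hypothetical graph two edges must be removed to separate s from t, but removing the single node c is enough, so the two measures need not agree with each other.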

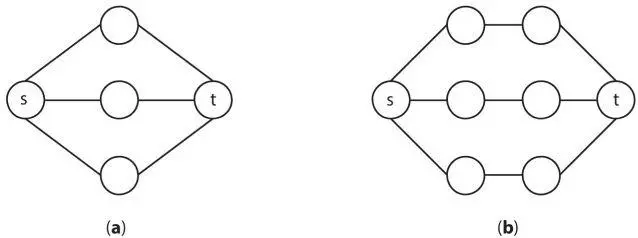

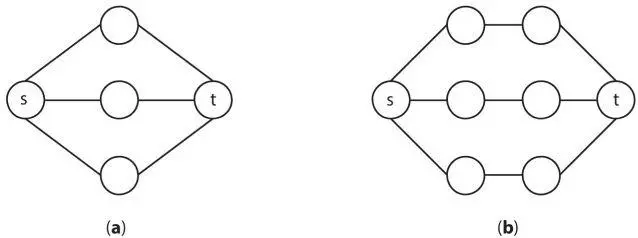

Both of these measures are computable in polynomial time. However, one of the main problems with deterministic measures is that they give rise to some counterintuitive notions of network reliability. For example, consider the networks shown in Figure 1.1. According to the second deterministic measure of reliability, the graphs of Figure 1.1 (a) and Figure 1.1 (b) are equally reliable, since both require a minimum of three nodes to be destroyed to break the s-t node connectivity.

Figure 1.1 Example networks for deterministic reliability measurement.

Intuitively, however, one can easily see that graph (a) is the more reliable of the two. The same problem arises when the cardinality of a minimum (s, t)-cutset is used as a measure of unreliability. Consider the graphs shown in Figure 1.2. Both graphs (a) and (b) have a minimum-cardinality (s, t)-cut of size one, which would imply that the two networks are equally reliable.

This clearly shows that deterministic measures of reliability are insufficient to relate the components used in a network layout to the reliability of the network. Moreover, the failure of network components is probabilistic in nature, so only probabilistic measures can define system reliability appropriately.
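To make the contrast concrete, here is a minimal sketch of a probabilistic measure: two-terminal reliability computed by exhaustively enumerating edge states. It assumes perfectly reliable nodes, independent edge failures, and a common edge reliability `p`. The networks `NET_A` and `NET_B` are hypothetical stand-ins, not the graphs of Figure 1.2, and the function names are chosen for this sketch. The enumeration takes time exponential in the number of edges, so it is practical only for very small graphs.

```python
from itertools import product


def connected(edges_up, s, t):
    """Return True if t is reachable from s using only working edges."""
    adj = {}
    for u, v in edges_up:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    seen, stack = {s}, [s]
    while stack:
        u = stack.pop()
        if u == t:
            return True
        for v in adj.get(u, []):
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return False


def two_terminal_reliability(edges, p, s, t):
    """Sum the probabilities of all edge states in which s and t are connected."""
    total = 0.0
    for states in product([True, False], repeat=len(edges)):
        up = [e for e, ok in zip(edges, states) if ok]
        if connected(up, s, t):
            k = len(up)
            total += p ** k * (1 - p) ** (len(edges) - k)
    return total


# Both hypothetical networks have a minimum (s, t)-cut of size one.
NET_A = [("s", "a"), ("a", "b"), ("a", "c"), ("b", "t"), ("c", "t")]  # bridge, then two routes
NET_B = [("s", "a"), ("a", "b"), ("b", "t")]                          # simple chain

if __name__ == "__main__":
    for name, net in (("A", NET_A), ("B", NET_B)):
        print(name, two_terminal_reliability(net, 0.9, "s", "t"))
```

With p = 0.9, network A evaluates to p(1 - (1 - p²)²) ≈ 0.8675 and network B to p³ = 0.729, even though the minimum-cut measure treats them as equally reliable.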
