The gray rectangle in Figure 5.6 shows the runtime aspects of a cloud service: the service is monitored, and policies, rule sets, and configuration parameters are adapted to keep the service within the defined agreement. With DSA as a cloud service, updates to policies, rule sets, and configuration parameters can be triggered by the DSA cognitive engine resource monitor, as shown in Figure 5.2, or by the decision maker, as shown in Figure 5.3. These actions force the service to adhere to the service agreement during runtime. With DSA as a service, the design can also create log files that are analyzed in post‐processing to evaluate the DSA service accountability over a long period of time.

With DSA as a set of cloud services, service agreements can be derived from system requirements, but the design of DSA cloud services has to create metrics that help force the services to conform to the agreement and that measure service accountability. The cognitive engine‐based design has to understand the properties of the metrics used to force the service to adhere to the service agreement, and scripted scenarios must be used to assess service accountability before system deployment.

5.3.2 DSA Cloud Services Metrology

Metrology is the science of measurement. With cloud services, we need to create measurements to:

1 quantify or measure properties of the DSA cloud services

2 obtain a common understanding of these properties.

A DSA cloud services metric provides knowledge about a DSA service property. Collected measurements of this metric help the DSA cognitive engine estimate that property during runtime. Post‐processing analysis can provide further knowledge of the property.

DSA cloud services metrics should not be viewed as measurements of software properties. DSA cloud services metrology measures physical aspects of the services, not functional aspects. The designer of DSA as a set of cloud services should be able to provide measurable metrics such that a service agreement can be created and then evaluated both during runtime and in post‐processing. Because the model used here is hierarchical, a metric used at different layers of the hierarchy is evaluated differently at each layer. For example, as explained in Chapter 1, the response time of DSA as a local service should be shorter than that of DSA as a distributed cooperative service, which in turn should be shorter than that of DSA as a centralized service.
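The layer-dependent evaluation described above can be sketched in a few lines. This is only an illustration: the limit values, the layer keys, and the function names are assumptions made for the example and do not come from the text.

```python
# Hypothetical response time limits per hierarchy layer, in milliseconds.
# The numbers are placeholders chosen only to respect the ordering
# local < distributed cooperative < centralized.
LIMITS_MS = {
    "local": 50.0,
    "distributed": 200.0,
    "centralized": 1000.0,
}


def limits_are_ordered(limits):
    """Check that the agreed limits grow as we move up the hierarchy."""
    return limits["local"] < limits["distributed"] < limits["centralized"]


def meets_agreement(layer, response_time_ms, limits=LIMITS_MS):
    """Evaluate one measured response time against the limit of its own layer."""
    return response_time_ms <= limits[layer]


# The same 120 ms measurement is judged differently at each layer.
for layer in ("local", "distributed", "centralized"):
    print(layer, meets_agreement(layer, 120.0))
```

The point of the sketch is that one measured value can violate the agreement at the local layer while satisfying it at the higher layers.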

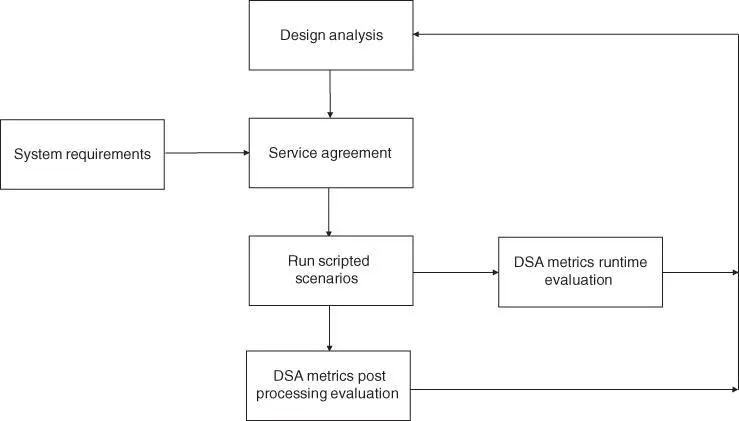

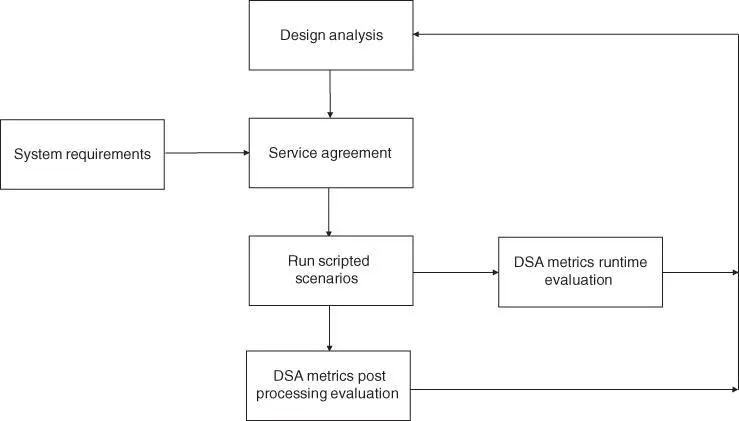

When DSA is provided as a set of cloud services, the design should go through an iterative process before the model is deemed workable. This process should include the following steps:

1 Create an initial service agreement derived from requirements and design analysis.

2 Run scripted scenarios to evaluate how the agreement is met during runtime through created metrics.

3 Run post‐processing analysis of these scripted scenarios to gain further knowledge of the properties of the selected metrics.

4 Refine the service agreement.

Figure 5.7 illustrates this iterative concept. The outcome of this process is a defined service agreement with measurable metrics that a deployed system is expected to meet.

Figure 5.7 Iterative process to create a workable DSA service agreement.
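As a rough illustration of the four steps, the loop below refines a single response time limit against batches of scripted scenario measurements. Everything specific here is an assumption made for the sketch: the function names, the compliance target, and the rule of relaxing the limit by a fixed step. The text does not prescribe how the agreement is refined.

```python
def compliance(limit_ms, samples_ms):
    """Fraction of measured response times that meet the agreed limit."""
    return sum(1 for s in samples_ms if s <= limit_ms) / len(samples_ms)


def refine_agreement(initial_limit_ms, scenario_runs, target=0.95, step_ms=5.0):
    """Iterate over scripted scenario runs until the agreement is met.

    scenario_runs is a list of sample lists, one per scripted run.
    Returns (limit, history, converged).
    """
    limit = initial_limit_ms                      # step 1: initial agreement
    history = []
    for samples in scenario_runs:                 # step 2: run scripted scenarios
        fraction = compliance(limit, samples)     # step 3: post-processing analysis
        history.append((limit, fraction))
        if fraction >= target:
            return limit, history, True
        limit += step_ms                          # step 4: refine the agreement
    # The last refined limit was never evaluated, so report no convergence.
    return limit, history, False


runs = [[40, 55, 62, 48], [41, 52, 58, 47], [39, 50, 57, 45]]
limit, history, converged = refine_agreement(50.0, runs, target=1.0)
print(limit, converged)
```

A real design would refine several metrics at once and might tighten the system rather than relax the agreement; the sketch only shows the shape of the loop.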

With standard cloud services, a customer should be able to compare two service agreements from two different providers and select the provider that best meets their needs. The provider of an IaaS attempts to optimize the use of infrastructure resources dynamically in order to create an attractive service agreement. If the scripted scenarios in Figure 5.7 are selected to represent deployed scenarios accurately, and if the iterative process in Figure 5.7 is run enough times and with enough samples, the resulting service agreement should be met by the deployed system. However, there should still be room to refine the cognitive algorithms, policies, rule sets, and configuration parameters after deployment if post‐processing analysis shows that a change is needed. A good system design should only require refining policies, rule sets, and configuration parameters, without the need for software modification. Such a design allows the deployed cognitive engine to morph based on post‐processing analysis results.

5.3.3 Examples of DSA Cloud Services Metrics

This section presents some examples of DSA cloud services metrics that can be considered in DSA design. Note that these are examples and the designer can choose to add more metrics depending on the system requirements and design analysis.

Metric name : Response time.

Metric description : Response time between when an entity requests a DSA service and when the service is granted.

Metric measured property : Time.

Metric scale : Milliseconds.

Metric source : Depends on the hierarchy of the networks. The source is always a DSA cognitive engine but the response can be local, distributed cooperative, or centralized. The response can also be deferred to a higher hierarchy DSA cognitive engine.

Note : Response time can be more than one metric. Response time for a local decision is measured differently from response time from a gateway or a central arbitrator. The design can create more than one response time metric.
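One possible way to hold such a metric in software is a small record that mirrors the template fields above, with one instance per response type. The class name, field names, and sample values below are hypothetical and serve only to illustrate the idea of having more than one response time metric.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class MetricRecord:
    """Hypothetical container mirroring the metric template fields."""
    name: str
    description: str
    measured_property: str
    scale: str
    source: str
    samples: list = field(default_factory=list)

    def record(self, value: float) -> None:
        """Store one measurement expressed in the metric's scale."""
        self.samples.append(value)

    def average(self) -> float:
        """Mean of the collected measurements (requires at least one sample)."""
        return mean(self.samples)


LAYERS = ("local", "distributed cooperative", "centralized")

# One response time metric per type of response.
response_time = {
    layer: MetricRecord(
        name=f"Response time ({layer})",
        description="Time between a DSA service request and its grant",
        measured_property="Time",
        scale="Milliseconds",
        source=f"DSA cognitive engine ({layer} response)",
    )
    for layer in LAYERS
}

# Made-up sample values, in milliseconds.
response_time["local"].record(12.0)
response_time["local"].record(18.0)
response_time["centralized"].record(240.0)
print(response_time["local"].average())
```

Keeping the measurements of each layer in a separate record avoids mixing local and centralized response times in one average.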

Metric name : Hidden node detection/misdetection.

Metric description : Success or failure in detecting a hidden node.

Metric measured property : Success or failure.

Metric scale : Binary.

Metric source : An external entity, such as a primary user, files a complaint that the designed system is using its spectrum.

Note : Scripted scenarios are needed to evaluate this metric. It is evaluated by an external entity, not by the designed system.
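Because each outcome is binary, scripted scenario results for this metric can be summarized as a detection rate. The sketch below assumes each scripted scenario yields one boolean outcome (True when the hidden node was detected); that representation and the function name are assumptions for illustration.

```python
def detection_rate(outcomes):
    """Fraction of scripted scenarios in which the hidden node was detected.

    outcomes: iterable of booleans, True = detected, False = misdetected.
    """
    outcomes = list(outcomes)
    if not outcomes:
        raise ValueError("at least one scenario outcome is required")
    return sum(outcomes) / len(outcomes)


# Four made-up scripted scenarios with one misdetection.
print(detection_rate([True, True, False, True]))
```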

5.3.3.3 Meeting Traffic Demand

Metric name : Global throughput.

Metric description : Traffic going through the system over time (global throughput efficiency).

Metric measured property : Throughput averaged over time.

Metric scale : bps.

Metric source : Global measure of traffic going through the system. Successful use of spectrum resources dynamically should increase the wireless network's capacity to accommodate higher traffic in bps.

Note : This metric is system dependent. Some systems, such as cellular systems, link traffic demand to revenue. In such systems the metric gives insight not only into achieving higher throughput but also into serving a larger number of users, which increases revenue. Some users' rates can be lowered while their service continues, in order to accommodate more users, as long as the service agreement is met.
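A time-averaged throughput in bps can be computed from traffic counts collected over measurement intervals. The sketch below assumes the system reports (bits, seconds) pairs per interval; that reporting format and the function name are assumptions for the example.

```python
def average_throughput_bps(intervals):
    """Time-averaged throughput over a list of (bits, seconds) intervals."""
    total_bits = sum(bits for bits, _ in intervals)
    total_seconds = sum(seconds for _, seconds in intervals)
    if total_seconds <= 0:
        raise ValueError("total measurement time must be positive")
    return total_bits / total_seconds


# Two made-up one-second intervals carrying 1 Mb and 3 Mb.
print(average_throughput_bps([(1_000_000, 1.0), (3_000_000, 1.0)]))
```

Dividing total bits by total time weights each interval by its duration, which differs from averaging per-interval rates when intervals have unequal lengths.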

Metric name : Rippling.

Metric description : The stability of the assigned spectrum.

Metric measured property : Time.

Metric scale : Minutes.

Metric source : The DSA cognitive engine can track the time between two consecutive frequency updates.

Note : Rippling can have a negative impact on the previous metric (global throughput) because it can reduce network throughput. This metric can be measured at the node level, at the gateway level, and at the central arbitrator level. Rippling at higher levels (e.g., the central arbitrator) can have a much worse impact than rippling at the local node, so evaluation of this metric depends on where it is measured.
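The time between consecutive frequency updates can be derived from a log of update timestamps. The sketch below assumes the cognitive engine logs each frequency update as a timestamp in seconds; the log format and the function name are assumptions for illustration.

```python
def update_intervals_minutes(update_times_s):
    """Minutes elapsed between each pair of consecutive frequency updates."""
    times = sorted(update_times_s)
    return [(later - earlier) / 60.0 for earlier, later in zip(times, times[1:])]


# Made-up update timestamps (seconds since the start of a scenario).
intervals = update_intervals_minutes([0, 600, 900, 2700])
print(intervals)       # gaps between consecutive updates
print(min(intervals))  # the shortest gap marks the least stable period
```

Short intervals indicate frequent reassignment and therefore low stability of the assigned spectrum at the level where the log was collected.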
