(2.2)

This study focuses on the character domain. We therefore use the Omniglot dataset to train the model to learn a discriminative function and image features. The Omniglot dataset consists of 1,623 handwritten characters from 50 alphabets [6]. Each character has 20 samples, each written by a different individual recruited through the Amazon Mechanical Turk platform. The dataset is divided into a training set of 30 alphabets and a test set of 20 alphabets. We train on data from the training set only and validate on data from the test set.

The learning model is trained on an AWS EC2 instance with four vCPUs and an Nvidia Tesla V100 GPU with 16 GB of memory. We trained our models for up to 500 epochs, manually adjusting the learning rate depending on convergence.

Before model training, the images were grouped into couples. For a couple of images from the same category the expected prediction is 1, and for any other couple it is 0. Data fetching is done on the CPU while the previously fetched data are fed to and processed on the GPU. This overlap significantly reduced the training time.
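The overlap between CPU-side fetching and GPU-side processing can be illustrated with a small producer-consumer sketch. The text does not name the framework or loading mechanism that was used, so the `prefetch` helper, its `buffer_size` parameter, and the background-thread design below are assumptions made purely for illustration.

```python
import queue
import threading


def prefetch(batches, buffer_size=2):
    """Yield items from `batches` while a background thread loads the next ones."""
    buffer = queue.Queue(maxsize=buffer_size)
    sentinel = object()  # marks the end of the stream

    def producer():
        # Runs on the CPU in the background; blocks when the buffer is full.
        for batch in batches:
            buffer.put(batch)
        buffer.put(sentinel)

    threading.Thread(target=producer, daemon=True).start()

    while True:
        batch = buffer.get()  # the consumer (e.g. a GPU training step) waits here
        if batch is sentinel:
            return
        yield batch
```

While the consumer works on one batch, the producer thread is already fetching the next one, so loading time is hidden behind compute time whenever loading is the faster of the two.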

Algorithm 1 describes the data generation process for model training. The process takes as input the list of character categories and the images that belong to each category, and it outputs the image couples together with their expected values. It starts by generating similar couples. The loop in line 1 goes through each character category, and the get_similar_couples function in line 2 generates the couples that belong to that category. These image couples are added to the output array training_couples in line 3, and the corresponding expected values are added in line 4. For matching image couples the prediction is one, so the value 1 is added to the expected values array once for each couple.

In lines 5 and 6, the algorithm loops over the category list twice in a nested loop, and line 7 checks whether the two categories are the same. If they are, the process skips to the next iteration of the loop using the continue keyword in line 8. If the categories differ, the process generates the mismatching image couples from the images of the two categories under consideration, as given in line 9. These image couples are then added to the training_couples array in line 10. Since these are false couples, the prediction should be zero. Thus, in line 11, the value 0 is added to the expected values array once for each couple in the image_couples array.

After that, in line 12, the output arrays are shuffled before model training so that the training samples arrive in random order.

Algorithm 1: Data generation

Input: cat_list[], category_images[]

Output: training_couples[], expected_values[]

1. for (category in cat_list[])
2.     image_couples = get_similar_couples(category_images[category])
3.     training_couples.add(image_couples)
4.     expected_values.add([1] * image_couples.length)
5. for (category1 in cat_list[])
6.     for (category2 in cat_list[])
7.         if (category1 == category2)
8.             continue
9.         image_couples = get_different_couples(category_images[category1], category_images[category2])
10.         training_couples.add(image_couples)
11.         expected_values.add([0] * image_couples.length)
12. shuffle(training_couples, expected_values)
13. return training_couples[], expected_values[]
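Algorithm 1 can be sketched in Python as follows. The bodies of `get_similar_couples` and `get_different_couples` are not given in the text, so the versions here (all unordered pairs within a category, and all cross pairs between two categories) are assumptions, as is the `seed` parameter. The shuffle applies one permutation to both arrays so that each couple keeps its label.

```python
import itertools
import random


def get_similar_couples(images):
    """Assumed helper: every unordered pair of images from one category."""
    return list(itertools.combinations(images, 2))


def get_different_couples(images_a, images_b):
    """Assumed helper: every pair with one image from each category."""
    return list(itertools.product(images_a, images_b))


def generate_training_data(cat_list, category_images, seed=None):
    training_couples, expected_values = [], []

    # Lines 1-4: matching couples, labelled 1.
    for category in cat_list:
        couples = get_similar_couples(category_images[category])
        training_couples.extend(couples)
        expected_values.extend([1] * len(couples))

    # Lines 5-11: mismatching couples, labelled 0.
    for category1 in cat_list:
        for category2 in cat_list:
            if category1 == category2:
                continue
            couples = get_different_couples(
                category_images[category1], category_images[category2]
            )
            training_couples.extend(couples)
            expected_values.extend([0] * len(couples))

    # Line 12: shuffle couples and labels with the same permutation.
    order = list(range(len(training_couples)))
    random.Random(seed).shuffle(order)
    training_couples = [training_couples[i] for i in order]
    expected_values = [expected_values[i] for i in order]
    return training_couples, expected_values
```

Because the nested loop visits every ordered pair of categories, each mismatching couple appears twice, once in each order. Under these assumed helpers the mismatching couples also far outnumber the matching ones, so a practical pipeline may need to subsample them; the text does not say whether this was done.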

2.4 Experiments and Results

We experimented with several models based on capsule networks, keeping the convolutional Siamese network proposed by Koch et al. as the baseline. As an initial attempt to understand the applicability of capsules in Siamese networks, we integrated the network proposed by Sabour et al. [9] into a Siamese network; this did not give satisfactory results because it failed to converge properly. Sabour et al. proposed their model for the MNIST dataset, a collection of 28 × 28 images of digits. In our study, however, we scale this model up to the 105 × 105 images of the Omniglot dataset, which makes the learning model highly compute-intensive to train.

To reduce the computational cost, the previous model was improved using the ideas proposed in DeepCaps [34] for stacking multiple capsule layers, and finally the L1 distance layer was replaced with a vector difference layer. Validation accuracies for the different models are reported in Table 2.2. Here, three capsule-based Siamese networks were tested, with the convolutional Siamese network [7] as the baseline. The network based purely on Sabour et al. [9] performed poorly, while Deep Capsule Siamese 1, with deep capsule networks, and Deep Capsule Siamese 2, with deep capsule networks and the new vector difference layer, performed on par with the baseline. This indicates that the original Siamese network with classical capsule layers does not generalize well enough.

Table 2.2 Model validation accuracy.

| Model | Validation accuracy (%) |
| --- | --- |
| Convolutional Siamese | 94 ± 2 |
| Sabour et al. Capsule Siamese | 78 ± 5 |
| Deep Capsule Siamese 1 | 89 ± 3 |
| Deep Capsule Siamese 2 | 95 ± 2.5 |

2.4.1 N-Way Classification

One expectation of this model is the ability to generalize from previous experience and use it to make decisions about completely new, unseen alphabets. The n-way classification task was therefore designed to evaluate the model on classifying previously unseen characters. Here, we used the 20 alphabets, comprising 659 characters, of the Omniglot evaluation set, which was not used in training. The model is therefore completely unfamiliar with these characters.

In this experiment, we designed the one-shot learning task as deciding the category of a given test image X out of n given categories. For an n-way classification task, we selected n character categories and chose one of them as the test category. The one-shot task is then prepared with one test image (X) from the test category and a reference image set {Xn} containing one image for each character category. The Siamese network is fed the couples (X, Xn) and predicts their similarity. The predicted category, n*, is the category with the maximum similarity, as in Equation (2.3), where argmax denotes the value of n that maximizes the function F.
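Written out from the description above, the selection rule takes the following form. The symbols F, X and Xn come from the text; the exact notation of the original equation may differ.

$$
n^{*} = \underset{n}{\arg\max}\; F(X, X_{n})
$$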

(2.3)

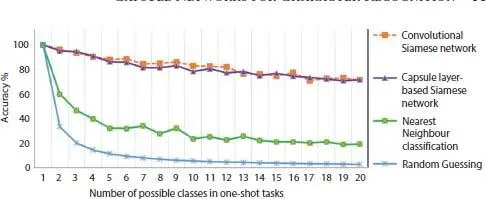

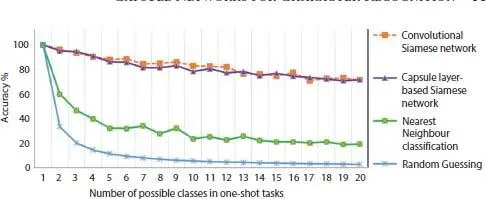

The model is evaluated on N-way classification with N varying in the range [1, 40]; the results are depicted in Figure 2.2.
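The evaluation procedure can be sketched as below. This is a minimal illustration, not the authors' evaluation code: `similarity` is a hypothetical stand-in for the trained Siamese network F, `images_by_category` is an assumed mapping from category name to its images, and drawing the test image and its reference as two different samples of the test category is also an assumption.

```python
import random


def one_shot_trial(similarity, images_by_category, n, rng):
    """Run one n-way one-shot task; return True if the prediction is correct."""
    categories = rng.sample(sorted(images_by_category), n)
    test_category = rng.choice(categories)

    # One reference image X_n per category.
    references = {c: rng.choice(images_by_category[c]) for c in categories}

    # Assumption: the test image X and the reference of its own category
    # are two different samples, so the match is never trivial.
    test_image, references[test_category] = rng.sample(
        images_by_category[test_category], 2
    )

    # Equation (2.3): choose the category with the highest similarity to X.
    predicted = max(
        categories, key=lambda c: similarity(test_image, references[c])
    )
    return predicted == test_category


def n_way_accuracy(similarity, images_by_category, n, trials=100, seed=0):
    """Fraction of correct predictions over repeated n-way one-shot tasks."""
    rng = random.Random(seed)
    hits = sum(
        one_shot_trial(similarity, images_by_category, n, rng)
        for _ in range(trials)
    )
    return hits / trials
```

Calling `n_way_accuracy` for each N from 1 to 40 would produce one accuracy curve of the kind plotted in Figure 2.2. The sketch requires at least n categories and at least two images per category.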

Figure 2.2 Omniglot one-shot learning performance of Siamese networks.

According to Figure 2.2, the model proposed in this study, a capsule-layer-based Siamese network, achieves results on par with Koch et al.’s convolutional Siamese network. However, our model has 2.4 million parameters, which is 40% fewer than the 4 million parameters of Koch et al.’s model. Although the overall performance of the two models is on par, there are certain cases in which our model performs better. For instance, the proposed model is better at identifying minor changes in characters.
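The 40% figure follows directly from the two parameter counts quoted above (in millions):

$$
\frac{4.0 - 2.4}{4.0} = \frac{1.6}{4.0} = 0.40
$$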
