After capturing patients' movements, the recorded data must be reduced so that the key features characterising the movements can be selected. Therefore, before conducting an automated performance assessment, these features need to be extracted by encoding human motions.

1.5.1 Human motion encoders in action recognition

This problem has been studied extensively in the field of human action recognition. For instance, Ren et al. [297] employed the silhouette of a dancer to represent his/her performance, extracting local features to control animated human characters. Wang et al. [372] obtained the contour of a walker from his/her silhouette to represent the walking motion. A spatio‐temporal silhouette representation, the silhouette energy image (SEI), and variability action models were used by Ahmad et al. [19] to represent and classify human actions. In both visual‐based and non‐visual‐based human action recognition, differential features such as velocity and acceleration, motion statistics, their spectra, and a variety of clustering and smoothing methods have been used to identify motion types. A two‐stage dynamic model was established by Kristan et al. [182] to track the centre of gravity of subjects in images. Velocity was employed as one of the features by Yoon et al. [390] to represent hand movement for the purpose of classification. Further, Panahandeh et al. [272] collected acceleration and rotation data from an inertial measurement unit (IMU) mounted on a pedestrian's chest to classify activities with a continuous hidden Markov model. Ito [154] estimated human walking motion by monitoring the acceleration of the subject with 3D acceleration sensors. Moreover, angular features, especially the joint angle and angular velocity, have been used to monitor and reconstruct articulated rigid body models corresponding to action states and types. Zhang et al. [397] fused various raw data into the angular velocity and orientation of the upper arm to estimate its motion. Donno et al. [97] collected angle and angular velocity data from a goniometer to monitor the motions of human joints. Angle was also utilised by Gu et al. [129] to recognise human motions in order to instruct a robot. Amft et al. [26] detected feeding phases by constructing a hidden Markov model with the angle feature derived from lower arm rotation. Apart from the above, only a few studies have considered trajectory shape features such as curvature and torsion. For example, Zhou et al. [401] extracted the trajectories of the upper limb and classified its motion by computing the similarity of these trajectories.
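The differential features mentioned above (velocity and acceleration) can be approximated from sampled positions with finite differences. The following is only a minimal sketch, not the method of any cited study: the function name `finite_difference`, the sample positions and the 10 Hz sampling rate are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, ...]


def finite_difference(samples: Sequence[Vec3], dt: float) -> List[Vec3]:
    """Forward-difference derivative of a uniformly sampled 3D signal.

    Returns one fewer sample than the input.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    return [
        tuple((b[i] - a[i]) / dt for i in range(3))
        for a, b in zip(samples, samples[1:])
    ]


# Hypothetical joint positions in metres, sampled at 10 Hz (assumed values).
positions = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.3, 0.0, 0.0), (0.6, 0.0, 0.0)]
dt = 0.1

velocity = finite_difference(positions, dt)      # first derivative, m/s
acceleration = finite_difference(velocity, dt)   # second derivative, m/s^2
```

In practice, raw sensor data are usually smoothed before differentiation, because finite differences amplify measurement noise.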

1.5.2 Human motion encoders in physical telerehabilitation

Although a number of encoding approaches have been investigated, not all of these approaches have been adopted in the physical telerehabilitation field, where the angles and trajectories of joints are usually utilised.

Of these two representations, the angle‐based approach is more widely used. This is mainly because human limbs are normally modelled as articulated rigid bodies. Additionally, some measurement devices, such as IMUs, can measure the orientation of limbs easily. Therefore, joint angles and limb orientations can be acquired without much difficulty. Limb segments are hinged together with various degrees of freedom (DOF), as listed in Tables 1.1 and 1.2.

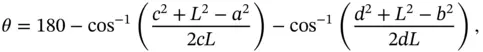

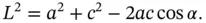

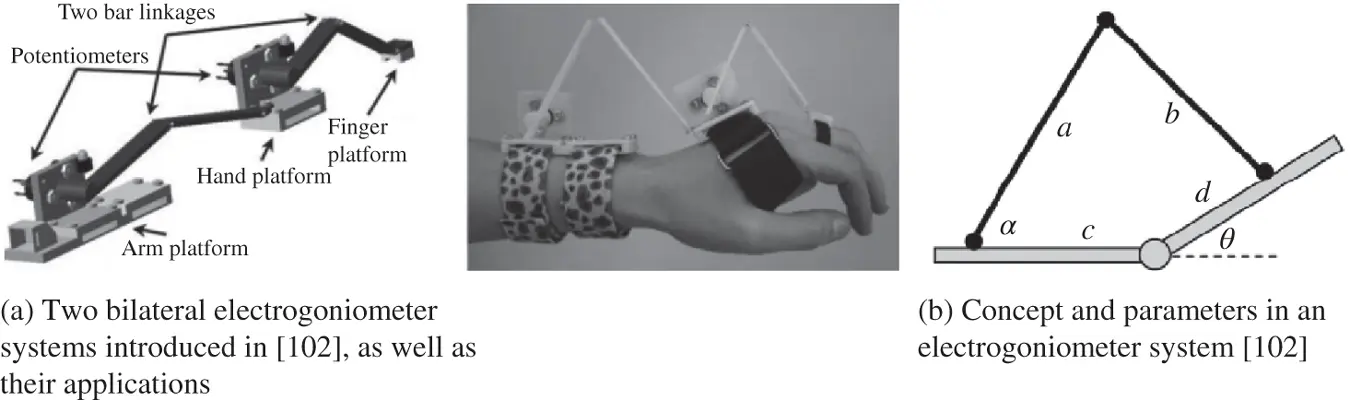

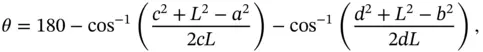

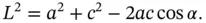

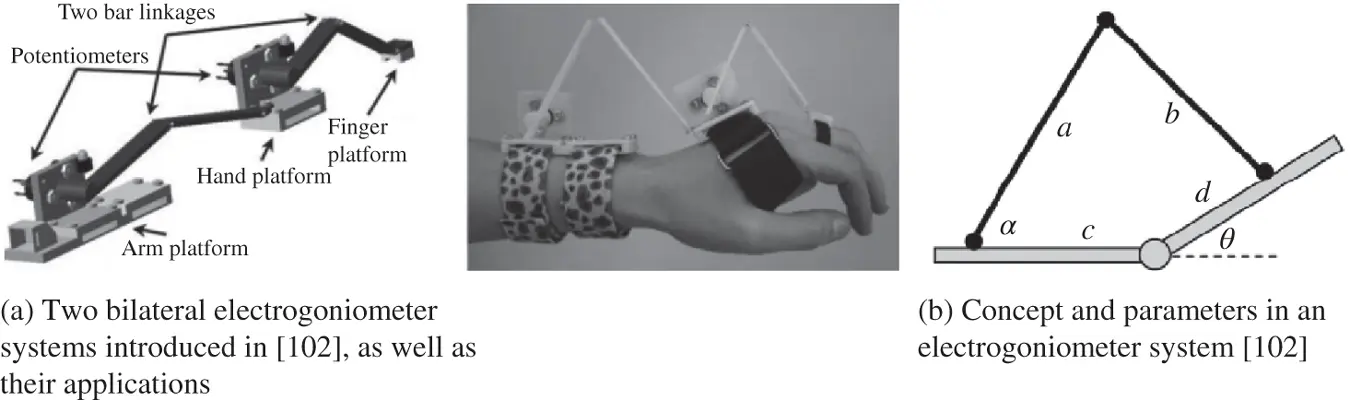

A number of examples utilising angle and its derivatives as encoders can be found. For instance, Tseng et al. [357] evaluated two platforms (Octopus II and ECO) to capture human motions for home rehabilitation. On both platforms, joint angles were measured to represent the movement of limbs, using the same type of compass (TDCM3 electrical compass) but different accelerometers: the FreeScale MMA7260QT and the Hitachi‐Metal H34C, respectively. Two types of angles were taken into consideration. The first was the joint angle between limbs, such as the angle of the elbow or knee. The second was the angle between the orientation of a sensor and gravity. Moreover, in the telerehabilitation system developed by Luo et al. [218], joint angles were utilised to encode the movements of the upper extremity. In this system, the angles of the shoulder and wrist were measured by two IMUs, as each of these joints was modelled with three degrees of freedom. The angles of the elbow and fingers were measured by an optical linear encoder (OLE) and a glove made of multiple OLEs, respectively, because these joints could be modelled with one degree of freedom. Additionally, Durfee et al. [102] introduced two bilateral electrogoniometers in a home telerehabilitation system for post-stroke patients. These electrogoniometers were attached to the wrist and hand of a subject, respectively. The angles of flexion and extension in the wrist and the first metacarpophalangeal (MCP) joints were measured to represent the movement of the wrist and hand. Two potentiometers (refer to Figure 1.10a) were utilised to calculate the joint angle (θ) as

(1.4)

where the distance from the anatomic joint to the linkage joint is

(1.5)

Figure 1.10 Electrogoniometer with two potentiometers used to measure joint angles. Source: Durfee et al. [102]. © 2009, ASME.
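The second angle type considered by Tseng et al., the angle between a sensor's orientation and gravity, can be illustrated with a static accelerometer reading. This is a sketch under stated assumptions rather than the procedure used in [357]: the function name `tilt_from_gravity` is hypothetical, the sensor is assumed to be at rest so that it measures gravity only, and the sensor z axis is assumed to read positive when aligned with the reference direction (sign conventions differ between devices).

```python
import math


def tilt_from_gravity(ax: float, ay: float, az: float) -> float:
    """Angle in degrees between the sensor z axis and the measured gravity vector.

    Assumes a static sensor, so (ax, ay, az) contains gravity only.
    """
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("accelerometer reading has zero magnitude")
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    cos_theta = max(-1.0, min(1.0, az / norm))
    return math.degrees(math.acos(cos_theta))


# Assumed readings in m/s^2: sensor lying flat, then rotated onto its side.
tilt_flat = tilt_from_gravity(0.0, 0.0, 9.81)
tilt_side = tilt_from_gravity(9.81, 0.0, 0.0)
```

Because the reading is normalised, the result does not depend on the accelerometer's units or scale factor, only on the direction of the measured vector.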

Apart from the above three examples, in some studies where the motion trajectories of joints are captured, angle information is still derived for encoding human movements. For example, Adams et al. [15] developed a virtual reality system to assess the motor function of upper extremities in daily living. To describe the movement of the upper body, they used the swing angles of the shoulder joint along the Y and Z axes, the twist angle of the shoulder, the angle of the elbow, the first and second derivatives of these angles, the bone lengths of the collarbone, upper arm and forearm, as well as the pose (position, yaw and pitch) of the vector along the collarbone. Here the collarbone is a virtual bone connecting the two shoulders. These parameters formed the state of an unscented Kalman filter, while the positions of the shoulders, elbows and wrists read from a Kinect formed the observation. Another example is that of Wenbing et al. [378], who evaluated the feasibility of using a single Kinect with a series of rules to assess the quality of movements in rehabilitation. Five movements, namely hip abduction, bowling, sit to stand, can turn and toe touch, were studied. For the first four movements, angles were used as encoders. For instance, the change of angle between the left and right thighs (the vectors from the hip centre to the left and right knees) was used to represent the angle of hip abduction, while the dot product of two vectors (from the hip centre to the left and right shoulders) was utilised to compute the angle encoding the movement of bowling. Additionally, Olesh et al. [267] proposed an automated approach to assess the impairment of upper limb movements caused by stroke. To encode the movement of the upper extremities, the angles of four joint motions, namely shoulder flexion‐extension, shoulder abduction‐adduction, elbow flexion‐extension and wrist flexion‐extension, were calculated from the 3D positions of joints measured with Kinect.
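Deriving an angle from 3D joint positions, as in the Kinect‐based studies above, typically reduces to the dot product of two limb vectors. The sketch below shows this generic computation; it is not the implementation of any cited system, and the function names and joint coordinates are assumed for illustration.

```python
import math
from typing import Sequence


def angle_between(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle in degrees between two 3D vectors, via the dot product."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("zero-length vector")
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def joint_angle(proximal: Sequence[float], joint: Sequence[float],
                distal: Sequence[float]) -> float:
    """Angle at `joint` formed by the segments towards `proximal` and `distal`."""
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    return angle_between(u, v)


# Hypothetical joint positions in metres (assumed values, not measured data).
shoulder = (0.0, 0.0, 0.0)
elbow = (0.3, 0.0, 0.0)
wrist = (0.3, 0.25, 0.0)

elbow_angle = joint_angle(shoulder, elbow, wrist)
```

The same `angle_between` helper applies to the hip abduction case, with the two vectors running from the hip centre to the left and right knees.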
