Stephen Winters-Hilt - Informatics and Machine Learning

Здесь есть возможность читать онлайн «Stephen Winters-Hilt - Informatics and Machine Learning» — ознакомительный отрывок электронной книги совершенно бесплатно, а после прочтения отрывка купить полную версию. В некоторых случаях можно слушать аудио, скачать через торрент в формате fb2 и присутствует краткое содержание. Жанр: unrecognised, на английском языке. Описание произведения, (предисловие) а так же отзывы посетителей доступны на портале библиотеки ЛибКат.

- Название:Informatics and Machine Learning

- Автор:

- Жанр:

- Год:неизвестен

- ISBN:нет данных

- Рейтинг книги:3 / 5. Голосов: 1

-

Избранное:Добавить в избранное

- Отзывы:

-

Ваша оценка:

- 60

- 1

- 2

- 3

- 4

- 5

Informatics and Machine Learning: краткое содержание, описание и аннотация

Предлагаем к чтению аннотацию, описание, краткое содержание или предисловие (зависит от того, что написал сам автор книги «Informatics and Machine Learning»). Если вы не нашли необходимую информацию о книге — напишите в комментариях, мы постараемся отыскать её.

Discover a thorough exploration of how to use computational, algorithmic, statistical, and informatics methods to analyze digital data Informatics and Machine Learning: From Martingales to Metaheuristics

ad hoc, ab initio

Informatics and Machine Learning: From Martingales to Metaheuristics

Informatics and Machine Learning — читать онлайн ознакомительный отрывок

Ниже представлен текст книги, разбитый по страницам. Система сохранения места последней прочитанной страницы, позволяет с удобством читать онлайн бесплатно книгу «Informatics and Machine Learning», без необходимости каждый раз заново искать на чём Вы остановились. Поставьте закладку, и сможете в любой момент перейти на страницу, на которой закончили чтение.

Интервал:

Закладка:

Is genomic DNA random? Let us read thru a dna file, consisting of a sequence of a,c,g, and t's, and get their counts… then compute the shannon entropy vs. random (uniform distribution, e.g. p = 1/4 for each of the four possibilities). In order to do this we must learn file input/output (i/o) to “read” the data file:

------------------ prog1.py addendum 4 ----------------------- fo = open("Norwalk_Virus.txt", "r+") str = fo.read() # print(str) fo.close() ---------------- end prog1.py addendum 4 ---------------------

Notes on syntax: the example above shows the standard template for reading a data file, where the datafile's name is Norwalk_Virus.txt. The subroutine “open” is a Python command that handles file i/o. As its name suggests, it “opens” a datafile.

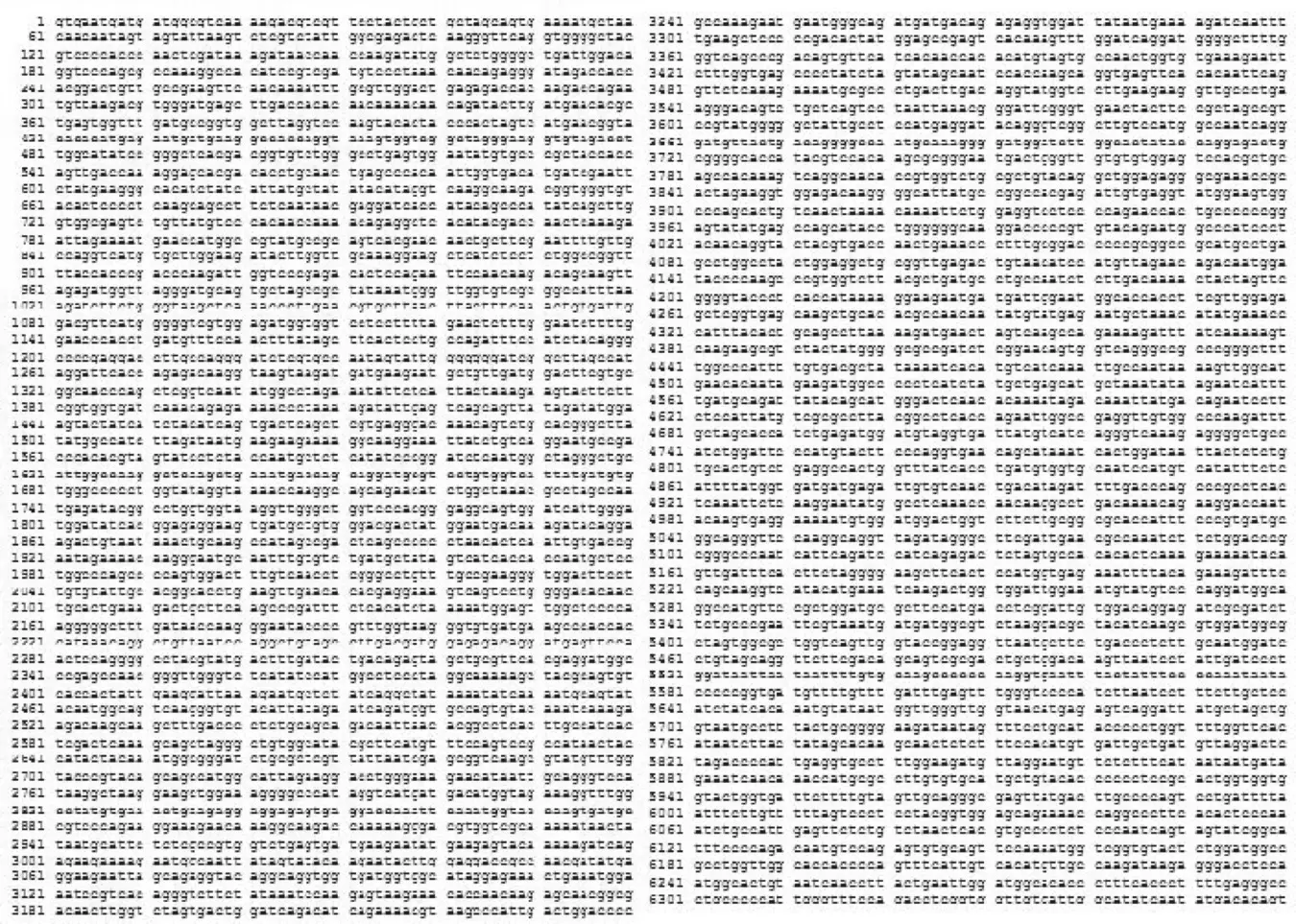

Figure 2.1 The Norwalk virus genome (the “cruise ship virus”).

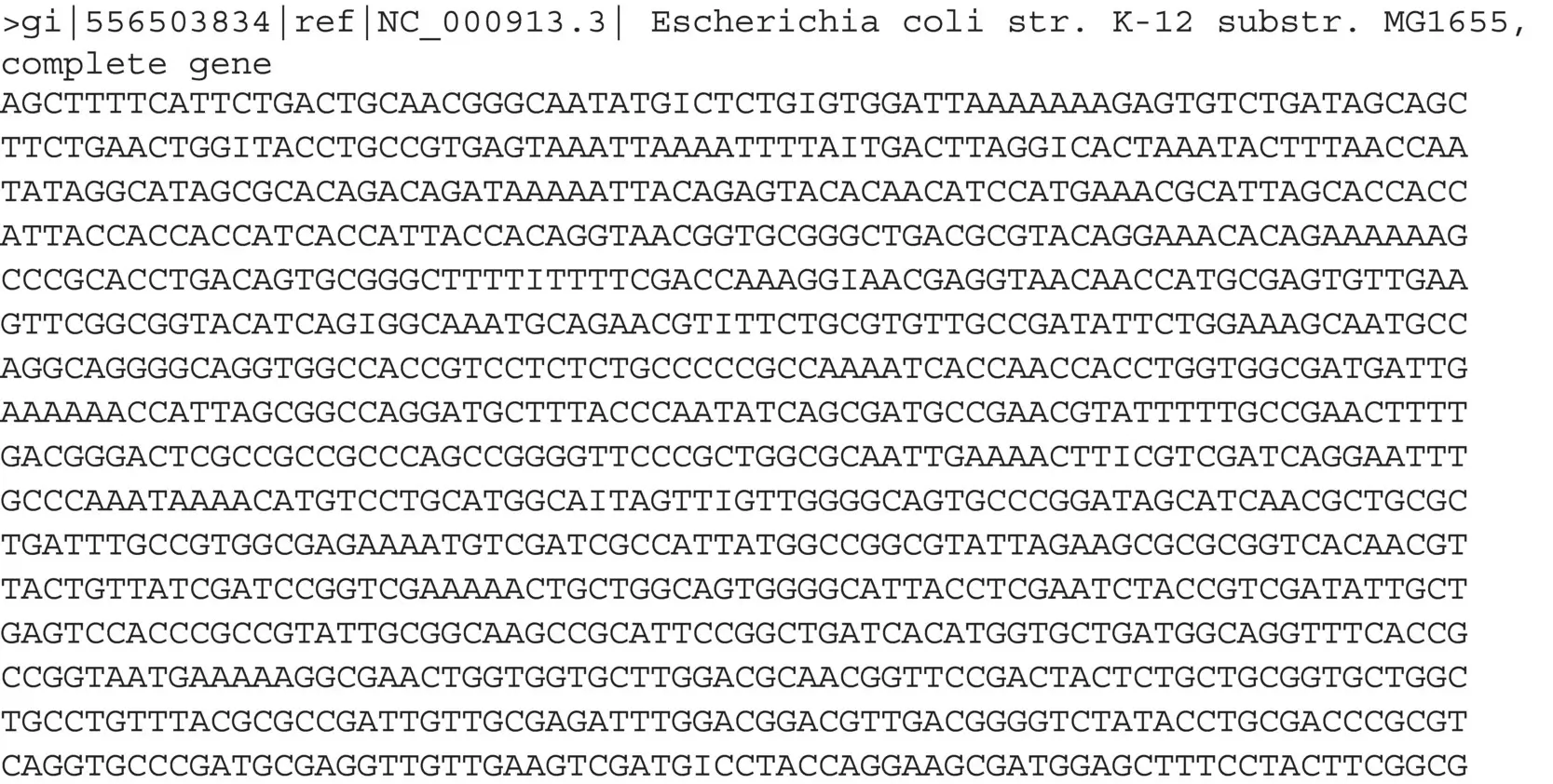

The Norwalk virus file has nonstandard format and is shown in its entirety in Figure 2.1(split into two columns). The Escherichia coli genome ( Figure 2.2), on the other hand, has standard FASTA format. (FASTA is the name of a program (~1985), where a file format convention was adopted, allowing “flat‐file” record access that has been used in similar form ever since.)

The E. coli genome file is shown only for the first part (it is 4.6 Mb) in Figure 2.2, where the key feature of the FASTA file is apparent on line 1, where a “>” symbol should be present, indicating a label (or comment – information that will almost always be present):

Python has a powerful regular expression module (named “re” and imported in the first code sample of prog1.py). Regular expression processing of strings of characters is a mini programming language in its own right, thus a complete list of the functionalities of the re module will not be given here. Focusing on the “findall” function, it does as its name suggests, it finds all entries matching the search string characters in an array in the order they are encountered in the string. We begin with the string comprising the data file read in the previous example. We now traverse the string searching for characters matching those specified in the pattern field. The array of a,c,g,t's that results has conveniently stripped any numbers, spaces, or line returns in this process, and a straightforward count can be done:

Figure 2.2 The start of the E. coli genome file, FASTA format.

---------------------- prog1.py addendum 5 ------------------- pattern = '[acgt]' result = re.findall(pattern, str) seqlen = len(result) # sequence = "" # sequence = sequence.join(result) # print(sequence) print("The sequence length of the Norwalk genome is: ") print(seqlen) a_count=0 c_count=0 g_count=0 t_count=0 for index in range(0,seqlen): if result[index] == 'a': a_count+=1.0 elif result[index] == 'c': c_count+=1.0 elif result[index] == 'g': g_count+=1.0 elif result[index] == 't': t_count+=1.0 else: print("bad char\n") norwalk_counts = np.array([a_count, c_count, g_count, t_count]) print(norwalk_counts) norwalk_probs = np.array([0.0,0,0,0]) norwalk_probs = count_to_freq(norwalk_counts) value = shannon(norwalk_probs) print(value) -------------------- end prog1.py addendum 5 -----------------

We now traverse the array of single acgt's extracted from the raw genome data file, and increment counters associated with the acgt's as appropriate. At the end we have gotten the needed counts, and can then use our subroutines to see what Shannon entropy occurs.

Note on the informatics result: notice how the Shannon entropy for the frequencies of {a,c,g,t} in the genomic data differs only slightly from the Shannon entropy that would be found for a perfectly random, uniform, probability distribution (e.g. log(4)). This shows that although the genomic data is not random, i.e. the genomic data holds “information” of some sort, but we are only weakly seeing it at the level of single base usage.

In order to see clearer signs of nonrandomness, let us try evaluating frequencies at the base‐pair, or dinucleotide, level. There are 16 (4 × 4) dinucleotides that we must now get counts on:

------------------ prog1.py addendum 6 ----------------------- di_uniform = [1.0/16]*16 stats = {} for i in result: if i in stats: stats[i]+=1 else: stats[i]=1 #for i in sorted(stats, key=stats.get): for i in sorted(stats): print("%dx'%s'" % (stats[i],i)) stats = {} for index in range(1,seqlen): dinucleotide = result[index-1] + result[index] if dinucleotide in stats: stats[dinucleotide]+=1 else: stats[dinucleotide]=1 for i in sorted(stats): print("%dx'%s'" % (stats[i],i)) -------------------- end prog1.py addendum 6 -----------------

In the above example we see our first use of hash variables (“stats”) in keeping tabs on counts of occurrences of various outcomes. This is a fundamental way to perform such counts without enumerating all of the outcomes beforehand (which results in what is known as the “enumeration problem,” which is not really a problem, just a poor algorithmic approach). Further discussion of the enumeration “problem” and how it can be circumvented with use of hash variables will be described in Section 2.2.

The sequence information is traversed in a manner such that each of the dinucleotides is counted in the order seen, where the dinucleotide is extracted as a “window” of width two bases is slid across the genomic sequence. Each dinucleotide is entered into the count hash variable as a “key” entry, with the associated “value” being an increment on the count already seen and held as the old “value.” These counts are then transferred to an array to make use of our prior subroutines count_to_freq and Shannon.

In the results for Shannon entropy on dinucleotides, we still do not see clear signs of nonrandomness. Similarly, let us try trinucleotide level. There are 64 (4 × 4 × 4) trinucleotides that we must now get counts on:

-------------------- prog1.py addendum 7 --------------------- stats = {} order = 3 for index in range(order-1,seqlen): xmer = "" for xmeri in range(0,order): xmer+=result[index-(order-1)+xmeri] if xmer in stats: stats[xmer]+=1 else: stats[xmer]=1 for i in sorted(stats): print("%dx'%s'" % (stats[i],i)) ---------------- end prog1.py addendum 7 ---------------------

Still do not see real clear signs of non‐random at tribase‐level! So let us try 6‐nucleotide level. There are 4096 6‐nucleotides that we must now get counts on:

----------------- prog1.py addendum 8 ------------------------ def shannon_order( seq, order ): stats = {} seqlen = len(seq) for index in range(order-1,seqlen): xmer = "" for xmeri in range(0,order): xmer+=result[index-(order-1)+xmeri] if xmer in stats: stats[xmer]+=1 else: stats[xmer]=1 nonzerocounts = len(stats) print("nonzerocounts=") print(nonzerocounts) counts = np.empty((0)) for i in sorted(stats): counts = np.append(counts,stats[i]+0.0) probs = count_to_freq(counts) value = shannon(probs) print "The shannon entropy at order", order, "is:", value, "." shannon_order(result,6) ------------------- end prog1.py addendum 8 ------------------

2.1.1 Sample Size Complications

The 6‐nucleotide statistics analyzed in prog1.py in the preceding is typically called a hexamer statistical analysis. Where the window‐size for extracting the substrings has “‐mer” appended, thus six‐mer or hexamer. The term “‐mer” comes from oligomer, a polymer containing a small number of monomers in its specification. In the case of the hexamers we saw that there were 4096 possible hexamers, or length six substrings, when the “alphabet” of monomer types consists of four elements: a,c,g, and t. In other words, there are 4 6= 4096 such substrings. In the Norwalk virus analysis this large number of different things to count, versus sample size overall, raises sampling questions. The Norwalk virus has a genome that is only 7654 nucleotides long. As we sweep the six‐base window over that string to extract all of the hexamer counts we then obtain only 7654 − 5 = 7649 hexamer samples! Even with uniform distribution we will be getting barely two counts for most of the different hexamer types! Limitations due to sample size play a critical role in these types of analysis.

Читать дальшеИнтервал:

Закладка:

Похожие книги на «Informatics and Machine Learning»

Представляем Вашему вниманию похожие книги на «Informatics and Machine Learning» списком для выбора. Мы отобрали схожую по названию и смыслу литературу в надежде предоставить читателям больше вариантов отыскать новые, интересные, ещё непрочитанные произведения.

Обсуждение, отзывы о книге «Informatics and Machine Learning» и просто собственные мнения читателей. Оставьте ваши комментарии, напишите, что Вы думаете о произведении, его смысле или главных героях. Укажите что конкретно понравилось, а что нет, и почему Вы так считаете.