HHMMs are also used in systems that understand the meaning of natural-language sentences, an application that moves further up the conceptual hierarchy.

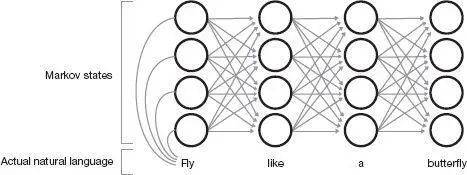

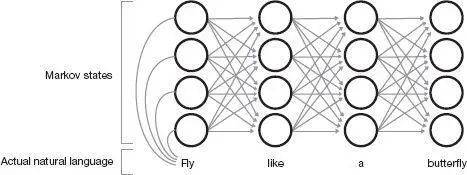

Hidden Markov states and possible transitions to produce a sequence of words in natural-language text.

To understand how the HHMM method works, we start out with a network that consists of all the state transitions that are possible. The vector quantization method described above is critical here, because otherwise there would be too many possibilities to consider.

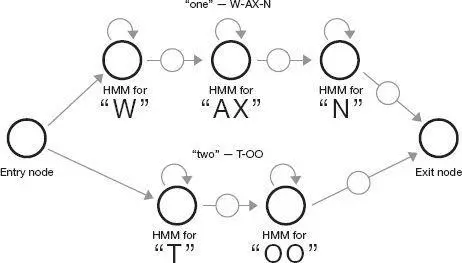

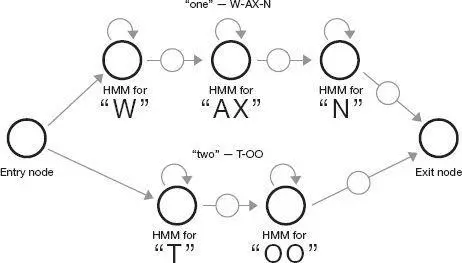

Here is a possible simplified initial topology:

A simple hidden Markov model topology to recognize two spoken words.

Sample utterances are processed one by one. For each, we iteratively modify the probabilities of the transitions to better reflect the input sample we have just processed. The Markov models used in speech recognition code the likelihood that specific patterns of sound are found in each phoneme, how the phonemes influence one another, and the likely orders of phonemes. The system can also include probability networks on higher levels of language structure, such as the order of words, the inclusion of phrases, and so on up the hierarchy of language.
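The idea of nudging transition probabilities after each sample can be illustrated with a minimal Python sketch. It makes a strong simplifying assumption: the state path for each utterance is already known, so training reduces to counting transitions and renormalizing. In a real hidden Markov model the states are hidden and must be estimated, which this sketch does not attempt. The state names and sample paths are hypothetical.

```python
from collections import defaultdict


def update_transitions(counts, state_path):
    """Add the transitions seen in one sample to the running counts."""
    for src, dst in zip(state_path, state_path[1:]):
        counts[src][dst] += 1


def transition_probabilities(counts):
    """Normalize the counts for each source state into probabilities."""
    probs = {}
    for src, row in counts.items():
        total = sum(row.values())
        probs[src] = {dst: n / total for dst, n in row.items()}
    return probs


# Hypothetical state paths, one per sample utterance (assumed to be known).
samples = [
    ["s1", "s1", "s2", "s3"],
    ["s1", "s2", "s2", "s3"],
]

counts = defaultdict(lambda: defaultdict(int))
for path in samples:
    # Samples are processed one by one, refining the estimates each time.
    update_transitions(counts, path)

probs = transition_probabilities(counts)
```

After both samples, `probs["s1"]` assigns one third of the probability to staying in `s1` and two thirds to moving on to `s2`.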

Whereas our previous speech recognition systems incorporated specific rules about phoneme structures and sequences explicitly coded by human linguists, the new HHMM-based system was not explicitly told that there are forty-four phonemes in English, the sequences of vectors that were likely for each phoneme, or what phoneme sequences were more likely than others. We let the system discover these “rules” for itself from thousands of hours of transcribed human speech data. The advantage of this approach over hand-coded rules is that the models develop probabilistic rules of which human experts are often not aware. We noticed that many of the rules that the system had automatically learned from the data differed in subtle but important ways from the rules established by human experts.

Once the network was trained, we began to attempt to recognize speech by considering the alternate paths through the network and picking the path that was most likely, given the actual sequence of input vectors we had seen. In other words, if we saw a sequence of states that was likely to have produced that utterance, we concluded that the utterance came from that cortical sequence. This simulated HHMM-based neocortex included word labels, so it was able to propose a transcription of what it heard.
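Picking the most likely path through a trained network is commonly done with the Viterbi algorithm. The sketch below applies it to a flat, two-state hidden Markov model rather than a hierarchical one, and it is not claimed to be the method used in the system described here. The states `A` and `B`, the observation codes `x` and `y` (standing in for vector quantization codes), and all probabilities are made up for illustration.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (log-probability, path) of the most likely state sequence."""

    def log(p):
        return math.log(p) if p > 0 else float("-inf")

    # best[s] holds (log-probability, path) of the best path ending in state s.
    best = {
        s: (log(start_p[s]) + log(emit_p[s][observations[0]]), [s])
        for s in states
    }
    for obs in observations[1:]:
        new_best = {}
        for s in states:
            # Choose the predecessor that gives the highest score on arrival in s.
            score, path = max(
                (
                    (best[prev][0] + log(trans_p[prev][s]), best[prev][1])
                    for prev in states
                ),
                key=lambda item: item[0],
            )
            new_best[s] = (score + log(emit_p[s][obs]), path + [s])
        best = new_best
    return max(best.values(), key=lambda item: item[0])


# Hypothetical toy model.
states = ["A", "B"]
start_p = {"A": 0.6, "B": 0.4}
trans_p = {"A": {"A": 0.7, "B": 0.3}, "B": {"A": 0.4, "B": 0.6}}
emit_p = {"A": {"x": 0.9, "y": 0.1}, "B": {"x": 0.2, "y": 0.8}}

log_prob, path = viterbi(["x", "x", "y", "y"], states, start_p, trans_p, emit_p)
print(path)  # ['A', 'A', 'B', 'B']
```

Working in log-probabilities avoids numerical underflow when the observation sequence is long.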

We were then able to improve our results further by continuing to train the network while we were using it for recognition. As we have discussed, simultaneous recognition and learning also take place at every level in our biological neocortical hierarchy.

Evolutionary (Genetic) Algorithms

There is another important consideration: How do we set the many parameters that control a pattern recognition system’s functioning? These could include the number of vectors that we allow in the vector quantization step, the initial topology of hierarchical states (before the training phase of the hidden Markov model process prunes them back), the recognition threshold at each level of the hierarchy, the parameters that control the handling of the size parameters, and many others. We can establish these based on our intuition, but the results will be far from optimal.

We call these parameters “God parameters” because they are set prior to the self-organizing method of determining the topology of the hidden Markov models (or, in the biological case, before the person learns her lessons by similarly creating connections in her cortical hierarchy). This is perhaps a misnomer, given that these initial DNA-based design details are determined by biological evolution, though some may see the hand of God in that process (and while I do consider evolution to be a spiritual process, this discussion properly belongs in chapter 9).

When it came to setting these “God parameters” in our simulated hierarchical learning and recognizing system, we again took a cue from nature and decided to evolve them—in our case, using a simulation of evolution. We used what are called genetic or evolutionary algorithms (GAs), which include simulated sexual reproduction and mutations.

Here is a simplified description of how this method works. First, we determine a way to code possible solutions to a given problem. If the problem is optimizing the design parameters for a circuit, then we define a list of all of the parameters (with a specific number of bits assigned to each parameter) that characterize the circuit. This list is regarded as the genetic code in the genetic algorithm. Then we randomly generate thousands or more genetic codes. Each such genetic code (which represents one set of design parameters) is considered a simulated “solution” organism.
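As a rough illustration of this encoding step, the following Python sketch packs a list of parameters into a fixed-length bit string and generates a random starting population. The parameter names and bit widths in `LAYOUT` are hypothetical and chosen only for the example.

```python
import random

# Hypothetical layout: (parameter name, number of bits assigned to it).
LAYOUT = [
    ("num_vq_vectors", 10),
    ("recognition_threshold", 6),
    ("initial_states", 8),
]
GENOME_BITS = sum(bits for _, bits in LAYOUT)


def random_genome(rng):
    """Generate one random genetic code as a list of 0/1 bits."""
    return [rng.randint(0, 1) for _ in range(GENOME_BITS)]


def decode(genome):
    """Turn a bit list back into a dictionary of integer parameters."""
    params = {}
    pos = 0
    for name, bits in LAYOUT:
        value = 0
        for bit in genome[pos:pos + bits]:
            value = (value << 1) | bit
        params[name] = value
        pos += bits
    return params


rng = random.Random(0)  # fixed seed so the run is repeatable
population = [random_genome(rng) for _ in range(1000)]
```

Each entry in `population` is one simulated organism, and `decode` recovers the set of design parameters it represents.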

Now we evaluate each simulated organism in a simulated environment by using a defined method to assess each set of parameters. This evaluation is a key to the success of a genetic algorithm. In our example, we would run each program generated by the parameters and judge it on appropriate criteria (did it complete the task, how long did it take, and so on). The best-solution organisms (the best designs) are allowed to survive, and the rest are eliminated.

Now we cause each of the survivors to multiply until the population is back to its original size. This is done by simulating sexual reproduction: In other words, we create new offspring where each new creature draws one part of its genetic code from one parent and another part from a second parent. Usually no distinction is made between male or female organisms; it’s sufficient to generate an offspring from any two arbitrary parents, so we’re basically talking about same-sex marriage here. This is perhaps not as interesting as sexual reproduction in the natural world, but the relevant point here is having two parents. As these simulated organisms multiply, we allow some mutation (random change) in the chromosomes to occur.

We’ve now defined one generation of simulated evolution; now we repeat these steps for each subsequent generation. At the end of each generation we determine how much the designs have improved (that is, we compute the average improvement in the evaluation function over all the surviving organisms). When the degree of improvement in the evaluation of the design creatures from one generation to the next becomes very small, we stop this iterative cycle and use the best design(s) in the last generation. (For an algorithmic description of genetic algorithms, see this endnote.) 11
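The generation cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used for the speech recognition work: the function name `evolve`, its default settings, and the toy evaluation function (counting the 1 bits in the genetic code) are all hypothetical stand-ins for a real design evaluation.

```python
import random


def evolve(fitness, genome_bits=32, pop_size=200, survivors=50,
           mutation_rate=0.01, min_gain=1e-3, max_generations=500, seed=0):
    """Run a simple genetic algorithm; return (best genome, last generation index)."""
    rng = random.Random(seed)
    population = [
        [rng.randint(0, 1) for _ in range(genome_bits)] for _ in range(pop_size)
    ]
    prev_avg = None
    for generation in range(max_generations):
        # Evaluate every organism and keep only the best ones.
        ranked = sorted(population, key=fitness, reverse=True)
        elite = ranked[:survivors]
        avg = sum(fitness(g) for g in elite) / survivors
        # Stop once the average improvement among survivors becomes very small.
        if prev_avg is not None and avg - prev_avg < min_gain:
            break
        prev_avg = avg
        # Refill the population with offspring of two arbitrary surviving parents.
        population = list(elite)
        while len(population) < pop_size:
            parent_a, parent_b = rng.sample(elite, 2)
            cut = rng.randrange(1, genome_bits)  # single crossover point
            child = parent_a[:cut] + parent_b[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            population.append(child)
    return ranked[0], generation


# Toy evaluation function: the number of 1 bits in the genetic code.
best, generations = evolve(fitness=sum)
```

Because the survivors are carried over unchanged into the next generation, the best design found so far is never lost. The trade-off is that the stopping rule can trigger on a temporary plateau, so in practice the threshold `min_gain` is itself a parameter that needs tuning.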

The key to a genetic algorithm is that the human designers don’t directly program a solution; rather, we let one emerge through an iterative process of simulated competition and improvement. Biological evolution is smart but slow, so to enhance its intelligence we greatly speed up its ponderous pace. The computer is fast enough to simulate many generations in a matter of hours or days, and we’ve occasionally had them run for as long as weeks to simulate hundreds of thousands of generations. But we have to go through this iterative process only once; as soon as we have let this simulated evolution run its course, we can apply the evolved and highly refined rules to real problems in a rapid fashion. In the case of our speech recognition systems, we used them to evolve the initial topology of the network and other critical parameters. We thus used two self-organizing methods: a GA to simulate the biological evolution that gave rise to a particular cortical design, and HHMMs to simulate the cortical organization that accompanies human learning.
