| | R1 | R2 | R3 |
|---|---|---|---|
| N | 4 | 6 | 2 |
| A | 1 | 2 | 0 |

| C | R1 | R2 | R3 |
|---|---|---|---|
| Task 1 | 0 | 2 | 0 |
| Task 2 | 1 | 1 | 0 |
| Task 3 | 1 | 1 | 1 |
| Task 4 | 1 | 0 | 1 |

| D | R1 | R2 | R3 |
|---|---|---|---|
| Task 1 | 2 | 2 | 2 |
| Task 2 | 1 | 1 | 0 |
| Task 3 | 0 | 1 | 0 |
| Task 4 | 1 | 1 | 1 |

If task 2 requests one unit of resource R1, granting such a request does not lead to deadlock because a sequence of resource allocations exists, i.e., giving the remaining resources to task 2, to task 3, followed by allocation to task 4, and finally to task 1, which allows all tasks to complete. This request from task 2 is safe and is allowed. If task 4 were to make the same request for R1 and if such a request were granted, this process would prevent task 2 from completing, which would result in a deadlock such that no task could resume execution. The request from task 4 is an unsafe request, and the deadlock avoidance algorithm would reject the request and put task 4 on hold while allowing other tasks to continue.
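The safety check for the task 2 request can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive implementation: it assumes that D holds each task's outstanding (remaining) demand and that a task returns everything it holds once it completes. The function names `safe_sequence` and `try_grant` are hypothetical.

```python
from typing import List, Optional

def safe_sequence(available: List[int],
                  allocated: List[List[int]],
                  remaining: List[List[int]]) -> Optional[List[int]]:
    """Return 1-based task numbers in an order that lets every task finish, or None."""
    free = list(available)
    done = [False] * len(allocated)
    order = []
    progress = True
    while progress:
        progress = False
        for i, need in enumerate(remaining):
            if not done[i] and all(n <= f for n, f in zip(need, free)):
                # Task i can run to completion and then returns what it holds.
                free = [f + c for f, c in zip(free, allocated[i])]
                done[i] = True
                order.append(i + 1)
                progress = True
                break  # rescan from the first task
    return order if all(done) else None

def try_grant(task: int, request: List[int],
              available: List[int],
              allocated: List[List[int]],
              remaining: List[List[int]]) -> Optional[List[int]]:
    """Tentatively grant `request` to `task` (1-based) without changing the inputs."""
    i = task - 1
    if any(r > a for r, a in zip(request, available)):
        return None  # not enough free units right now
    if any(r > d for r, d in zip(request, remaining[i])):
        return None  # exceeds the task's declared demand
    new_available = [a - r for a, r in zip(available, request)]
    new_allocated = [row[:] for row in allocated]
    new_remaining = [row[:] for row in remaining]
    new_allocated[i] = [c + r for c, r in zip(allocated[i], request)]
    new_remaining[i] = [d - r for d, r in zip(remaining[i], request)]
    return safe_sequence(new_available, new_allocated, new_remaining)

# Tables from the example (assumed column order R1, R2, R3).
A = [1, 2, 0]
C = [[0, 2, 0], [1, 1, 0], [1, 1, 1], [1, 0, 1]]
D = [[2, 2, 2], [1, 1, 0], [0, 1, 0], [1, 1, 1]]

print(try_grant(2, [1, 0, 0], A, C, D))  # [2, 3, 4, 1]
```

The returned order matches the sequence described above: task 2, then task 3, then task 4, and finally task 1.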

For deadlock avoidance to work, each task must estimate in advance its maximum requirement for each resource type. This maximum is often difficult to predict in a dynamic system. For more static embedded systems, or for systems with predictable operating environments, however, deadlock avoidance can be achieved. The estimates from all tasks are used to construct the demand table, D. Each estimate identifies only the potential maximum resource requirement along certain execution paths, so in the majority of cases it is an overestimate. Overestimation by each task can lead to inefficient resource utilization in a heavily loaded system: when most of the resources are in use, the algorithm is likely to classify more requests as unsafe. As a result, many tasks could be blocked while holding resources that were already allocated to them.

16.3.5 Deadlock Prevention

Deadlock prevention is a set of constraints and requirements built into a system so that resource requests that might lead to deadlocks are never made. Deadlock prevention differs from deadlock avoidance in that no run-time validation of resource allocation requests occurs. Deadlock prevention focuses on structuring a system so that at least one of the four conditions for deadlock (i.e., mutual exclusion, no preemption, hold-and-wait, and circular wait) is not satisfied.

This set of constraints and requirements placed on resource allocation requests is as follows:

· Eliminating the hold-and-wait deadlock condition. A task requests at one time all resources that it will need. The task can begin execution only when every resource from the request set is granted.

This requirement addresses the hold-and-wait condition for deadlock. A task that obtains all required resources before execution avoids the need to wait for anything during execution. This approach, however, has limited practicality and several drawbacks. In a dynamic system, tasks have difficulty predicting in advance what resources will be required. Even if all possible resource requirements could be accurately predicted, this prediction does not guarantee that every resource in this predicted set would be used. Execution paths, which external factors affect, determine which resources are used.

One major drawback of this approach is the implicit requirement that all resources must be freed at the same time. This requirement is important because a resource can be needed in multiple code paths; it can be used and later be reused. So, the resource must be kept until the end of task execution. Some of the resources, however, might be used once or used only briefly. It is inefficient for these resources to be kept for a long time because they cannot be reassigned to other tasks.
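The all-at-once request can be illustrated with a small Python sketch. It assumes a hypothetical `ResourcePool` class (not part of any kernel API) that tracks free resources by name and grants a request set only when every resource in it is free, so a task never holds some resources while waiting for others.

```python
import threading

class ResourcePool:
    """Hypothetical pool that grants a whole request set or nothing at all."""

    def __init__(self, names):
        self._free = set(names)
        self._cond = threading.Condition()

    def acquire_all(self, names, timeout=None):
        """Block until every requested resource is free, then take them together.

        Returns False, with nothing acquired, if the timeout expires first.
        """
        wanted = set(names)
        with self._cond:
            granted = self._cond.wait_for(lambda: wanted <= self._free, timeout)
            if granted:
                self._free -= wanted
            return granted

    def release_all(self, names):
        """Return the whole set at once and wake any waiting tasks."""
        with self._cond:
            self._free |= set(names)
            self._cond.notify_all()

pool = ResourcePool({"R1", "R2", "R3"})
first = pool.acquire_all({"R1", "R2"})                  # granted as a set
second = pool.acquire_all({"R2", "R3"}, timeout=0.01)   # R2 is busy: nothing is taken
pool.release_all({"R1", "R2"})
```

The sketch also makes the drawback visible: R1 and R2 stay unavailable to other tasks from `acquire_all` until `release_all`, even if the holder uses one of them only briefly.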

· Eliminating the no-preemption deadlock condition. A task must release already acquired resources if a new request is denied. The task must then initiate a new request including both the new resource and all previously held resources.

This requirement addresses the no-preemption condition for deadlock. This approach is slightly more dynamic than the previous method in that resources are acquired on an as-needed basis and only those resources needed for a particular execution path, instead of all possible resources, are acquired.

This approach, however, is not much better than the previous one. For tasks holding non-preemptible resources, this requirement means that each task must restart execution either from the beginning or from well-defined checkpoints. This process nullifies partially complete work. Potentially, a task might never complete, depending on the average number of tasks existing in the system at a given time and depending on the overall system scheduling behavior.
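A minimal sketch of this release-on-denial rule follows, using Python's `threading.Lock` as a stand-in for resources. The helper names `acquire_or_back_off` and `acquire_in_stages`, the retry limit, and the randomized pause between attempts are all assumptions made for illustration.

```python
import random
import threading
import time

def acquire_or_back_off(held, new_lock):
    """Try new_lock without blocking; if denied, release every lock in `held`."""
    if new_lock.acquire(blocking=False):
        held.append(new_lock)
        return True
    while held:
        held.pop().release()  # give up everything acquired so far
    return False

def acquire_in_stages(locks, max_attempts=100):
    """Acquire locks one at a time, restarting from scratch after any denial.

    Returns the list of held locks, or None if every attempt failed.
    """
    for attempt in range(max_attempts):
        held = []
        if all(acquire_or_back_off(held, lock) for lock in locks):
            return held
        # Assumed policy: a short random pause reduces repeated collisions.
        time.sleep(random.uniform(0, 0.001 * (attempt + 1)))
    return None
```

The bounded retry loop reflects the concern raised above: nothing guarantees that a task ever gets through a full pass, so a real system would need a policy for tasks that keep being forced to start over.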

· Eliminating the circular-wait deadlock condition. An ordering on the resources must be imposed so that if a task currently holds resource Ri, a subsequent request must be for resource Rj where j > i. The next request must be for resource Rk where k > j, and so on.

This imposition addresses the circular-wait condition for deadlock. Resources are organized into a hierarchical structure. A task is allowed to acquire additional resources while holding other resources, but these new resources must be higher in the hierarchy than any currently held resources.

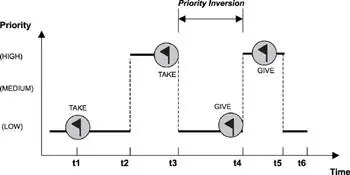

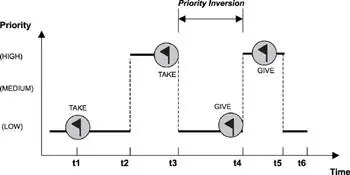

Priority inversion is a situation in which a low-priority task executes while a higher priority task waits on it due to resource contentions.

A high task priority implies a more stringent deadline. In a priority-based, preemptive scheduling system, the kernel schedules higher priority tasks first and postpones lower priority tasks until either all of the higher priority tasks are completed or the higher priority tasks voluntarily relinquish the CPU. In real-time embedded systems, the kernel strives to make the schedulability of the highest priority task deterministic. To do this, the kernel must preempt the currently running task and switch the context to run the higher priority task that has just become eligible, all within a known time interval. This scheduling behavior is the norm when tasks are independent of each other. Task interdependency is inevitable, however, when tasks share resources and synchronize their activities. Priority inversion occurs when such interdependency exists among tasks with different priorities.

Consider the situation shown in Figure 16.6, in which a higher priority task shares a resource with a lower priority task. The higher priority task must wait when the lower priority task has locked the resource, even though the higher priority task is eligible to run.

Figure 16.6: Priority inversion example.

As shown in Figure 16.6, at time t1 the low-priority task (LP-task) locks the shared resource. The LP-task continues until time t2 when the high-priority task (HP-task) becomes eligible to run. The scheduler immediately preempts the LP-task and context-switches to the HP-task. The HP-task runs until time t3 when it requires the shared resource. Because the resource is in the locked state, the HP-task must block and wait for its release. At this point, the scheduler context-switches back to the LP-task. Priority inversion begins at time t3. At time t4, the LP-task releases the shared resource, which triggers preemption and allows the HP-task to resume execution. Priority inversion ends at time t4. The HP-task completes at time t5, which allows the LP-task to resume execution and finally complete at time t6.
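The timeline above can be reproduced with a small tick-based simulation. This is only a sketch under simplifying assumptions: one CPU, one shared resource, and tasks described as strings of one-tick steps, where `r` runs without the resource and `c` runs while holding it. The function name `simulate` and the step durations are hypothetical and are not taken from Figure 16.6.

```python
def simulate(tasks):
    """Return one character per tick naming the task that ran ('-' if idle).

    tasks maps a one-letter name to (priority, arrival_tick, steps); a larger
    priority number means a higher priority.
    """
    remaining = {name: list(steps) for name, (_, _, steps) in tasks.items()}
    by_priority = sorted(tasks, key=lambda n: tasks[n][0], reverse=True)
    owner = None  # task currently holding the shared resource
    trace = []
    tick = 0
    while any(remaining.values()):
        ran = "-"
        for name in by_priority:
            steps = remaining[name]
            if not steps or tasks[name][1] > tick:
                continue  # finished, or not yet eligible to run
            if steps[0] == "c" and owner not in (None, name):
                continue  # blocked: the resource is locked by another task
            if steps[0] == "c":
                owner = name  # lock (or keep) the resource
            steps.pop(0)
            if owner == name and (not steps or steps[0] != "c"):
                owner = None  # critical section is over: release the resource
            ran = name
            break
        trace.append(ran)
        tick += 1
    return "".join(trace)

timeline = simulate({
    "H": (2, 2, "rccr"),    # high priority, becomes eligible at tick 2
    "L": (1, 0, "rccccr"),  # low priority, locks the resource at tick 1
})
print(timeline)  # LLHLLLHHHL
```

In the printed trace, the three `L` ticks after the first `H` are the priority inversion interval: the HP-task is eligible to run but waits while the LP-task finishes its critical section.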
