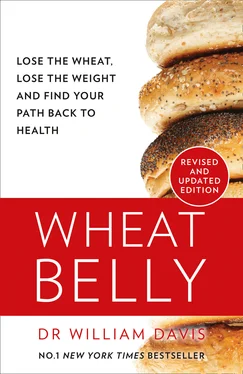

Wheat has been an undeniable financial success. How many other ways can a manufacturer transform a dime’s worth of raw material into $3.99 worth of glitzy, consumer-friendly product, topped off with endorsements from the American Heart Association? In most cases, the cost of marketing these products exceeds the cost of the ingredients themselves.

Foods made partly or entirely of wheat for breakfast, lunch, dinner, and snacks have become the rule. Indeed, such a regimen would make the USDA, the Whole Grains Council, the Whole Wheat Council, the Academy of Nutrition and Dietetics, the American Diabetes Association, and the American Heart Association happy, knowing that their message to eat more “healthy whole grains” has gained a wide and eager following.

So why has this seemingly benign plant that sustained generations of humans suddenly turned on us? For one thing, it is not the same grain our forebears ground into their daily bread. Wheat naturally evolved to only a modest degree over the centuries, but it has changed dramatically in the past sixty years under the influence of agricultural scientists. Wheat strains have been hybridized, crossbred, and chemically mutated to make the wheat plant resistant to environmental conditions such as drought, to pathogens such as fungi, and to herbicides. But most of all, genetic changes have been introduced to increase yield per acre. The average yield on a modern North American farm is more than tenfold greater than that of farms a century ago. Such enormous strides in yield have required drastic changes in the plant’s genetic makeup, reducing the proud “amber waves of grain” of yesteryear to the rigid, stocky, eighteen-inch-tall, high-production “semi-dwarf” wheat of today. Such fundamental genetic changes, as you will see, have come at a price for the unwitting creatures who consume it.

Even in the few decades since your grandmother survived Prohibition and danced the Big Apple, wheat has undergone countless transformations. As the science of genetics has progressed over the past sixty years, permitting human intervention to unfold much more rapidly than nature’s slow, year-by-year breeding influence, the pace of change has increased exponentially. The genetic backbone of a high-tech poppy seed muffin has achieved its current condition by a process of evolutionary acceleration for agricultural advantage that makes us look like pre-humans trapped somewhere in the early Pleistocene.

FROM NATUFIAN PORRIDGE TO DONUT HOLES

“Give us this day our daily bread.”

It’s in the Bible. In Deuteronomy, Moses describes the Promised Land as “a land of wheat and barley and vineyards.” Bread is central to religious ritual. Jews celebrate Passover with unleavened matzo to commemorate the flight of the Israelites from Egypt. Christians consume wafers representing the body of Christ. Muslims regard unleavened naan as sacred, insisting it be stored upright and never thrown away in public. In the Bible, bread is a metaphor for bountiful harvest, times of plenty, freedom from starvation, even salvation.

Don’t we break bread with friends and family? Isn’t something new and wonderful “the best thing since sliced bread”? “Taking the bread out of someone’s mouth” is to deprive that person of a fundamental necessity. Bread is a nearly universal diet staple: chapati in India, tsoureki in Greece, pita in the Middle East, aebleskiver in Denmark, naan bya for breakfast in Burma, glazed donuts any old time in the United States.

The notion that a foodstuff so fundamental, so deeply ingrained in the human experience, can be bad for us is, well, unsettling and counter to long-held cultural views. But today’s bread bears little resemblance to the loaves that emerged from our forebears’ ovens. Just as a modern Napa Cabernet Sauvignon is a far cry from the crude ferment of fourth-century BC Georgian winemakers who buried wine urns in underground mounds, so has wheat changed. Bread and other foods made of wheat may have helped sustain humans for centuries (albeit at a chronic health price, as I shall discuss), but the wheat of our ancestors is not the same as the modern commercial wheat that reaches your breakfast, lunch, and dinner table. From original strains of wild grass harvested by early humans, wheat has exploded to more than 25,000 varieties, virtually all of them the result of human intervention.

In the waning days of the Pleistocene, around 8500 BC, millennia before any Christian, Jew, or Muslim walked the earth, before the Egyptian, Greek, and Roman empires, the Natufians led a semi-nomadic life roaming the Fertile Crescent (now Syria, Jordan, Lebanon, Israel, and Iraq), supplementing hunting and gathering by harvesting indigenous plants. They harvested the ancestor of modern wheat, einkorn, from fields that flourished wildly in open plains. Meals of gazelle, boar, fowl, and ibex were rounded out with dishes of wild-growing grain and fruit. Relics like those excavated at the Tell Abu Hureyra settlement in what is now central Syria suggest skilled use of tools such as sickles and mortars to harvest and grind grains, as well as storage pits for stockpiling harvested food. Remains of harvested wheat have been found at archaeological digs in Tell Aswad, Jericho, Nahal Hemar, Nevalı Çori, and other locales. Wheat was ground by hand, then eaten as porridge. The modern concept of bread leavened by yeast would not come along for several thousand years.

Natufians harvested wild einkorn wheat and stored seeds to sow in areas of their own choosing the following season. Einkorn wheat eventually became an essential component of the Natufian diet, reducing the need for hunting and gathering. The shift from harvesting wild grain to cultivating it from one season to the next was a fundamental change that shaped subsequent human migratory behavior, as well as the development of tools, language, and culture. It marked the beginning of agriculture, a lifestyle that required long-term commitment to permanent settlement, a turning point in the course of human civilization. Growing grains and other foods yielded a surplus of food that allowed for occupational specialization, government, and all the elaborate trappings of culture (while, in contrast, the absence of agriculture arrested the development of other cultures in a lifestyle of nomadic hunting and gathering).

Over most of the ten thousand years that wheat has occupied a prominent place in the caves, huts, and adobes, and on the tables of humans, what started out as harvested einkorn, then emmer, followed by cultivated Triticum aestivum, changed gradually and only in fits and starts. The wheat of the seventeenth century was the wheat of the eighteenth century, which in turn was much the same as the wheat of the nineteenth century and the first half of the twentieth century. Riding your oxcart through the countryside during any of these centuries, you’d see fields of five-foot-tall “amber waves of grain” swaying in the breeze. Crude human wheat-breeding efforts yielded hit-and-miss, year-over-year incremental modifications, some successful, most not, and even a discerning eye would be hard-pressed to tell the wheat of early twentieth-century farming from its centuries of predecessors.

During the nineteenth and early twentieth centuries, as in many preceding centuries, wheat therefore changed little. The Pillsbury’s Best XXXX flour my grandmother used to make her famous sour cream muffins in 1940 was little different from the flour of her great-grandmother sixty years earlier or, for that matter, from that of a distant relative two or three centuries before that. Grinding of wheat became mechanized in the twentieth century, yielding finer flour on a larger scale, but the basic composition of the flour remained much the same.
