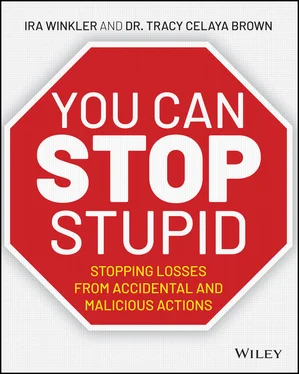

Everyone has experience with inadequate training and can relate to the fact that such training results in loss. Fortunately, training can be strengthened to make it more effective. Chapter 15, “Creating Effective Awareness Programs,” addresses the improvement of training.

Technology Implementation

As we talk about UIL, it is important to consider contributing factors to those losses. Everyone has experience with difficult-to-use systems that inevitably contribute to loss of some type. Some systems cause typographical errors that cause people to transfer the wrong amount of money. Some navigational systems cause drivers to go to the wrong destination or to drive the wrong way on a one-way street.

Some user interfaces contribute to users making security-related mistakes. For example, one of the most common security-related losses results from autocomplete in the Recipients field of an email. People frequently choose the wrong recipient from among similar names. In one case, after one of our employees left our organization to work for a client and solicited bids from a variety of vendors, we received a proposal from a competitor. The competitor apparently used an old email address to send the proposal to our former employee. In another case, a client emailed a request for proposals to various organizations, including us and our competitors. One competitor mistakenly clicked Reply All, and all potential bidders received a copy of its proposal.

These are minor issues compared to some more serious losses. For example, as mentioned earlier, two Boeing 737 MAX airplanes crashed, one in 2018 and one in 2019. In both cases, Boeing initially attributed the crashes to pilot error, but it appeared that malfunctions of a mechanical device, an angle of attack (AOA) sensor, caused the computer system to force the plane to descend rapidly. There are two AOA sensors on each plane, and computer systems in similar Boeing 737 models would let the pilot know that there was a discrepancy between the readings of the two sensors. In the 737 MAX airplanes, the warning for the discrepancy was removed and made an optional feature, and pilots were not properly warned about the differing functionality. (See “Boeing Waited Until After Lion Air Crash to Tell Southwest Safety Alert Was Turned Off on 737 Max,” CNBC, www.cnbc.com/2019/04/28/boeing-didnt-tell-southwest-that-safety-feature-on-737-max-was-turned-off-wsj.html.) Clearly, even if there was some pilot error involved, it was enabled by the technological implementation of the system.

There are many aspects of technological implementation that contribute to UIL. The following sections examine design and maintenance, user enablement, shadow IT, and user interfaces.

Design and Maintenance

A wide variety of decisions are made in the implementation of technology. These design decisions drive the interactions and capabilities provided to the end users. Although it is easy to blame end users when they commit an act that leads to damage, if the design of the system leads them to commit the harmful action, it is hard to attribute the blame solely to the end user. Such is the case in attempting to blame the Lion Air and Ethiopian Airlines pilots of the doomed Boeing 737 MAX airplanes.

In the implementation of technology, there are many common design issues that essentially automate loss. Programming errors can cause the crash of major computer systems. If this happens to a financial institution, transactions can be blocked for hours. If it happens to an airline's schedule systems, planes can be grounded until the problem is resolved.

People maintaining systems fail to properly maintain and update them for a variety of reasons. Trucks that are not properly maintained will break down. Computers that are not properly maintained may crash or be more easily hacked. Such was the case with the Equifax hack. As we described earlier, one administrator failed to update one system, which allowed the criminals into their infrastructure. Other failings, including a simple maintenance function of renewing a digital certificate, which is essentially paying a fee, caused the data breach to go undetected. No end user initiated a loss in this case, but other people initiated the loss through their actions, and as we explained in Chapter 2, anyone who interacts with the system should be considered to be a user who can initiate loss.
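Routine maintenance tasks such as certificate renewal are easy to forget precisely because they are routine, so they are good candidates for automated reminders. The following is a minimal sketch, not a description of what any particular organization runs. It assumes a hypothetical inventory of certificates with known expiry dates; the `Certificate` class, the `expiring_certificates` function, and the 30-day warning window are all illustrative names and values.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Certificate:
    """One entry in a hypothetical certificate inventory."""
    host: str
    not_after: datetime  # expiry timestamp (timezone-aware)


def expiring_certificates(inventory, now, warn_days=30):
    """Return (host, days_left) pairs for certificates that have already
    expired or will expire within warn_days, most urgent first.

    A negative days_left means the certificate has already expired.
    """
    cutoff = now + timedelta(days=warn_days)
    flagged = [
        (cert.host, (cert.not_after - now).days)
        for cert in inventory
        if cert.not_after <= cutoff
    ]
    return sorted(flagged, key=lambda pair: pair[1])
```

Run on a schedule against a maintained inventory, a check like this turns a forgotten renewal into a visible alert. It only helps if someone owns the inventory and acts on the report, which is a process question as much as a technical one.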

Another category of loss that many professionals fail to consider is the disposal of equipment. Just about all technology seems to have local storage. Before organizations discard computers, they generally know to remove the storage drives. Many people know that they should delete everything on their cellphones. However, many organizations and individuals fail to consider that the same diligence should apply to printers, copy machines, and other devices that had access to the organization's network or data.

If a loss results from the decisions, actions, or inactions of a person, it is a loss that you have to consider in your risk reduction plans, and that includes loss that relates to design and maintenance.

User Enablement

While you can expect end users to make mistakes or be malicious, you do not have to enable the mistakes or malice. Unfortunately, some technology teams are doing exactly that. It is a given that users have to be able to perform their required business functions. However, you can design a user's access and function to limit the amount of loss they can initiate.

As we discussed earlier, McDonald's eliminates the possibility for cashiers to steal or miscount money by removing the cashier from the process. Similarly, ransomware is a constant problem for organizations, but that problem can be greatly reduced by not providing users with administrator privileges on their computer systems. Without administrator privileges, most new software, including most malicious software, cannot be installed on a computer.

There are limits to any measures that you employ to reduce user enablement. Some malware can work around the lack of administrator privileges. While the elimination of cashiers eliminates the risk of cashier theft, it also increases the risk posed by the people maintaining the kiosks, including those who count the cash collected by the kiosks. Even so, there is a significant reduction in the overall risk.

Just as users rarely need administrator privileges on their computers, they are frequently provided with much more technological access and capability than they require to do their jobs. In one extreme example, Chelsea Manning was a U.S. Army intelligence analyst in an obscure facility in Iraq. Manning was allowed to download massive amounts of data from SIPRNet, which is a communications network used by the U.S. Department of Defense and U.S. Department of State for data classified up to the SECRET level. Manning had access to data well beyond what her job function required. Some might argue that Manning's excessive access was part of an effort to ensure intelligence analysts had access to needed information and that compartmentalization of data was a contributing factor in the 9/11 failures. However, in the case of Manning, such access was not implemented with the appropriate security controls (see abcnews.go.com/US/top-brass-held-responsible-bradley-mannings-wikileaks-breach/story?id=12276038). After all, the United States has been dealing with insider threats since Benedict Arnold. Examples like Manning's excessive information access are not unique to the military, and they're often even worse in commercial organizations.

In college, author Ira Winkler worked for his college's admissions office and was responsible for recording admission statuses in the college's mainframe computer. He realized that he also had menu options that provided access to the school registrar's system, which maintained grades. Although he never abused the access, you can assume that other people were not as ethical. You can also assume that many people in other university offices with access to legitimate functions also had excessive access privileges. As you can see, such information access is a combination of both technology and process.
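One way to find this kind of excessive access is to compare what each account has been granted against what its job function actually requires. The following is a minimal sketch under stated assumptions: the role names, the permission strings, and the `excess_permissions` and `audit` functions are all hypothetical, and a real review would pull grants from the organization's own identity or access management system.

```python
# Hypothetical role definitions: the permissions each job function needs.
ROLE_REQUIRES = {
    "admissions_clerk": {"admissions:read", "admissions:write"},
    "registrar_clerk": {"grades:read", "grades:write"},
}


def excess_permissions(role, granted, role_requires=ROLE_REQUIRES):
    """Return the permissions granted beyond what the role requires.

    An unknown role requires nothing, so everything granted to it
    is reported as excess.
    """
    required = role_requires.get(role, set())
    return set(granted) - required


def audit(users, role_requires=ROLE_REQUIRES):
    """Map each user name to a sorted list of excess permissions.

    users maps a name to a (role, granted_permissions) pair.
    Users with no excess access are left out of the report.
    """
    report = {}
    for name, (role, granted) in users.items():
        extra = excess_permissions(role, granted, role_requires)
        if extra:
            report[name] = sorted(extra)
    return report
```

In the admissions office example, an account with the admissions role and grade permissions would show up in the report with the grade permissions listed as excess. Deciding what each role truly requires is the process half of the problem; the comparison itself is the easy, technical half.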
