Since IoT will be among the largest sources of new data, data analysis will make a major contribution to making IoT applications more intelligent. Data analysis combines several scientific fields and uses data mining, machine learning, and other techniques to find patterns and new insights in data. These techniques include a wide range of algorithms suited to specific domains. Applying data analysis techniques to a domain involves characterizing the data in terms of, for example, volume, variety, and velocity; selecting data models, for example, neural networks, classification, and clustering methods; and applying efficient algorithms that match the characteristics of the data [4]. The reviews point to three conclusions. First, since data are generated by different sources with different data types, it is important to adopt or develop algorithms that can handle the characteristics of the data. Second, the large number of sources that generate data continuously raises challenges of scale and velocity. Finally, finding the data model that best fits the data is the main issue for pattern recognition and better analysis of IoT data.
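As a minimal illustration of the clustering methods mentioned above, the sketch below runs k-means on a handful of two-dimensional sensor readings. The readings, the choice of two clusters, and the initial centroids are assumptions made for illustration; k-means is only one common clustering algorithm and is not presented as the method used in [4]. It relies only on the Python standard library (`math.dist` requires Python 3.8 or later).

```python
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def nearest(p: Point, centroids: Sequence[Point]) -> int:
    """Return the index of the centroid closest to p (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda k: dist(p, centroids[k]))


def kmeans(points: Sequence[Point], init: Sequence[Point],
           max_iter: int = 20) -> Tuple[List[Point], List[int]]:
    """Cluster points with k-means, starting from the given initial centroids.

    Returns (centroids, labels), where labels[i] is the cluster index of points[i].
    """
    centroids: List[Point] = [tuple(c) for c in init]
    labels: List[int] = [0] * len(points)
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        labels = [nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        updated: List[Point] = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                updated.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                updated.append(old)  # an empty cluster keeps its previous centroid
        if updated == centroids:
            break  # centroids stopped moving, so the algorithm has converged
        centroids = updated
    return centroids, labels


# Hypothetical (temperature in degrees C, relative humidity in %) readings from six sensors.
readings = [(21.0, 40.0), (21.5, 42.0), (22.0, 41.0),
            (35.0, 20.0), (36.0, 22.0), (34.5, 21.0)]
centroids, labels = kmeans(readings, init=[readings[0], readings[3]])
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The two groups that emerge (cool and humid versus hot and dry) were never labeled in advance; the structure is found from the data alone.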

The purpose of IoT is to create smarter environments and a more streamlined lifestyle by saving time, energy, and money. Through this technology, costs in various industries can be reduced. Large investments and numerous studies on IoT have made it a growing trend in recent years. IoT consists of connected devices that can exchange data with one another to improve their performance [5]; these exchanges happen automatically, without human awareness or input. IoT involves four key components:

Sensors,

Processing networks,

Data analysis, and

System monitoring.

The most recent advances in IoT began when RFID tags came into wider use, lower-cost sensors became more widely available, web technology matured, and communication protocols evolved. IoT integrates a variety of technologies, and network connectivity is a necessary and sufficient condition for it to work. Communication protocols are therefore components of this technology that must be improved. Processing and analyzing the data carried by these communications is a major challenge. To meet it, different types of data processing, for example, analytics at the edge, stream analysis, and IoT analysis at the database, must be applied. Which of these methods to use depends on the application and its needs. Fog and cloud processing are two analytical approaches used to process and prepare data before transferring it to other things. The overall IoT workflow can be summarized as follows. First, sensors and IoT devices collect data from the environment. Next, useful information is extracted from the raw data. Finally, the data are made ready for transfer to other things, devices, or servers over the Internet [6].
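The three-step workflow just described (collect, extract, prepare for transfer) can be sketched as a small pipeline. The device identifier, the valid temperature range, and the JSON payload format are hypothetical choices made for illustration; actually sending the payload (for example over MQTT or HTTP) is left out.

```python
import json
from statistics import mean
from typing import Dict, List, Optional

# Hypothetical plausible range for an outdoor temperature sensor, in degrees Celsius.
VALID_RANGE = (-40.0, 85.0)


def collect(sensor_samples: List[Optional[float]]) -> List[Optional[float]]:
    """Step 1: gather raw samples from the environment (None marks a dropped sample)."""
    return list(sensor_samples)


def extract(raw: List[Optional[float]]) -> Dict[str, float]:
    """Step 2: keep only plausible samples and reduce them to a small summary.

    Assumes at least one sample survives the filter.
    """
    low, high = VALID_RANGE
    valid = [x for x in raw if x is not None and low <= x <= high]
    return {"count": len(valid), "mean": mean(valid), "min": min(valid), "max": max(valid)}


def prepare(device_id: str, summary: Dict[str, float]) -> bytes:
    """Step 3: serialize the summary into a payload ready to send over the network."""
    message = {"device": device_id, "summary": summary}
    return json.dumps(message, sort_keys=True).encode("utf-8")


raw = collect([21.0, None, 22.0, 150.0, 23.0])  # 150.0 simulates a faulty reading
payload = prepare("sensor-42", extract(raw))
print(payload.decode("utf-8"))
```

Only a few summary values leave the device instead of every raw sample, which is the point of extracting information before transfer.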

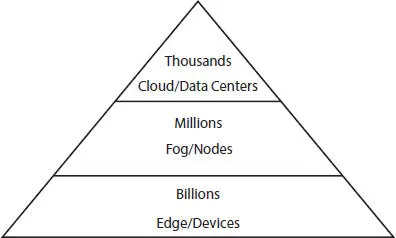

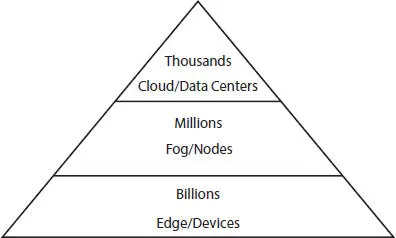

Figure 1.2 Fog computing and edge computing.

1.2.1 Computing Framework

Another important component of IoT is the computing framework used to process data; the best known are fog computing and cloud computing. IoT applications use both frameworks, depending on the application and where processing takes place. In some applications, data should be processed as soon as they are generated, whereas in others it is not necessary to process data immediately. As Figure 1.2 illustrates, the immediate processing of data, together with the network and architecture that support it, is known as fog computing. Collectively, these are associated with edge computing.

The fog computing architecture moves data processing from the data center to the edge servers, on which it is built. Fog computing provides limited computing, storage, and networking services, and it also provides analytical intelligence and data filtering for data centers. This architecture has been, and is being, deployed in critical areas such as e-health and military applications.
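The filtering role that fog computing plays for data centers can be sketched as follows: a fog node buffers readings from nearby sensors and forwards only compact summaries and alerts upstream. The class name, the alert threshold, and the summary format are assumptions made for illustration, not a standard fog computing API.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Reading = Tuple[str, float]  # (sensor_id, value)


class FogNode:
    """Hypothetical fog node sitting between edge sensors and a central data center."""

    def __init__(self, alert_threshold: float) -> None:
        self.alert_threshold = alert_threshold
        # Limited local storage: readings buffered per sensor for the current time window.
        self.window: Dict[str, List[float]] = defaultdict(list)

    def ingest(self, reading: Reading) -> None:
        """Accept one reading from a nearby sensor."""
        sensor_id, value = reading
        self.window[sensor_id].append(value)

    def flush(self) -> Dict[str, object]:
        """Filter and summarize the window, then clear it.

        Only per-sensor averages and the IDs of sensors that exceeded the threshold
        are returned, so less data travels to the data center than was received.
        """
        averages = {sid: sum(vals) / len(vals) for sid, vals in self.window.items()}
        alerts = sorted(sid for sid, vals in self.window.items()
                        if max(vals) > self.alert_threshold)
        self.window.clear()
        return {"averages": averages, "alerts": alerts}


node = FogNode(alert_threshold=50.0)
for r in [("s1", 20.0), ("s1", 22.0), ("s2", 55.0), ("s2", 45.0)]:
    node.ingest(r)
upstream = node.flush()  # payload destined for the data center
print(upstream)
```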

In the edge computing architecture, processing runs away from the core, toward the edge of the network [6]. This type of processing enables data to be processed first on edge devices. Edge devices may not be continuously connected to the network, so they need a copy of the master/reference data for offline processing. Edge devices offer several features, such as

Improving security,

Filtering and cleaning data, and

Storing data locally for local use.
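The offline processing described above, together with the cleaning and local-storage features in the list, can be sketched with a small class; security is not modeled. The class, the reference ranges, and the upload callback are hypothetical; in practice the callback would wrap whatever network client the device uses.

```python
from typing import Callable, Dict, List, Optional, Tuple

Record = Tuple[str, float]  # (sensor_id, value)


class EdgeDevice:
    """Hypothetical edge device that keeps processing while disconnected."""

    def __init__(self, reference: Dict[str, Tuple[float, float]]) -> None:
        # Local copy of the master/reference data: the valid range for each known sensor.
        self.reference = dict(reference)
        self.local_store: List[Record] = []  # data kept locally until it can be sent
        self.connected = False

    def process(self, sensor_id: str, value: Optional[float]) -> bool:
        """Clean one reading using only local reference data; store it if it is valid."""
        bounds = self.reference.get(sensor_id)
        if value is None or bounds is None:
            return False  # missing value or unknown sensor
        low, high = bounds
        if not low <= value <= high:
            return False  # out-of-range value is filtered out
        self.local_store.append((sensor_id, value))
        return True

    def sync(self, upload: Callable[[List[Record]], None]) -> int:
        """Send stored records upstream if the network is available; return the count sent."""
        if not self.connected:
            return 0
        batch = list(self.local_store)
        upload(batch)
        self.local_store.clear()
        return len(batch)


device = EdgeDevice({"temp": (-40.0, 85.0)})
device.process("temp", 21.5)    # kept
device.process("temp", 200.0)   # filtered: outside the reference range
sent: List[Record] = []
device.sync(sent.extend)        # offline: nothing is sent yet
device.connected = True
device.sync(sent.extend)        # back online: the stored record is uploaded
print(sent)
```

Because validation uses only the local copy of the reference data, the device can keep cleaning and storing readings for as long as the connection is down.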

In the cloud computing architecture, data to be processed are sent to data centers and become accessible after being analyzed and processed. This architecture has high latency and a heavy load-balancing burden, which makes it inadequate for processing IoT data, since most of that processing must run at high speed. The volume of this data is large, and big data processing increases the CPU utilization of the cloud servers.

1.2.5 Distributed Computing

This architecture is designed for processing large volumes of data. In IoT applications, because sensors generate data frequently, big data challenges arise [7]. To address this, distributed computing divides the data into packets and assigns the packets to different computers for processing. Distributed computing is supported by various frameworks, such as Hadoop and Spark. When moving from cloud computing to fog and distributed computing, the following effects occur:

1 A decrease in network load,

2 An increase in data processing speed,

3 A reduction in CPU usage,

4 A reduction in energy consumption, and

5 An ability to process a larger volume of data.
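The divide-and-assign idea behind distributed computing can be sketched as a map-reduce computation of an average. This illustrates the pattern only and is not the Hadoop or Spark API: worker threads from Python's standard library stand in for separate computers, and the packet size and worker count are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple


def split_into_packets(data: Sequence[float], packet_size: int) -> List[Sequence[float]]:
    """Divide the data set into fixed-size packets (the last one may be shorter)."""
    return [data[i:i + packet_size] for i in range(0, len(data), packet_size)]


def process_packet(packet: Sequence[float]) -> Tuple[float, int]:
    """Map step run by one worker: a partial (sum, count) for its packet."""
    return sum(packet), len(packet)


def distributed_mean(data: Sequence[float], packet_size: int = 4, workers: int = 3) -> float:
    """Compute a mean by farming packets out to workers and combining partial results."""
    packets = split_into_packets(data, packet_size)
    # Threads stand in for separate machines; a real cluster would use Hadoop, Spark, etc.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(process_packet, packets))
    # Reduce step: merge the partial results into one answer.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


readings = [float(i) for i in range(1, 11)]  # hypothetical sensor values 1.0 .. 10.0
print(distributed_mean(readings))  # 5.5
```

Returning (sum, count) pairs instead of per-packet means keeps the combined result exact even when the last packet is shorter than the others.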

Since the smart city is one of the primary applications of IoT, the most important smart city use cases and their data characteristics are discussed in the following sections.

1.3 Machine Learning Applied to Data Analysis

Machine learning has become increasingly important for data analysis, as it has for a great many other fields. A defining characteristic of machine learning is the ability of a model to be trained on a set of representative data and then later used to solve similar problems. There is no need to explicitly program an application to solve the problem. A model is a representation of a real-world object. For example, customer purchases can be used to train a model. Predictions can then be made about the purchases a customer is likely to make next. This allows an organization to tailor advertisements and coupons for a customer and possibly provide a better customer experience. As Figure 1.3 shows, training can be performed in one of several different ways:

Supervised learning: The model is trained with annotated (labeled) data showing the correct results.

Unsupervised learning: The data do not contain results; instead, the model is expected to discover relationships on its own.

Semi-supervised learning: A small amount of labeled data is combined with a larger amount of unlabeled data.

Reinforcement learning: This is similar to supervised learning, except that a reward is provided for good results.
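To make the supervised case concrete in terms of the customer-purchase example, the sketch below trains a nearest-centroid classifier on labeled data and then predicts a label for a new customer. Nearest-centroid is chosen only because it fits in a few lines; the features, labels, and values are hypothetical.

```python
from math import dist
from typing import Dict, List, Sequence, Tuple

Features = Tuple[float, ...]


def train(samples: Sequence[Features], labels: Sequence[str]) -> Dict[str, Features]:
    """Supervised training: learn one centroid (mean feature vector) per labeled class."""
    grouped: Dict[str, List[Features]] = {}
    for features, label in zip(samples, labels):
        grouped.setdefault(label, []).append(features)
    return {label: tuple(sum(axis) / len(rows) for axis in zip(*rows))
            for label, rows in grouped.items()}


def predict(model: Dict[str, Features], x: Features) -> str:
    """Assign the label of the closest class centroid."""
    return min(model, key=lambda label: dist(x, model[label]))


# Hypothetical training data: (store visits per month, average basket value) per customer,
# labeled by whether the customer redeemed a past coupon.
customers = [(1.0, 10.0), (2.0, 12.0), (8.0, 60.0), (9.0, 55.0)]
redeemed = ["no", "no", "yes", "yes"]
model = train(customers, redeemed)
print(predict(model, (7.0, 50.0)))  # yes
```

Unlike the unsupervised setting, the labels ("yes"/"no") are supplied with the training data, and the model learns how to reproduce them for customers it has not seen.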
