THE BOTTOM LINE:

• If we one day succeed in building human-level AGI, this may trigger an intelligence explosion, leaving us far behind.

• If a group of humans manage to control an intelligence explosion, they may be able to take over the world in a matter of years.

• If humans fail to control an intelligence explosion, the AI itself may take over the world even faster.

• Whereas a rapid intelligence explosion is likely to lead to a single world power, a slow one dragging on for years or decades may be more likely to lead to a multipolar scenario with a balance of power between a large number of rather independent entities.

• The history of life shows it self-organizing into an ever more complex hierarchy shaped by collaboration, competition and control. Superintelligence is likely to enable coordination on ever-larger cosmic scales, but it’s unclear whether it will ultimately lead to more totalitarian top-down control or more individual empowerment.

• Cyborgs and uploads are plausible, but arguably not the fastest route to advanced machine intelligence.

• The climax of our current race toward AI may be either the best or the worst thing ever to happen to humanity, with a fascinating spectrum of possible outcomes that we’ll explore in the next chapter.

• We need to start thinking hard about which outcome we prefer and how to steer in that direction, because if we don’t know what we want, we’re unlikely to get it.

* As Bostrom has explained, the ability to simulate a leading human AI developer at a much lower cost than his/her hourly salary would enable an AI company to scale up their workforce dramatically, amassing great wealth and recursively accelerating their progress in building better computers and ultimately smarter minds.

Chapter 5 Aftermath: The Next 10,000 Years

It is easy to imagine human thought freed from bondage to a mortal body—belief in an afterlife is common. But it is not necessary to adopt a mystical or religious stance to accept this possibility. Computers provide a model for even the most ardent mechanist.

Hans Moravec, Mind Children

I, for one, welcome our new computer overlords.

Ken Jennings, upon his Jeopardy! loss to IBM’s Watson

Humans will become as irrelevant as cockroaches.

Marshall Brain

The race toward AGI is on, and we have no idea how it will unfold. But that shouldn’t stop us from thinking about what we want the aftermath to be like, because what we want will affect the outcome. What do you personally prefer, and why?

1. Do you want there to be superintelligence?

2. Do you want humans to still exist, be replaced, cyborgized and/or uploaded/simulated?

3. Do you want humans or machines in control?

4. Do you want AIs to be conscious or not?

5. Do you want to maximize positive experiences, minimize suffering or leave this to sort itself out?

6. Do you want life spreading into the cosmos?

7. Do you want a civilization striving toward a greater purpose that you sympathize with, or are you OK with future life forms that appear content even if you view their goals as pointlessly banal?

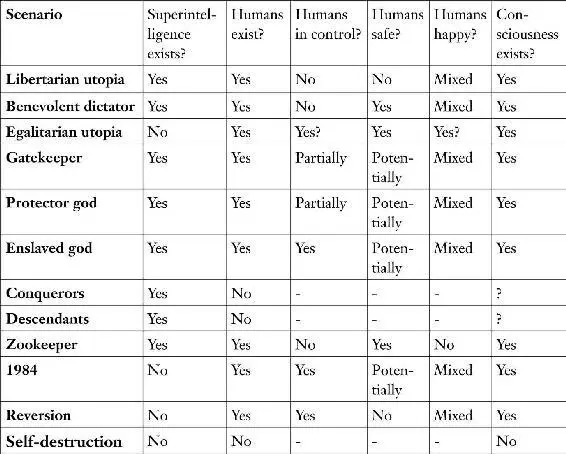

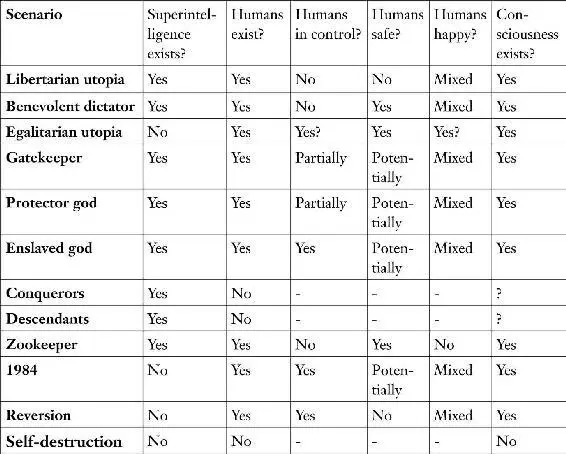

To help fuel such contemplation and conversation, let’s explore the broad range of scenarios summarized in table 5.1. This obviously isn’t an exhaustive list, but I’ve chosen it to span the spectrum of possibilities. We clearly don’t want to end up in the wrong endgame because of poor planning. I recommend jotting down your tentative answers to questions 1–7 and then revisiting them after reading this chapter to see if you’ve changed your mind! You can do this at http://AgeOfAi.org, where you can also compare notes and discuss with other readers.

AI Aftermath Scenarios

| Scenario | Description |
| --- | --- |
| Libertarian utopia | Humans, cyborgs, uploads and superintelligences coexist peacefully thanks to property rights. |
| Benevolent dictator | Everybody knows that the AI runs society and enforces strict rules, but most people view this as a good thing. |
| Egalitarian utopia | Humans, cyborgs and uploads coexist peacefully thanks to property abolition and guaranteed income. |
| Gatekeeper | A superintelligent AI is created with the goal of interfering as little as necessary to prevent the creation of another superintelligence. As a result, helper robots with slightly subhuman intelligence abound, and human-machine cyborgs exist, but technological progress is forever stymied. |
| Protector god | Essentially omniscient and omnipotent AI maximizes human happiness by intervening only in ways that preserve our feeling of control of our own destiny and hides well enough that many humans even doubt the AI’s existence. |
| Enslaved god | A superintelligent AI is confined by humans, who use it to produce unimaginable technology and wealth that can be used for good or bad depending on the human controllers. |
| Conquerors | AI takes control, decides that humans are a threat/nuisance/waste of resources, and gets rid of us by a method that we don’t even understand. |
| Descendants | AIs replace humans, but give us a graceful exit, making us view them as our worthy descendants, much as parents feel happy and proud to have a child who’s smarter than them, who learns from them and then accomplishes what they could only dream of, even if they can’t live to see it all. |
| Zookeeper | An omnipotent AI keeps some humans around, who feel treated like zoo animals and lament their fate. |
| 1984 | Technological progress toward superintelligence is permanently curtailed not by an AI but by a human-led Orwellian surveillance state where certain kinds of AI research are banned. |
| Reversion | Technological progress toward superintelligence is prevented by reverting to a pre-technological society in the style of the Amish. |
| Self-destruction | Superintelligence is never created because humanity drives itself extinct by other means (say nuclear and/or biotech mayhem fueled by climate crisis). |

Table 5.1: Summary of AI Aftermath Scenarios

Table 5.2: Properties of AI Aftermath Scenarios

Libertarian Utopia

Let’s begin with a scenario where humans peacefully coexist with technology and in some cases merge with it, as imagined by many futurists and science fiction writers alike:

Life on Earth (and beyond—more on that in the next chapter) is more diverse than ever before. If you looked at satellite footage of Earth, you’d easily be able to tell apart the machine zones, mixed zones and human-only zones. The machine zones are enormous robot-controlled factories and computing facilities devoid of biological life, aiming to put every atom to its most efficient use. Although the machine zones look monotonous and drab from the outside, they’re spectacularly alive on the inside, with amazing experiences occurring in virtual worlds while colossal computations unlock secrets of our Universe and develop transformative technologies. Earth hosts many superintelligent minds that compete and collaborate, and they all inhabit the machine zones.

The denizens of the mixed zones are a wild and idiosyncratic mix of computers, robots, humans and hybrids of all three. As envisioned by futurists such as Hans Moravec and Ray Kurzweil, many of the humans have technologically upgraded their bodies to cyborgs in various degrees, and some have uploaded their minds into new hardware, blurring the distinction between man and machine. Most intelligent beings lack a permanent physical form. Instead, they exist as software capable of instantly moving between computers and manifesting themselves in the physical world through robotic bodies. Because these minds can readily duplicate themselves or merge, the “population size” keeps changing. Being unfettered from their physical substrate gives such beings a rather different outlook on life: they feel less individualistic because they can trivially share knowledge and experience modules with others, and they feel subjectively immortal because they can readily make backup copies of themselves. In a sense, the central entities of life aren’t minds, but experiences: exceptionally amazing experiences live on because they get continually copied and re-enjoyed by other minds, while uninteresting experiences get deleted by their owners to free up storage space for better ones.
