Table 1.1: Many misunderstandings about AI are caused by people using the words above to mean different things. Here’s what I take them to mean in this book. (Some of these definitions will only be properly introduced and explained in later chapters.)

In addition to confusion over terminology, I’ve also seen many AI conversations get derailed by simple misconceptions. Let’s clear up the most common ones.

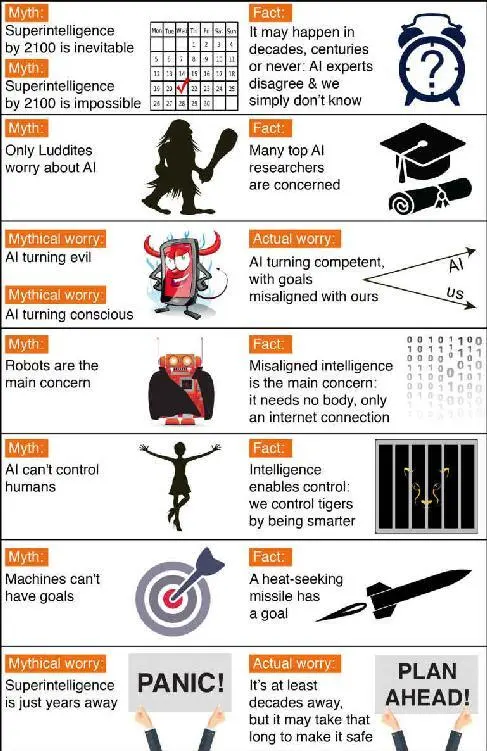

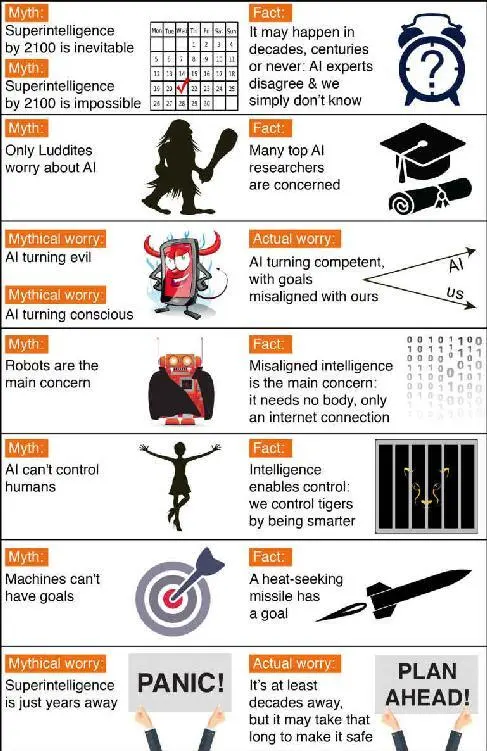

Timeline Myths

The first one regards the timeline from figure 1.2: how long will it take until machines greatly supersede human-level AGI? Here, a common misconception is that we know the answer with great certainty.

One popular myth is that we know we’ll get superhuman AGI this century. In fact, history is full of technological over-hyping. Where are those fusion power plants and flying cars we were promised we’d have by now? AI too has been repeatedly over-hyped in the past, even by some of the founders of the field: for example, John McCarthy (who coined the term “artificial intelligence”), Marvin Minsky, Nathaniel Rochester and Claude Shannon wrote this overly optimistic forecast about what could be accomplished during two months with stone-age computers: “We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College…An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.”

On the other hand, a popular counter-myth is that we know we won’t get superhuman AGI this century. Researchers have made a wide range of estimates for how far we are from superhuman AGI, but we certainly can’t say with great confidence that the probability is zero this century, given the dismal track record of such techno-skeptic predictions. For example, Ernest Rutherford, arguably the greatest nuclear physicist of his time, said in 1933—less than twenty-four hours before Leo Szilard’s invention of the nuclear chain reaction—that nuclear energy was “moonshine,” and in 1956 Astronomer Royal Richard Woolley called talk about space travel “utter bilge.” The most extreme form of this myth is that superhuman AGI will never arrive because it’s physically impossible. However, physicists know that a brain consists of quarks and electrons arranged to act as a powerful computer, and that there’s no law of physics preventing us from building even more intelligent quark blobs.

Figure 1.5: Common myths about superintelligent AI.

There have been a number of surveys asking AI researchers how many years from now they think we’ll have human-level AGI with at least 50% probability, and all these surveys have the same conclusion: the world’s leading experts disagree, so we simply don’t know. For example, in such a poll of the AI researchers at the Puerto Rico AI conference, the average (median) answer was by the year 2055, but some researchers guessed hundreds of years or more.

There’s also a related myth that people who worry about AI think it’s only a few years away. In fact, most people on record worrying about superhuman AGI guess it’s still at least decades away. But they argue that as long as we’re not 100% sure that it won’t happen this century, it’s smart to start safety research now to prepare for the eventuality. As we’ll see in this book, many of the safety problems are so hard that they may take decades to solve, so it’s prudent to start researching them now rather than the night before some programmers drinking Red Bull decide to switch on human-level AGI.

Controversy Myths

Another common misconception is that the only people harboring concerns about AI and advocating AI-safety research are Luddites who don’t know much about AI. When Stuart Russell mentioned this during his Puerto Rico talk, the audience laughed loudly. A related misconception is that supporting AI-safety research is hugely controversial. In fact, to support a modest investment in AI-safety research, people don’t need to be convinced that risks are high, merely non-negligible, just as a modest investment in home insurance is justified by a non-negligible probability of the home burning down.

My personal analysis is that the media have made the AI-safety debate seem more controversial than it really is. After all, fear sells, and articles using out-of-context quotes to proclaim imminent doom can generate more clicks than nuanced and balanced ones. As a result, two people who only know about each other’s positions from media quotes are likely to think they disagree more than they really do. For example, a techno-skeptic whose only knowledge about Bill Gates’ position comes from a British tabloid may mistakenly think he believes superintelligence to be imminent. Similarly, someone in the beneficial-AI movement who knows nothing about Andrew Ng’s position except his above-mentioned quote about overpopulation on Mars may mistakenly think he doesn’t care about AI safety. In fact, I personally know that he does—the crux is simply that because his timeline estimates are longer, he naturally tends to prioritize short-term AI challenges over long-term ones.

Myths About What the Risks Are

I rolled my eyes when seeing this headline in the Daily Mail:[3] “Stephen Hawking Warns That Rise of Robots May Be Disastrous for Mankind.” I’ve lost count of how many similar articles I’ve seen. Typically, they’re accompanied by an evil-looking robot carrying a weapon, and they suggest that we should worry about robots rising up and killing us because they’ve become conscious and/or evil. On a lighter note, such articles are actually rather impressive, because they succinctly summarize the scenario that my AI colleagues don’t worry about. That scenario combines as many as three separate misconceptions: concern about consciousness, evil and robots, respectively.

If you drive down the road, you have a subjective experience of colors, sounds, etc. But does a self-driving car have a subjective experience? Does it feel like anything at all to be a self-driving car, or is it like an unconscious zombie without any subjective experience? Although this mystery of consciousness is interesting in its own right, and we’ll devote chapter 8 to it, it’s irrelevant to AI risk. If you get struck by a driverless car, it makes no difference to you whether it subjectively feels conscious. In the same way, what will affect us humans is what superintelligent AI does, not how it subjectively feels.

The fear of machines turning evil is another red herring. The real worry isn’t malevolence, but competence. A superintelligent AI is by definition very good at attaining its goals, whatever they may be, so we need to ensure that its goals are aligned with ours. You’re probably not an ant hater who steps on ants out of malice, but if you’re in charge of a hydroelectric green energy project and there’s an anthill in the region to be flooded, too bad for the ants. The beneficial-AI movement wants to avoid placing humanity in the position of those ants.

The consciousness misconception is related to the myth that machines can’t have goals. Machines can obviously have goals in the narrow sense of exhibiting goal-oriented behavior: the behavior of a heat-seeking missile is most economically explained as a goal to hit a target. If you feel threatened by a machine whose goals are misaligned with yours, then it’s precisely its goals in this narrow sense that trouble you, not whether the machine is conscious and experiences a sense of purpose. If that heat-seeking missile were chasing you, you probably wouldn’t exclaim “I’m not worried, because machines can’t have goals!”
