This graph was used to show customers that the team was becoming consistent with their testing and their releases. Once the team and customers had faith the numbers were not going up, the metrics were no longer needed and were dropped.

—Janet

Don’t be afraid to stop using metrics when they are no longer useful. If the problem they were initially gathered for no longer exists, there is no reason to keep gathering them.

Your team may have to provide metrics to upper managers or a Project Management Office (PMO), especially if you work for a large organization. Patrick Fleisch, an Accenture Consultant who was working as a functional analyst at a software company during the time we wrote this book, gave us the following examples of metrics his team provides to their PMO.

Useful Iteration Metrics

Coni Tartaglia, a software test manager at Primavera Systems, Inc., explains some ways she has found to achieve useful iteration metrics.

Collecting metrics at the end of the iteration is particularly useful when many different teams are working on the same product releases. This helps ensure all teams end the iteration with the same standard for “done.” The teams should agree on what should be measured. What follows are some standards for potentially shippable software [Schwaber 2004], and different ways of judging the state of each one.

• Sprint deliverables are refactored and coded to standards.

Use a static analysis tool. Focus on data that is useful and actionable. Decide each sprint if corrective action is needed. For example, use an open source tool like FindBugs, and look for an increase each sprint in the number of priority one issues. Correct these accordingly.

• Sprint deliverables are unit tested.

For example, look at the code coverage results each sprint. Count the number of packages with unit test coverage falling into ranges of 0%–30% (low coverage), 31%–55% (average coverage), and 56%–100% (high coverage). Legacy packages may fall into the low coverage range, while coverage for new packages should fall into the 56%–100% range if you are practicing test-driven development. An increase in the number of packages in the high coverage range is desirable.

• Sprint deliverables have passing, automated acceptance tests.

Map automated acceptance tests to requirements in a quality management system. At the end of the iteration, generate a coverage report showing that all requirements selected as goals for the iteration have passing tests. Requirements that do not show passing test coverage are not complete. The same approach is easily executed using story cards on a bulletin board. The intent is simply to show that the agreed-upon tests for each requirement or story are passing at the end of the sprint.

• Sprint deliverables are successfully integrated.

Check the continuous integration build test results to ensure they are passing. Run other integration tests during the sprint. Make corrections prior to the beginning of the next iteration. Hesitate to start a new iteration if integration tests are failing.

• Sprint deliverables are free of defects.

Requirements completed during the iteration should be free of defects.

• Can the product ship in [30] days?

Simply ask yourself this question at the end of each iteration, and proceed into the next iteration according to the answer.

Metrics like this are easy to collect and easy to analyze, and can provide valuable opportunities to help teams correct their course. They can also confirm the engineering standards the teams have put in place to create potentially shippable software in each iteration.
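As a rough illustration of two of the checks above, the following Python sketch counts packages per coverage range and lists requirements that lack passing tests. The input dictionaries, package names, and story IDs are hypothetical; a real team would pull this data from its own coverage tool and test management system. How a fractional value that falls between the stated ranges (for example 30.5%) is assigned is also an assumption.

```python
from collections import Counter


def coverage_bucket(percent: float) -> str:
    """Map a coverage percentage to the ranges named in the text.

    0-30 is low, 31-55 is average, 56-100 is high. Values between
    the stated ranges (such as 30.5) go to the lower bucket, which
    is an assumption made for this sketch.
    """
    if percent < 31:
        return "low"
    if percent < 56:
        return "average"
    return "high"


def count_packages_by_bucket(coverage_by_package: dict[str, float]) -> Counter:
    """Count how many packages fall into each coverage range."""
    return Counter(coverage_bucket(p) for p in coverage_by_package.values())


def incomplete_requirements(results_by_requirement: dict[str, list[bool]]) -> list[str]:
    """Return requirements that have no tests or at least one failing test."""
    return sorted(
        req
        for req, results in results_by_requirement.items()
        if not results or not all(results)
    )


# Hypothetical end-of-sprint data.
coverage = {
    "legacy.billing": 12.0,
    "legacy.reports": 30.0,
    "core.orders": 48.5,
    "api.payments": 91.0,
}
acceptance_results = {
    "STORY-1": [True, True],
    "STORY-2": [True, False],
    "STORY-3": [],
}

bucket_counts = count_packages_by_bucket(coverage)
not_done = incomplete_requirements(acceptance_results)
print(dict(bucket_counts))
print(not_done)
```

Comparing the bucket counts from one sprint to the next shows whether packages are moving toward the high coverage range, and an empty list of incomplete requirements matches the "passing, automated acceptance tests" standard.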

• Test execution numbers by story and functional area

• Test automation status (number of tests automated vs. manual)

• Line graph of the number of tests passing/failing over time

• Summary and status of each story

• Defect metrics
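A few of these numbers can be derived from a plain list of test results with very little code. The sketch below is one possible way to do that in Python; the `ResultRecord` type, its fields, and the sample stories are hypothetical and do not describe any particular team's tooling.

```python
from dataclasses import dataclass


@dataclass
class ResultRecord:
    """One executed test: the story it belongs to and how it was run."""
    story: str
    automated: bool
    passed: bool


def automation_status(records: list[ResultRecord]) -> tuple[int, int]:
    """Return (automated, manual) test counts."""
    automated = sum(1 for r in records if r.automated)
    return automated, len(records) - automated


def pass_fail_by_story(records: list[ResultRecord]) -> dict[str, tuple[int, int]]:
    """Return {story: (passed, failed)} test execution counts."""
    totals: dict[str, tuple[int, int]] = {}
    for r in records:
        passed, failed = totals.get(r.story, (0, 0))
        if r.passed:
            passed += 1
        else:
            failed += 1
        totals[r.story] = (passed, failed)
    return totals


# Hypothetical results from one test run.
records = [
    ResultRecord("login", automated=True, passed=True),
    ResultRecord("login", automated=False, passed=False),
    ResultRecord("search", automated=True, passed=True),
]

status = automation_status(records)
by_story = pass_fail_by_story(records)
print(status)
print(by_story)
```

Recording these totals after each run gives the data points for a passing/failing line graph over time.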

Gathering and reporting metrics such as these may result in significant overhead. Look for the simplest ways to satisfy the needs of your organization.

Summary

At this point in our example iteration, our agile tester works closely with programmers, customers, and other team members to produce stories in small testing-coding-reviewing-testing increments. Some points to keep in mind are:

• Coding and testing are part of one process during the iteration.

• Write detailed tests for a story as soon as coding begins.

• Drive development by starting with a simple test; when the simple tests pass, write more complex test cases to further guide coding.

• Use simple risk assessment techniques to help focus testing efforts.

• Use the “Power of Three” when requirements aren’t clear or opinions vary.

• Focus on completing one story at a time.

• Collaborate closely with programmers so that testing and coding are integrated.

• Tests that critique the product are part of development.

• Keep customers in the loop throughout the iteration; let them review early and often.

• Everyone on the team can work on testing tasks.

• Testers can facilitate communication between the customer team and development team.

• Decide which “bug fixing” approach works best for your team, but a good goal is to have no bugs by release time.

• Add new automated tests to the regression suite and schedule it to run often enough to provide adequate feedback.

• Manual exploratory testing helps find missing requirements after the application has been coded.

• Collaborate with other experts to get the resources and infrastructure needed to complete testing.

• Consider what metrics you need during the iteration; progress and defect metrics are two examples.

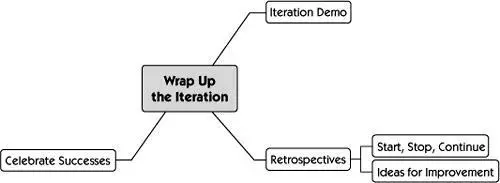

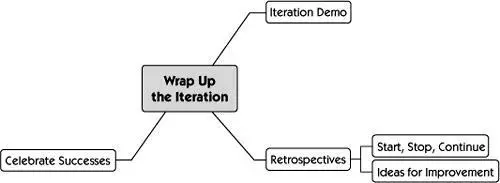

Chapter 19 Wrap Up the Iteration

We’ve completed an iteration. What do testers do as the team wraps up this iteration and prepares for the next? We like to focus on how we and the rest of our team can improve and deliver a better product next time.
