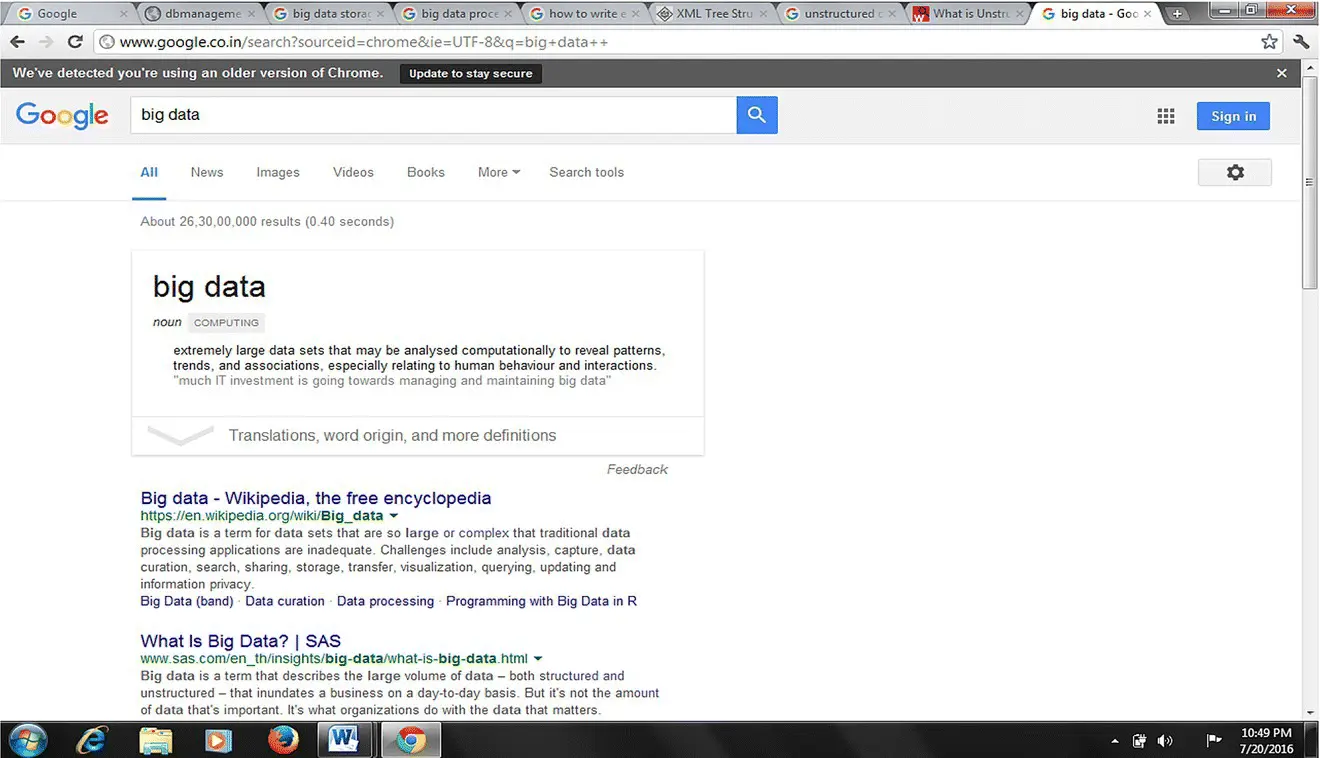

Data that are raw, unorganized, and do not fit into relational database systems are called unstructured data. Nearly 80% of the data generated are unstructured. Examples of unstructured data include video, audio, images, e‐mails, text files, and social media posts. Unstructured data usually reside in either text files or binary files. Data in binary files, such as audio, video, and images, have no identifiable internal structure. Data in text files include e‐mails, social media posts, PDF files, and word processing documents. Figure 1.8 shows unstructured data, the result of a Google search.

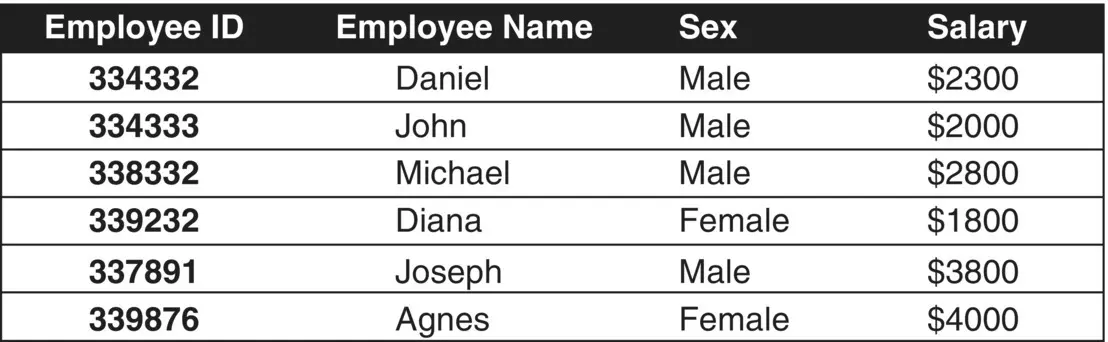

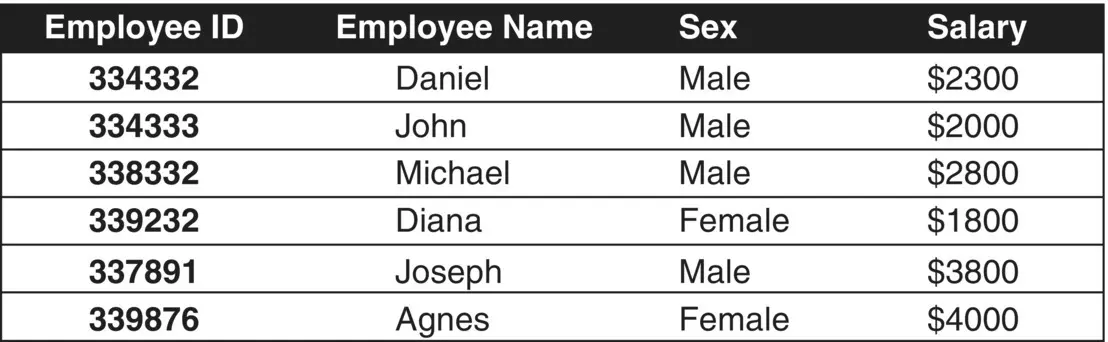

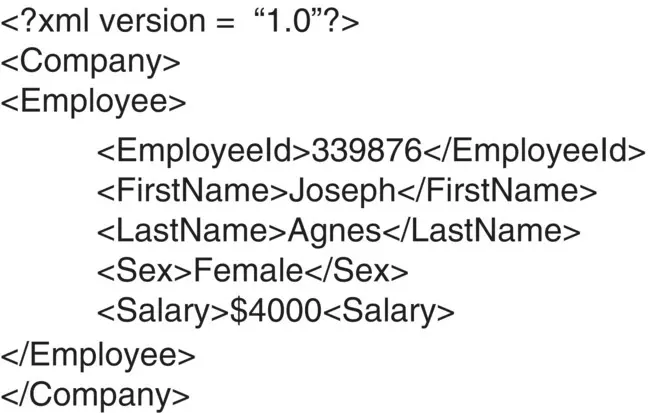

Figure 1.7 Structured data—employee details of an organization.

Figure 1.8 Unstructured data—the result of a Google search.

1.6.3 Semi‐Structured Data

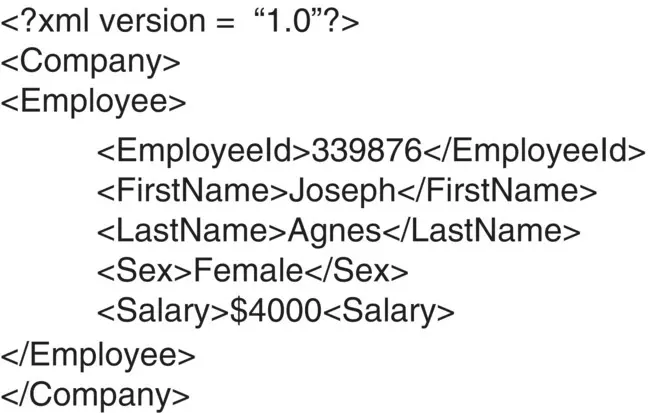

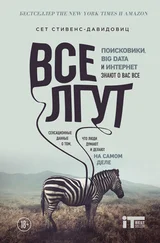

Semi‐structured data are those that have a structure but do not fit into a relational database. Because they are organized, they are easier to analyze than unstructured data. JSON and XML are examples of semi‐structured data formats. Figure 1.9 is an XML file that represents the details of an employee in an organization.

Figure 1.9 XML file with employee details.
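As a minimal sketch of why semi‐structured data are easier to analyze than unstructured data, the Python snippet below parses a small XML document with the standard library. The XML content, element names, and the `parse_employees` helper are hypothetical and are not taken from Figure 1.9. Note that the second record omits an optional element, which a fixed relational schema would not accommodate so easily.

```python
import xml.etree.ElementTree as ET

# Hypothetical employee records; the element names are assumptions,
# not the contents of Figure 1.9.
EMPLOYEE_XML = """
<employees>
  <employee id="101">
    <name>Asha Rao</name>
    <department>Finance</department>
    <skills>
      <skill>Accounting</skill>
      <skill>SQL</skill>
    </skills>
  </employee>
  <employee id="102">
    <name>Ben Ortiz</name>
    <department>Engineering</department>
  </employee>
</employees>
"""


def parse_employees(xml_text):
    """Return one dict per <employee>, tolerating missing optional fields."""
    root = ET.fromstring(xml_text.strip())
    records = []
    for emp in root.findall("employee"):
        records.append({
            "id": emp.get("id"),
            "name": emp.findtext("name"),
            "department": emp.findtext("department"),
            # An absent <skills> element simply yields an empty list.
            "skills": [s.text for s in emp.findall("skills/skill")],
        })
    return records


employees = parse_employees(EMPLOYEE_XML)
for record in employees:
    print(record)
```

The tags give each value a name, so fields can be extracted by path even though the records do not all share the same shape.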

1.7 Big Data Infrastructure

The core components of big data infrastructure are the tools and technologies that provide the capacity to store, process, and analyze the data. As data evolved along the 3 Vs, namely volume, velocity, and variety, storing data in tables was no longer adequate. The once robust RDBMS was no longer cost effective: scaling an RDBMS to store and process huge amounts of data became expensive. This led to the emergence of new technologies that are highly scalable at very low cost.

The key technologies include:

Hadoop

HDFS

MapReduce

Hadoop – Apache Hadoop, written in Java, is an open‐source framework that supports the processing of large data sets. It can store a large volume of structured, semi‐structured, and unstructured data in a distributed file system and process them in parallel. It is a highly scalable and cost‐effective storage platform. Scalability here refers to Hadoop's ability to sustain its performance under rapidly increasing loads by adding more nodes. Hadoop files are written once and read many times; the contents of a file cannot be changed after it is written. A large number of interconnected computers working together as a single system is called a cluster. Hadoop clusters are designed to store and analyze massive amounts of disparate data in distributed computing environments in a cost‐effective manner.

Hadoop Distributed File System – HDFS is designed to store large data sets with a streaming access pattern on low‐cost commodity hardware. It does not require highly reliable, expensive hardware. A data set is generated from multiple sources, stored in HDFS in a write‐once, read‐many‐times pattern, and then analyzed to extract knowledge from it.
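The write‐once, read‐many‐times pattern can be illustrated with a toy in‐memory store. This is only a sketch of the access semantics described above; the `WriteOnceStore` class and the file path are hypothetical, and nothing here is the HDFS API.

```python
class WriteOnceStore:
    """Toy in-memory store: each path is written once, then only read."""

    def __init__(self):
        self._files = {}

    def create(self, path, data):
        # Reject any attempt to change a file that already exists.
        if path in self._files:
            raise FileExistsError(f"{path} already exists and cannot be modified")
        self._files[path] = bytes(data)

    def read(self, path):
        # Reads are unrestricted and can be repeated any number of times.
        return self._files[path]


store = WriteOnceStore()
store.create("/logs/day1.txt", b"event-a\nevent-b\n")

first_read = store.read("/logs/day1.txt")
second_read = store.read("/logs/day1.txt")

try:
    store.create("/logs/day1.txt", b"changed")
    overwrite_rejected = False
except FileExistsError:
    overwrite_rejected = True

print(first_read == second_read, overwrite_rejected)
```

Because stored content never changes, every reader sees the same bytes, which is one reason this pattern suits repeated analysis of a large, fixed data set.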

MapReduce – MapReduce is the batch‐processing programming model for the Hadoop framework, and it adopts a divide‐and‐conquer principle. It is highly scalable, reliable, and fault tolerant, and it can process input data of any format in parallel, distributed computing environments, but it supports only batch workloads. It reduces processing time significantly compared with the traditional batch‐processing paradigm: the traditional approach moved the data from the storage platform to the processing platform, whereas MapReduce runs the computation within the framework where the data actually reside.
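The divide‐and‐conquer idea behind MapReduce can be sketched in a few lines of plain Python using a word count. This is a single‐process illustration under simplifying assumptions, not Hadoop code: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the input splits are hypothetical, and a real cluster would run the map and reduce tasks in parallel on the nodes that hold the data.

```python
from collections import defaultdict


def map_phase(split):
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped_pairs):
    """Group emitted values by key, as a framework would between phases."""
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)


def word_count(splits):
    # Each split is mapped independently; here the loop runs sequentially.
    mapped = []
    for split in splits:
        mapped.extend(map_phase(split))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


splits = [
    "big data yields big benefits",
    "big data needs scalable storage",
]
counts = word_count(splits)
print(counts)
```

Since each split is mapped without reference to any other, the map step can be distributed across machines, and only the grouped intermediate pairs need to move between nodes.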

Big data yields big benefits, from innovative business ideas to unconventional ways of treating diseases, provided its challenges are overcome. The challenges arise because so much data is collected by today's technology. Big data technologies are capable of capturing and analyzing these data effectively. Big data infrastructure involves new computing models capable of both distributed and parallel computation, with highly scalable storage and performance. Some of the big data components include Hadoop (framework), HDFS (storage), and MapReduce (processing).

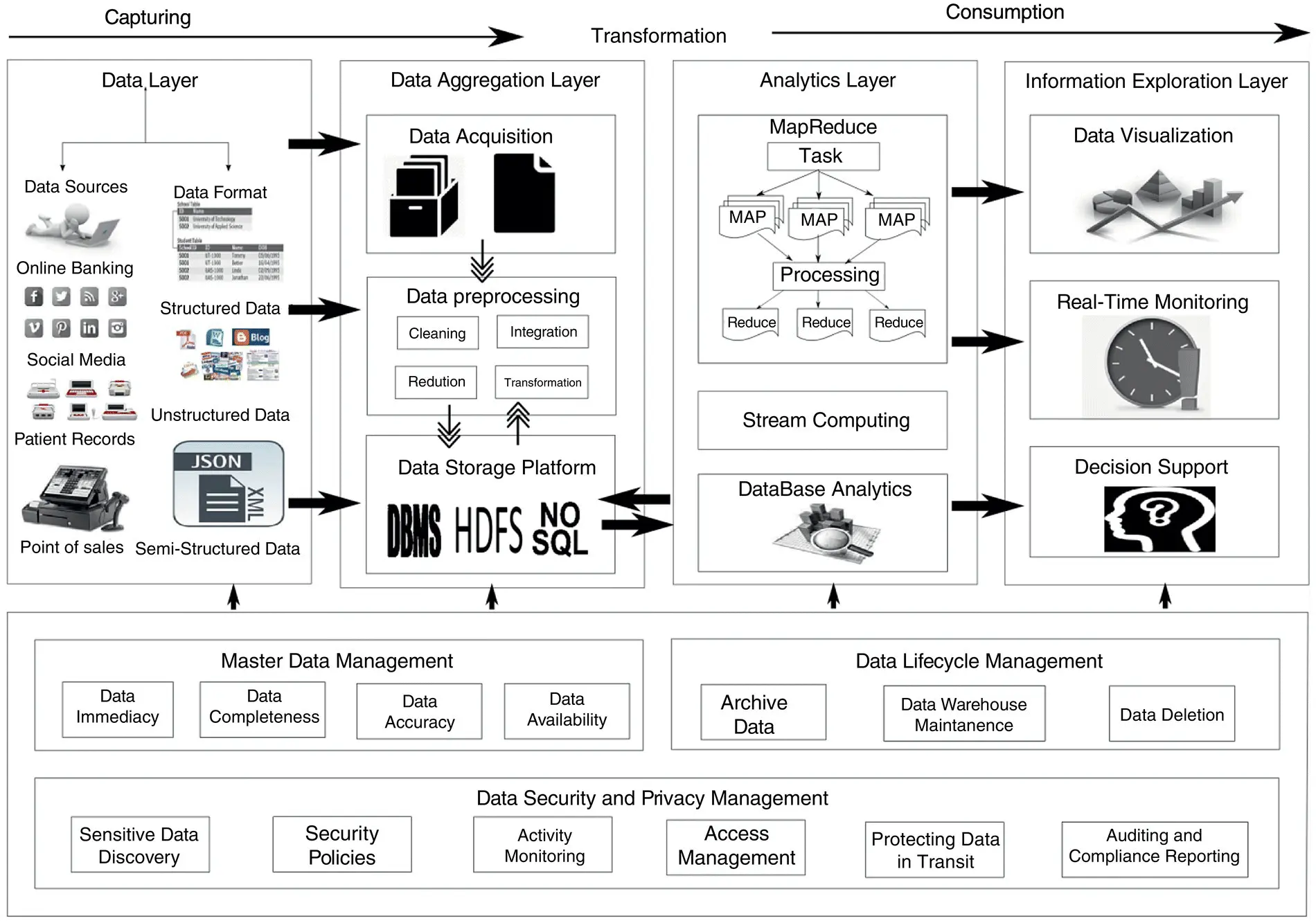

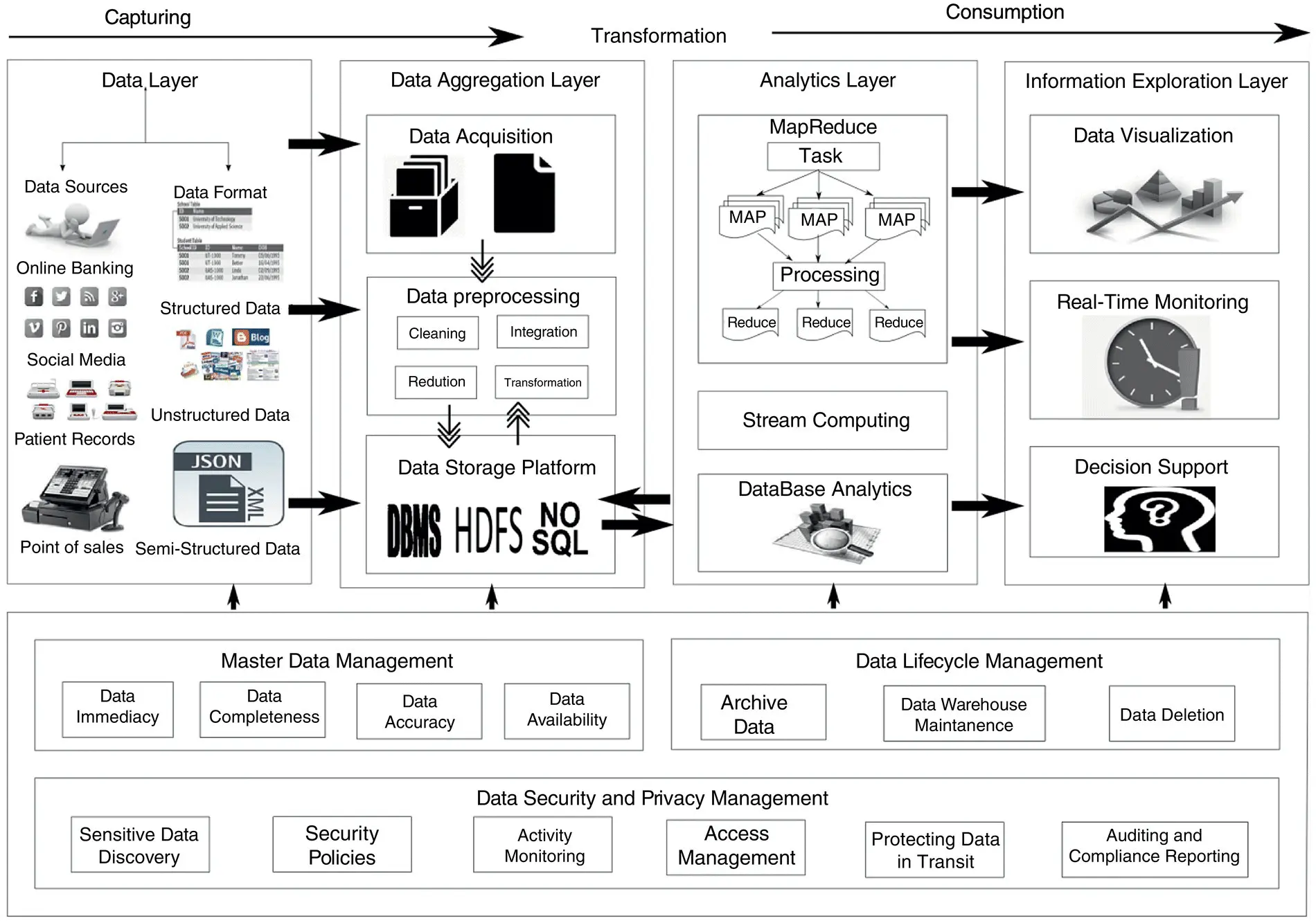

Figure 1.10 illustrates the big data life cycle. Data arriving at high velocity from multiple sources in different formats are captured. The captured data are stored in a storage platform such as HDFS or a NoSQL database and then preprocessed to make them suitable for analysis. The preprocessed data are then passed to the analytics layer, where they are processed using big data tools such as MapReduce and YARN, and analysis is performed on the processed data to uncover hidden knowledge. Analytics and machine learning are important concepts in the life cycle of big data. Text analytics is a type of analysis performed on unstructured textual data. With the growth of social media and e‐mail transactions, the importance of text analytics has surged. Predictive analysis of consumer behavior and consumer interest analysis are performed on text data extracted from online sources such as social media and online retailing websites. Machine learning has made text analytics possible. The analyzed data are presented visually with tools such as Tableau so that end users can understand them easily and make decisions.

1.8.1 Big Data Generation

The first phase of the big data life cycle is data generation. The scale of data generated from diverse sources is steadily expanding. The sources of this large volume of data were discussed in Section 1.5, “Sources of Big Data.”

Figure 1.10 Big data life cycle.

The data aggregation phase of the big data life cycle involves collecting the raw data, transmitting them to the storage platform, and preprocessing them. Data acquisition in the big data world means acquiring high‐volume data arriving at an ever‐increasing pace. The raw data thus collected are transmitted to a suitable storage infrastructure to support processing and various analytical applications. Preprocessing involves data cleansing, data integration, data transformation, and data reduction to make the data reliable, error free, consistent, and accurate. The data gathered may contain redundancies, which occupy storage space and increase storage cost; preprocessing can remove them. Also, much of the data gathered may not be related to the analysis objective, so the data need to be compressed during preprocessing. Hence, efficient data preprocessing is indispensable for cost‐effective and efficient data storage. The preprocessed data are then transmitted for purposes such as data modeling and data analytics.
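The preprocessing steps named above can be sketched on a handful of in‐memory records. This is a minimal illustration, assuming records arrive as Python dictionaries; the `preprocess` function, the field names, and the sample data are hypothetical, and data integration across sources is left out for brevity.

```python
def preprocess(records, required_fields, keep_fields):
    """Cleanse, transform, reduce, and de-duplicate a list of dict records."""
    seen = set()
    cleaned = []
    for record in records:
        # Cleansing: drop records that lack a required value.
        if any(record.get(field) in (None, "") for field in required_fields):
            continue
        # Transformation: normalize string values to a consistent form.
        normalized = {
            key: value.strip().lower() if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Reduction: keep only the fields relevant to the analysis objective.
        reduced = {key: normalized[key] for key in keep_fields if key in normalized}
        # Redundancy removal: skip records already seen after reduction.
        fingerprint = tuple(sorted(reduced.items()))
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(reduced)
    return cleaned


raw = [
    {"user": " Alice ", "item": "Book", "session": "s1"},
    {"user": "alice", "item": "book", "session": "s2"},  # redundant after cleanup
    {"user": "", "item": "Pen", "session": "s3"},        # missing required value
    {"user": "Bob", "item": "Pen", "session": "s4"},
]
clean = preprocess(raw, required_fields=["user", "item"], keep_fields=["user", "item"])
print(clean)
```

Four raw records shrink to two, which shows on a small scale how removing redundant and irrelevant data lowers the volume that must be stored.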
