I now have a public track record of more than a quarter of a century of predictions based on the law of accelerating returns, starting with those presented in The Age of Intelligent Machines, which I wrote in the mid-1980s. Examples of accurate predictions from that book include: the emergence in the mid- to late 1990s of a vast worldwide web of communications tying together people around the world to one another and to all human knowledge; a great wave of democratization emerging from this decentralized communication network, sweeping away the Soviet Union; the defeat of the world chess champion by 1998; and many others.

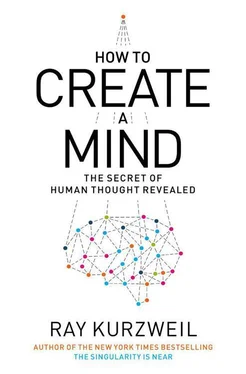

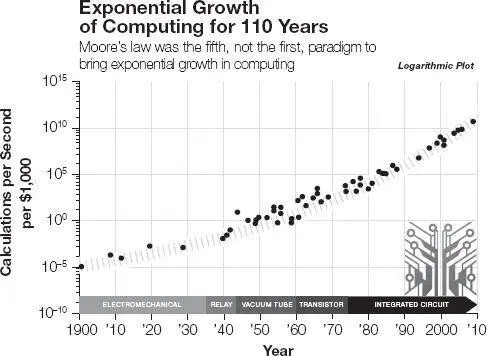

I described the law of accelerating returns, as it is applied to computation, extensively in The Age of Spiritual Machines, where I provided a century of data showing the doubly exponential progression of the price/performance of computation through 1998. That data is updated through 2009 in the charts below.

I recently wrote a 146-page review of the predictions I made in The Age of Intelligent Machines, The Age of Spiritual Machines, and The Singularity Is Near. (You can read the essay by following the link in this endnote.) 9 The Age of Spiritual Machines included hundreds of predictions for specific decades (2009, 2019, 2029, and 2099). For example, I made 147 predictions for 2009 in The Age of Spiritual Machines, which I wrote in the 1990s. Of these, 115 (78 percent) are entirely correct as of the end of 2009; the predictions that were concerned with basic measurements of the capacity and price/performance of information technologies were particularly accurate. Another 12 (8 percent) are “essentially correct.” A total of 127 predictions (86 percent) are correct or essentially correct. (Since the predictions were made specific to a given decade, a prediction for 2009 was considered “essentially correct” if it came true in 2010 or 2011.) Another 17 (12 percent) are partially correct, and 3 (2 percent) are wrong.
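As a quick arithmetic check on this tally, the short sketch below recomputes the percentages from the counts reported above. The counts come from the text; the names `counts` and `tally` are illustrative only, and rounding to whole percentages is an assumption about how the figures were reported.

```python
# Counts reported in the text for the 147 predictions made for 2009.
counts = {
    "entirely correct": 115,
    "essentially correct": 12,
    "partially correct": 17,
    "wrong": 3,
}


def tally(counts):
    """Return the total and each category's share as a whole-number percentage."""
    total = sum(counts.values())
    shares = {label: round(100 * n / total) for label, n in counts.items()}
    return total, shares


total, shares = tally(counts)

# "Correct or essentially correct" combines the first two categories.
combined = counts["entirely correct"] + counts["essentially correct"]
combined_share = round(100 * combined / total)

for label, pct in shares.items():
    print(f"{label}: {counts[label]} of {total} ({pct} percent)")
print(f"correct or essentially correct: {combined} of {total} ({combined_share} percent)")
```

The four categories sum to 147, and the rounded shares match the 78, 8, 12, and 2 percent quoted in the text, with the combined figure coming to 86 percent.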

Calculations per second per (constant) thousand dollars of different computing devices. 10

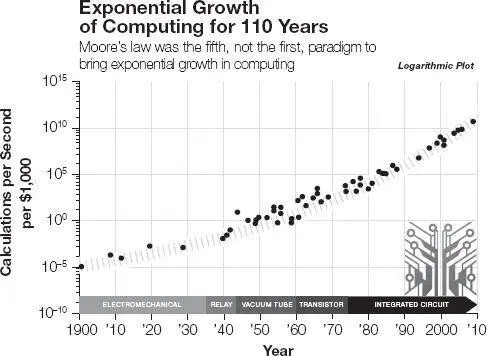

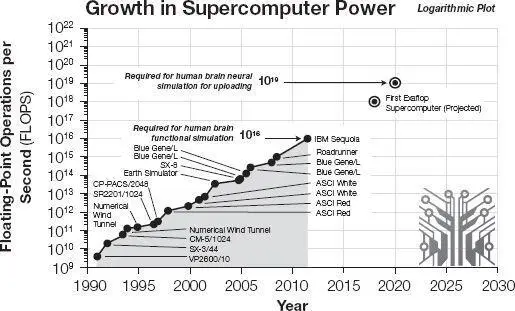

Floating-point operations per second of different supercomputers. 11

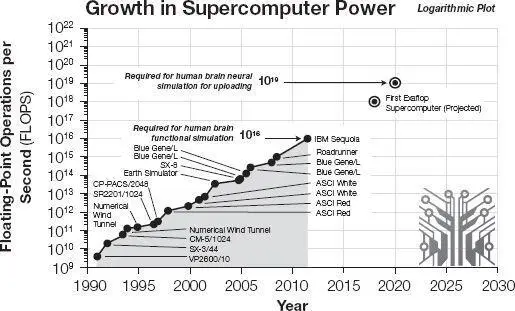

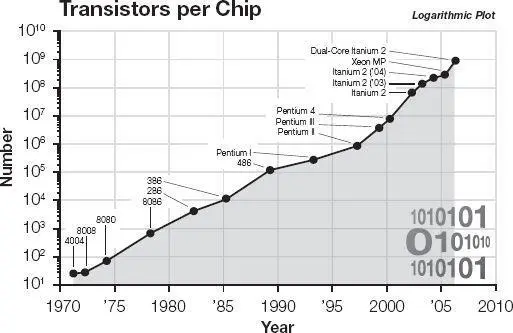

Transistors per chip for different Intel processors. 12

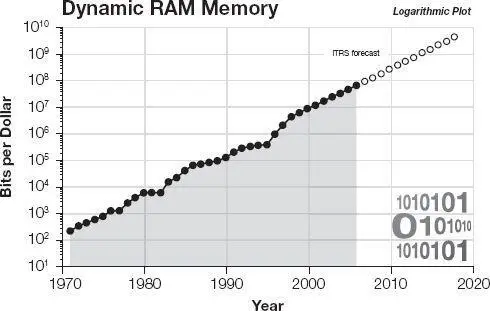

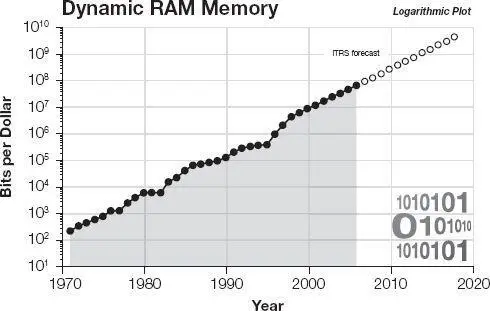

Bits per dollar for dynamic random access memory chips. 13

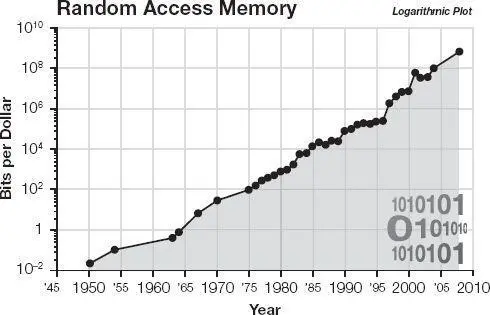

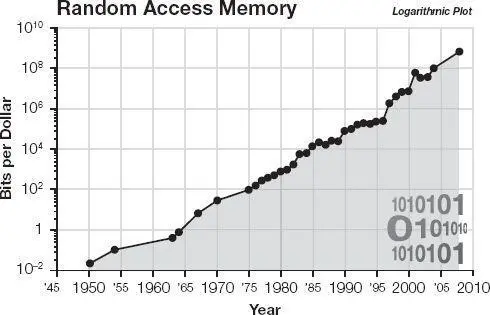

Bits per dollar for random access memory chips. 14

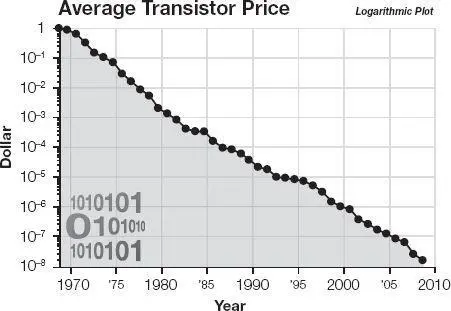

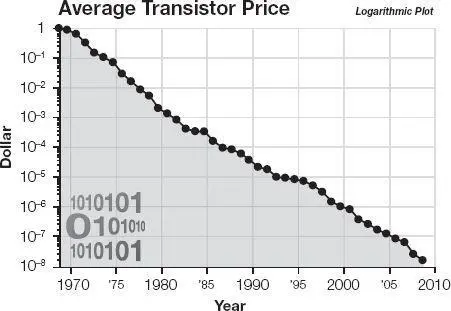

The average price per transistor in dollars. 15

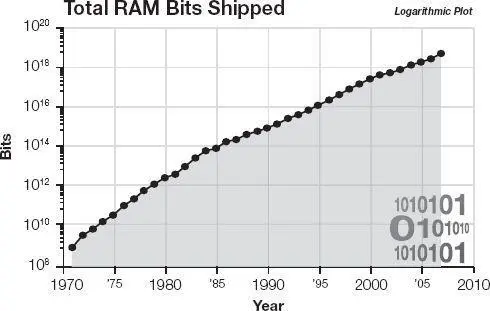

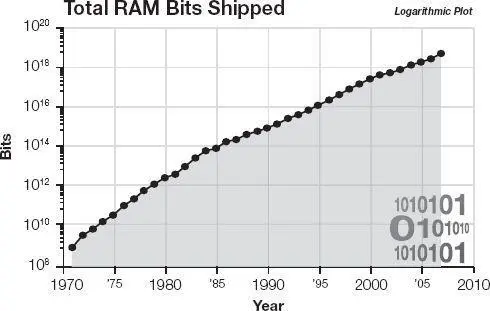

The total number of bits of random access memory shipped each year. 16

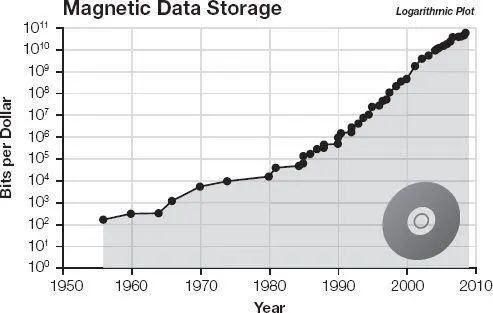

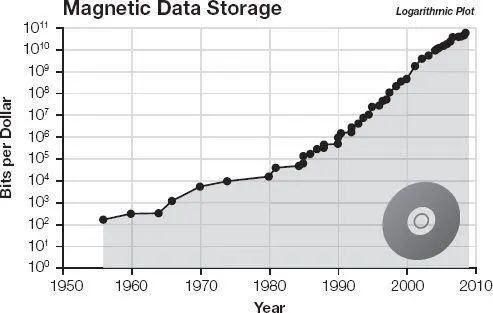

Bits per dollar (in constant 2000 dollars) for magnetic data storage. 17

Even the predictions that were “wrong” were not all wrong. For example, I judged my prediction that we would have self-driving cars to be wrong, even though Google has demonstrated self-driving cars, and even though in October 2010 four driverless electric vans successfully concluded a 13,000-kilometer test drive from Italy to China. 18 Experts in the field currently predict that these technologies will be routinely available to consumers by the end of this decade.

Exponentially expanding computational and communication technologies all contribute to the project to understand and re-create the methods of the human brain. This effort is not a single organized project but rather the result of a great many diverse projects, including detailed modeling of constituents of the brain ranging from individual neurons to the entire neocortex, the mapping of the “connectome” (the neural connections in the brain), simulations of brain regions, and many others. All of these have been scaling up exponentially. Much of the evidence presented in this book has only become available recently—for example, the 2012 Wedeen study discussed in chapter 4 that showed the very orderly and “simple” (to quote the researchers) gridlike pattern of the connections in the neocortex. The researchers in that study acknowledge that their insight (and images) only became feasible as the result of new high-resolution imaging technology.

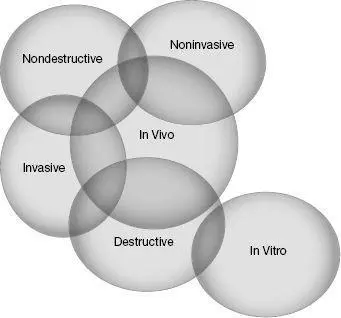

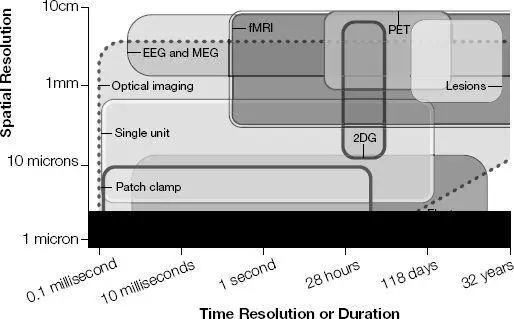

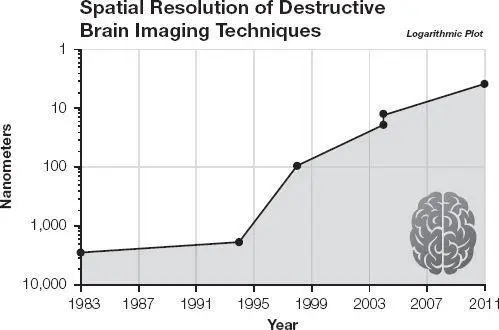

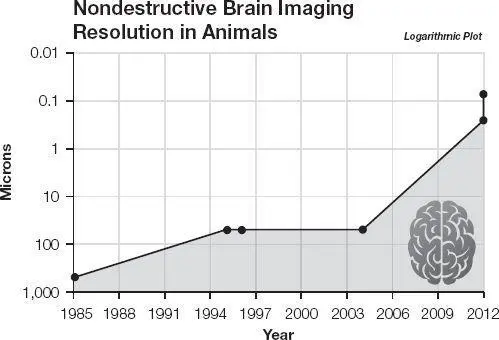

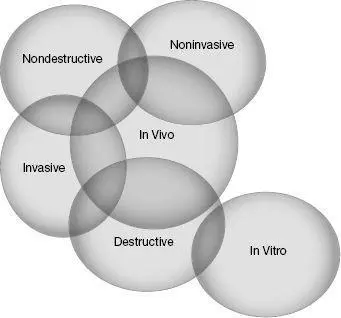

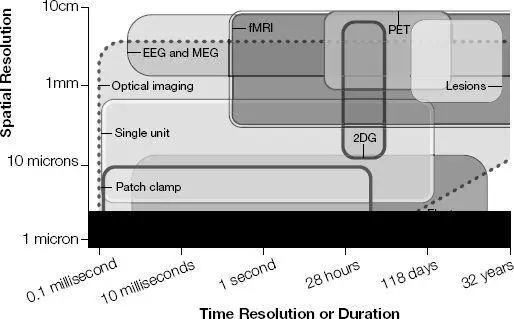

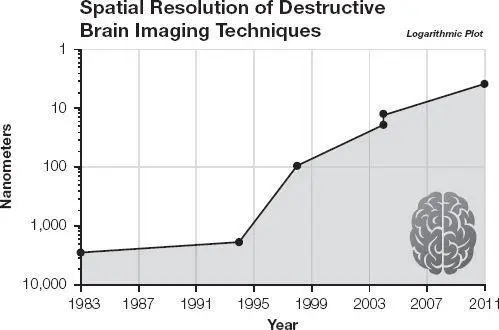

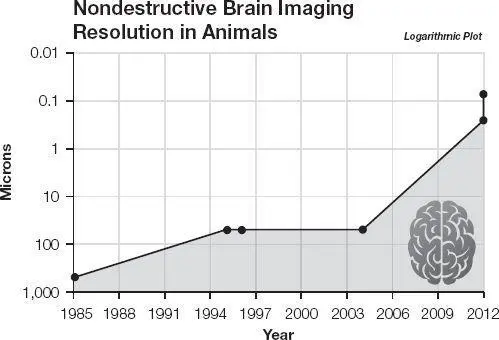

Brain scanning technologies are improving in resolution, spatial and temporal, at an exponential rate. Different types of brain scanning methods being pursued range from completely noninvasive methods that can be used with humans to more invasive or destructive methods on animals.

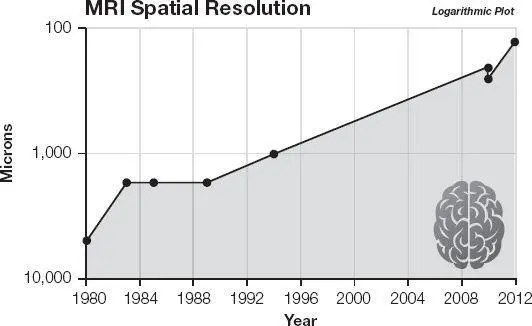

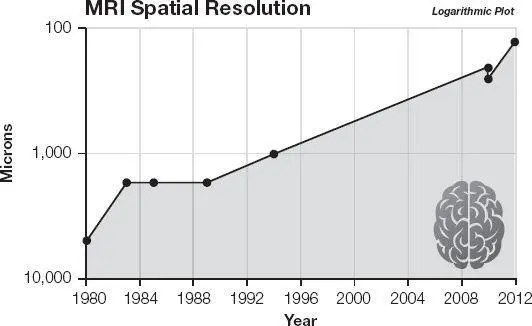

MRI (magnetic resonance imaging), a noninvasive imaging technique with relatively high temporal resolution, has steadily improved at an exponential pace, to the point that spatial resolutions are now close to 100 microns (millionths of a meter).

A Venn diagram of brain imaging methods. 19

Tools for imaging the brain. 20

MRI spatial resolution in microns. 21

Spatial resolution of destructive imaging techniques. 22

Spatial resolution of nondestructive imaging techniques in animals. 23

Destructive imaging, which is performed to collect the connectome (map of all interneuronal connections) in animal brains, has also improved at an exponential pace. Current maximum resolution is around four nanometers, which is sufficient to see individual connections.

Artificial intelligence technologies such as natural-language-understanding systems are not necessarily designed to emulate theorized principles of brain function, but rather for maximum effectiveness. Given this, it is notable that the techniques that have won out are consistent with the principles I have outlined in this book: self-organizing, hierarchical recognizers of invariant self-associative patterns with redundancy and up-and-down predictions. These systems are also scaling up exponentially, as Watson has demonstrated.

A primary purpose of understanding the brain is to expand our toolkit of techniques to create intelligent systems. Although many AI researchers may not fully appreciate this, they have already been deeply influenced by our knowledge of the principles of the operation of the brain. Understanding the brain also helps us to reverse brain dysfunctions of various kinds. There is, of course, another key goal of the project to reverse-engineer the brain: understanding who we are.
