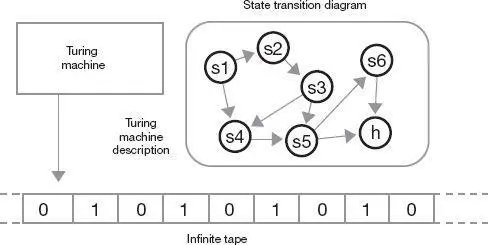

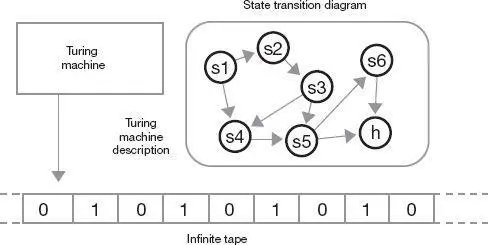

The input to the Turing machine is presented on the tape. The program runs, and when the machine halts, it has completed its algorithm, and the output of the process is left on the tape. Note that even though the tape is theoretically infinite in length, any actual program that does not get into an infinite loop will use only a finite portion of the tape, so if we limit ourselves to a finite tape, the machine will still solve a useful set of problems.

If the Turing machine sounds simple, it is because that was its inventor’s objective. Turing wanted his machine to be as simple as possible (but no simpler, to paraphrase Einstein). Turing and Alonzo Church (1903–1995), his former professor, went on to develop the Church-Turing thesis, which states that if a problem that can be presented to a Turing machine is not solvable by it, it is also not solvable by any machine, following natural law. Even though the Turing machine has only a handful of commands and processes only one bit at a time, it can compute anything that any computer can compute. Another way to say this is that any machine that is “Turing complete” (that is, that has equivalent capabilities to a Turing machine) can compute any algorithm (any procedure that we can define).

A block diagram of a Turing machine with a head that reads and writes the tape and an internal program consisting of state transitions.

“Strong” interpretations of the Church-Turing thesis propose an essential equivalence between what a human can think or know and what is computable by a machine. The basic idea is that the human brain is likewise subject to natural law, and thus its information-processing ability cannot exceed that of a machine (and therefore of a Turing machine).

We can properly credit Turing with establishing the theoretical foundation of computation with his 1936 paper, but it is important to note that he was deeply influenced by a lecture that Hungarian American mathematician John von Neumann (1903–1957) gave in Cambridge in 1935 on his stored program concept, a concept enshrined in the Turing machine. 1 In turn, von Neumann was influenced by Turing’s 1936 paper, which elegantly laid out the principles of computation, and made it required reading for his colleagues in the late 1930s and early 1940s. 2

In the same paper Turing reports another unexpected discovery: that of unsolvable problems. These are problems that are well defined with unique answers that can be shown to exist, but that we can also prove can never be computed by any Turing machine—that is to say, by any machine, a reversal of what had been a nineteenth-century dogma that problems that could be defined would ultimately be solved. Turing showed that there are as many unsolvable problems as solvable ones. Austrian American mathematician and philosopher Kurt Gödel reached a similar conclusion in his 1931 “incompleteness theorem.” We are thus left with the perplexing situation of being able to define a problem, to prove that a unique answer exists, and yet know that the answer can never be found.

Turing had shown that at its essence, computation is based on a very simple mechanism. Because the Turing machine (and therefore any computer) is capable of basing its future course of action on results it has already computed, it is capable of making decisions and modeling arbitrarily complex hierarchies of information.

In 1939 Turing designed an electronic calculator called Bombe that helped decode messages that had been encrypted by the Nazi Enigma coding machine. By 1943, an engineering team influenced by Turing completed what is arguably the first computer, the Colossus, that enabled the Allies to continue decoding messages from more sophisticated versions of Enigma. The Bombe and Colossus were designed for a single task and could not be reprogrammed for a different one. But they performed this task brilliantly and are credited with having enabled the Allies to overcome the three-to-one advantage that the German Luftwaffe enjoyed over the British Royal Air Force and win the crucial Battle of Britain, as well as to continue anticipating Nazi tactics throughout the war.

It was on these foundations that John von Neumann created the architecture of the modern computer, which represents our third major idea. Called the von Neumann machine, it has remained the core structure of essentially every computer for the past sixty-seven years, from the microcontroller in your washing machine to the largest supercomputers. In a paper dated June 30, 1945, and titled “First Draft of a Report on the EDVAC,” von Neumann presented the ideas that have dominated computation ever since. 3 The von Neumann model includes a central processing unit, where arithmetical and logical operations are carried out; a memory unit, where the program and data are stored; mass storage; a program counter; and input/output channels. Although this paper was intended as an internal project document, it has become the bible for computer designers. You never know when a seemingly routine internal memo will end up revolutionizing the world.

The Turing machine was not designed to be practical. Turing’s theorems were concerned not with the efficiency of solving problems but rather in examining the range of problems that could in theory be solved by computation. Von Neumann’s goal, on the other hand, was to create a feasible concept of a computational machine. His model replaces Turing’s one-bit computations with multiple-bit words (generally some multiple of eight bits). Turing’s memory tape is sequential, so Turing machine programs spend an inordinate amount of time moving the tape back and forth to store and retrieve intermediate results. In contrast, von Neumann’s memory is random access, so that any data item can be immediately retrieved.

One of von Neumann’s key ideas is the stored program, which he had introduced a decade earlier: placing the program in the same type of random access memory as the data (and often in the same block of memory). This allows the computer to be reprogrammed for different tasks as well as for self-modifying code (if the program store is writable), which enables a powerful form of recursion. Up until that time, virtually all computers, including the Colossus, were built for a specific task. The stored program makes it possible for a computer to be truly universal, thereby fulfilling Turing’s vision of the universality of computation.

Another key aspect of the von Neumann machine is that each instruction includes an operation code specifying the arithmetic or logical operation to be performed and the address of an operand from memory.

Von Neumann’s concept of how a computer should be architected was introduced with his publication of the design of the EDVAC, a project he conducted with collaborators J. Presper Eckert and John Mauchly. The EDVAC itself did not actually run until 1951, by which time there were other stored-program computers, such as the Manchester Small-Scale Experimental Machine, ENIAC, EDSAC, and BINAC, all of which had been deeply influenced by von Neumann’s paper and involved Eckert and Mauchly as designers. Von Neumann was a direct contributor to the design of a number of these machines, including a later version of ENIAC, which supported a stored program.

There were a few precursors to von Neumann’s architecture, although with one surprising exception, none are true von Neumann machines. In 1944 Howard Aiken introduced the Mark I, which had an element of programmability but did not use a stored program. It read instructions from a punched paper tape and then executed each command immediately. It also lacked a conditional branch instruction.

Читать дальше