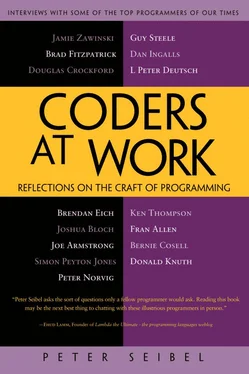

Peter Seibel - Coders at Work - Reflections on the craft of programming

Здесь есть возможность читать онлайн «Peter Seibel - Coders at Work - Reflections on the craft of programming» весь текст электронной книги совершенно бесплатно (целиком полную версию без сокращений). В некоторых случаях можно слушать аудио, скачать через торрент в формате fb2 и присутствует краткое содержание. Жанр: Программирование, на английском языке. Описание произведения, (предисловие) а так же отзывы посетителей доступны на портале библиотеки ЛибКат.

- Название:Coders at Work: Reflections on the craft of programming

- Автор:

- Жанр:

- Год:неизвестен

- ISBN:нет данных

- Рейтинг книги:3 / 5. Голосов: 1

-

Избранное:Добавить в избранное

- Отзывы:

-

Ваша оценка:

- 60

- 1

- 2

- 3

- 4

- 5

Coders at Work: Reflections on the craft of programming: краткое содержание, описание и аннотация

Предлагаем к чтению аннотацию, описание, краткое содержание или предисловие (зависит от того, что написал сам автор книги «Coders at Work: Reflections on the craft of programming»). Если вы не нашли необходимую информацию о книге — напишите в комментариях, мы постараемся отыскать её.

Coders at Work

Founders at Work

Coders at Work: Reflections on the craft of programming — читать онлайн бесплатно полную книгу (весь текст) целиком

Ниже представлен текст книги, разбитый по страницам. Система сохранения места последней прочитанной страницы, позволяет с удобством читать онлайн бесплатно книгу «Coders at Work: Reflections on the craft of programming», без необходимости каждый раз заново искать на чём Вы остановились. Поставьте закладку, и сможете в любой момент перейти на страницу, на которой закончили чтение.

Интервал:

Закладка:

Lazy evaluation lets you write generators—his example is generate all the possible moves in your chess game—separately from your consumer, which walks over the tree and does alpha-beta minimaxing or something. Or if you’re generating all the sequence of approximations of an answer, then you have a consumer who says when to stop. It turns out that by separating generators from consumers you can modularly decompose your program. Whereas, if you’re having to generate it along with a consumer that’s saying when to stop, that can make your program much less modular. Modular in the sense of separate thoughts in separate places that can be composed together. John’s paper gives some nice examples of ways in which you can change the consumer or change the generator, independently from each other, and that lets you plug together new programs that would have been more difficult to get by modifying one tightly interwoven one.

So that’s all about why laziness is a good thing. It’s also very helpful in a very local level in your program. You tend to find Haskell programmers will write down a function definition with some local definitions. So they’ll say “f of x equals blah, blah, blah where …” And in the whereclause they write down a bunch of definitions and of these definitions, not all are needed in all cases. But you just write them down anyway. The ones that are needed will get evaluated; the ones that aren’t needed won’t. So you don’t have to think, “Oh, goodness, all of these sub expressions are going to be evaluated but I can’t evaluate that because that would crash because of a divide by zero so I have to move the definition into the right branch of the conditional.”

There’s none of that. You tend to just write down auxiliary definitions that might be needed and the ones that are needed will be evaluated. So that’s a kind of programming convenience thing. It’s a very, very convenient mechanism.

But getting back to the big picture, if you have a lazy evaluator, it’s harder to predict exactly when an expression is going to be evaluated. So that means if you want to print something on the screen, every call-by-value language, where the order of evaluation is completely explicit, does that by having an impure “function”—I’m putting quotes around it because it now isn’t a function at all—with type something like string to unit. You call this function and as a side effect it puts something on the screen. That’s what happens in Lisp; it also happens in ML. It happens in essentially every call-by-value language.

Now in a pure language, if you have a function from string to unit you would never need to call it because you know that it just gives the answer unit. That’s all a function can do, is give you the answer. And you know what the answer is. But of course if it has side effects, it’s very important that you do call it. In a lazy language the trouble is if you say, “ fapplied to print "hello",” then whether fevaluates its first argument is not apparent to the caller of the function. It’s something to do with the innards of the function. And if you pass it two arguments, fof print "hello"and print "goodbye", then you might print either or both in either order or neither. So somehow, with lazy evaluation, doing input/output by side effect just isn’t feasible. You can’t write sensible, reliable, predictable programs that way. So, we had to put up with that. It was a bit embarrassing really because you couldn’t really do any input/output to speak of. So for a long time we essentially had programs which could just take a string to a string. That was what the whole program did. The input string was the input and result string was the output and that’s all the program could really ever do.

You could get a bit clever by making the output string encode some output commands that were interpreted by some outer interpreter. So the output string might say, “Print this on the screen; put that on the disk.” An interpreter could actually do that. So you imagine the functional program is all nice and pure and there’s sort of this evil interpreter that interprets a string of commands. But then, of course, if you read a file, how do you get the input back into the program? Well, that’s not a problem, because you can output a string of commands that are interpreted by the evil interpreter and using lazy evaluation, it can dump the results back into the input of the program. So the program now takes a stream of responses to a stream of requests. The stream of requests go to the evil interpreter that does the things to the world. Each request generates a response that’s then fed back to the input. And because evaluation is lazy, the program has emitted a response just in time for it to come round the loop and be consumed as an input. But it was a bit fragile because if you consumed your response a bit too eagerly, then you get some kind of deadlock. Because you’d be asking for the answer to a question you hadn’t yet spat out of your back end yet.

The point of this is laziness drove us into a corner in which we had to think of ways around this I/O problem. I think that that was extremely important. The single most important thing about laziness was it drove us there. But that wasn’t the way it started. Where it started was, laziness is cool; what a great programming idiom.

Seibel:Since you started programming, what’s changed about how you think about programming?

Peyton Jones:I think probably the big changes in how I think about programming have been to do with monads and type systems. Compared to the early 80s, thinking about purely functional programming with relatively simple type systems, now I think about a mixture of purely functional, imperative, and concurrent programming mediated by monads. And the types have become a lot more sophisticated, allowing you to express a much wider range of programs than I think, at that stage, I’d envisaged. You can view both of those as somewhat evolutionary, I suppose.

Seibel:For instance, since your first abortive attempt at writing a compiler you’ve written lots of compilers. You must have learned some things about how to do that that enable you to do it successfully now.

Peyton Jones:Yes. Well, lots of things. Of course that was a compiler for an imperative language written in an imperative language. Now I’m writing a compiler for a functional language in a functional language. But a big feature of GHC, our compiler for Haskell, is that the intermediate language it uses is itself typed.

Seibel:And is the typing on the intermediate representation just carrying through the typing from the original source?

Peyton Jones:It is, but it’s much more explicit. In the original source, lots of type inference is going on and the source language is carefully crafted so that type inference is possible. In the intermediate language, the type system is much more general, much more expressive because it’s more explicit: every function argument is decorated with its type. There’s no type inference , there’s just type checking for the intermediate language. So it’s an explicitly typed language whereas the source language is implicitly typed.

Type inference is based on a carefully chosen set of rules that make sure that it just fits within what the type inference engine can figure out. If you transform the program by a source-to-source transformation, maybe you’ve now moved outside that boundary. Type inference can’t reach it any more. So that’s bad for an optimization. You don’t want optimizations to have to worry about whether you might have just gone out of the boundaries of type inference.

Читать дальшеИнтервал:

Закладка:

Похожие книги на «Coders at Work: Reflections on the craft of programming»

Представляем Вашему вниманию похожие книги на «Coders at Work: Reflections on the craft of programming» списком для выбора. Мы отобрали схожую по названию и смыслу литературу в надежде предоставить читателям больше вариантов отыскать новые, интересные, ещё непрочитанные произведения.

Обсуждение, отзывы о книге «Coders at Work: Reflections on the craft of programming» и просто собственные мнения читателей. Оставьте ваши комментарии, напишите, что Вы думаете о произведении, его смысле или главных героях. Укажите что конкретно понравилось, а что нет, и почему Вы так считаете.