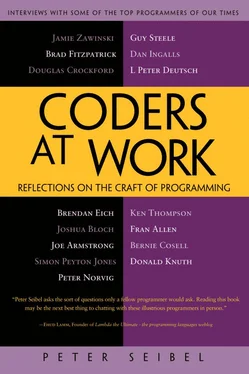

Peter Seibel - Coders at Work - Reflections on the craft of programming

Здесь есть возможность читать онлайн «Peter Seibel - Coders at Work - Reflections on the craft of programming» весь текст электронной книги совершенно бесплатно (целиком полную версию без сокращений). В некоторых случаях можно слушать аудио, скачать через торрент в формате fb2 и присутствует краткое содержание. Жанр: Программирование, на английском языке. Описание произведения, (предисловие) а так же отзывы посетителей доступны на портале библиотеки ЛибКат.

- Название:Coders at Work: Reflections on the craft of programming

- Автор:

- Жанр:

- Год:неизвестен

- ISBN:нет данных

- Рейтинг книги:3 / 5. Голосов: 1

-

Избранное:Добавить в избранное

- Отзывы:

-

Ваша оценка:

- 60

- 1

- 2

- 3

- 4

- 5

Coders at Work: Reflections on the craft of programming: краткое содержание, описание и аннотация

Предлагаем к чтению аннотацию, описание, краткое содержание или предисловие (зависит от того, что написал сам автор книги «Coders at Work: Reflections on the craft of programming»). Если вы не нашли необходимую информацию о книге — напишите в комментариях, мы постараемся отыскать её.

Coders at Work

Founders at Work

Coders at Work: Reflections on the craft of programming — читать онлайн бесплатно полную книгу (весь текст) целиком

Ниже представлен текст книги, разбитый по страницам. Система сохранения места последней прочитанной страницы, позволяет с удобством читать онлайн бесплатно книгу «Coders at Work: Reflections on the craft of programming», без необходимости каждый раз заново искать на чём Вы остановились. Поставьте закладку, и сможете в любой момент перейти на страницу, на которой закончили чтение.

Интервал:

Закладка:

Seibel:You mentioned that when you and Robert Virding were passing the code back and forth how each of you changed the low-level details of formatting, stuff that programmers argue endlessly about.

Armstrong:That’s not affecting the beauty of the algorithm.

Seibel:But it’s part of the aesthetic. It’s people’s taste.

Armstrong:Yeah. But I wouldn’t say, “This is ugly code because there’s a blank after the comma.” Ugly is when it’s done with a linear search and it could have been done with a binary interval halving. Or it could have done logarithmically and it’s done linearly. For the wrong reasons. Sure do it linearly if we know we’re searching through a list of ten elements, who cares? But if it’s a big data structure then it should have been done with a binary search. And so it’s really not very pretty to do it in a linear form. The mathematical algorithms—that’s like Platonic beauty. This is more like architecture. You admire a fine building—it’s not a mathematical object. Not a solid or a sphere or a prism—it’s a skyscraper. It looks nice.

Seibel:What makes a good programmer? If you are hiring programmers—what do you look for?

Armstrong:Choice of problem, I think. Are you driven by the problems or by the solutions? I tend to favor the people who say, “I’ve got this really interesting problem.” Then you ask, “What was the most fun project you ever wrote; show me the code for this stuff. How would you solve this problem?” I’m not so hung up on what they know about language X or Y. From what I’ve seen of programmers, they’re either good at all languages or good at none. The guy who’s a good C programmer will be good at Erlang—it’s an incredibly good predictor. I have seen exceptions to that but the mental skills necessary to be good at one language seem to convert to other languages.

Seibel:Some companies are famous for using logic puzzles during interviews. Do you ask people that kind of question in interviews?

Armstrong:No. Some very good programmers are kind of slow at that kind of stuff. One of the guys who worked on Erlang, he got a PhD in math, and the only analogy I have of him, it’s like a diamond drill drilling a hole through granite. I remember he had the flu so he took the Erlang listings home. And then he came in and he wrote an atom in an Erlang program and he said, “This will put the emulator into an infinite loop.” He found the initial hash value of this atom was exactly zero and we took something mod something to get the next value which also turned out to be zero. So he reverse engineered the hash algorithm for a pathological case. He didn’t even execute the programs to see if they were going to work; he read the programs. But he didn’t do it quickly. He read them rather slowly. I don’t know how good he would have been at these quick mental things.

Seibel:Are there any other characteristics of good programmers?

Armstrong:I read somewhere, that you have to have a good memory to be a reasonable programmer. I believe that to be true.

Seibel:Bill Gates once claimed that he could still go to a blackboard and write out big chunks of the code to the BASIC that he written for the Altair, a decade or so after he had originally written it. Do you think you can remember your old code that way?

Armstrong:Yeah. Well, I could reconstruct something. Sometimes I’ve just completely lost some old code and it doesn’t worry me in the slightest. I haven’t got a listing or anything; just type it in again. It would be logically equivalent. Some of the variable names would change and the ordering of the functions in the file would change and the names of the functions would change. But it would be almost isomorphic. Or what I would type in would be an improved version because my brain had worked at it.

Take the pattern matching in the compiler which I wrote ten years ago. I could sit down and type that in. It would be different to the original version but it’d be an improved version if I did it from memory. Because it sort of improves itself while you’re not doing anything. But it’d probably have a pretty similar structure.

I’m not worried about losing code or anything like that. It’s these patterns in your head that you remember. Well, I can’t even say you remember them. You can do it again. It’s not so much remembering. When I say you can remember a program exactly, I don’t think that it’s actually remembering. But you can do it again. If Bill could remember the actual text, I can’t do that. But I can certainly remember the structure for quite a long time.

Seibel:Is Erlang-style message passing a silver bullet for slaying the problem of concurrent programming?

Armstrong:Oh, it’s not. It’s an improvement. It’s a lot better than shared memory programming. I think that’s the one thing Erlang has done—it has actually demonstrated that. When we first did Erlang and we went to conferences and said, “You should copy all your data.” And I think they accepted the arguments over fault tolerance—the reason you copy all your data is to make the system fault tolerant. They said, “It’ll be terribly inefficient if you do that,” and we said, “Yeah, it will but it’ll be fault tolerant.”

The thing that is surprising is that it’s more efficient in certain circumstances. What we did for the reasons of fault tolerance, turned out to be, in many circumstances, just as efficient or even more efficient than sharing.

Then we asked the question, “Why is that?” Because it increased the concurrency. When you’re sharing, you’ve got to lock your data when you access it. And you’ve forgotten about the cost of the locks. And maybe the amount of data you’re copying isn’t that big. If the amount of data you’re copying is pretty small and if you’re doing lots of updates and accesses and lots of locks, suddenly it’s not so bad to copy everything. And then on the multicores, if you’ve got the old sharing model, the locks can stop all the cores. You’ve got a thousand-core CPU and one program does a global lock—all the thousand cores have got to stop.

I’m also very skeptical about implicit parallelism. Your programming language can have parallel constructs but if it doesn’t map into hardware that’s parallel, if it’s just being emulated by your programming system, it’s not a benefit. So there are three types of hardware parallelism.

There’s pipeline parallelism—so you make a deeper pipeline in the chip so you can do things in parallel. Well, that’s once and for all when you design the chip. A normal programmer can’t do anything about the instructionlevel parallelism.

There’s data parallelism, which is not really parallelism but it has to do with cache behavior. If you want to make a C program go efficiently, if *pis on a 16-byte boundary, if you access *p, then the access to *(p + 1)is free, basically, because the cache line pulls it in. Then you need to worry about how wide the cache lines are—how many bytes do you pull in in one cache transfer? That’s data parallelism, which the programmer can use by being very careful about their structures and knowing exactly how it’s laid out in memory. Messy stuff—you don’t really want to do that.

The other source of real concurrency in the chip are multicores. There’ll be 32 cores by the end of the decade and a million cores by 2019 or whatever. So you have to take the granules of concurrency in your program and map them onto the cores of the computer. Of course that’s quite a heavyweight operation. Starting a computation on a different core and getting the answer back is itself something that takes time. So if you’re just adding two numbers together, it’s just not worth the effort—you’re spending more effort in moving it to another core and doing it and getting the answer back than you are in doing it in place.

Читать дальшеИнтервал:

Закладка:

Похожие книги на «Coders at Work: Reflections on the craft of programming»

Представляем Вашему вниманию похожие книги на «Coders at Work: Reflections on the craft of programming» списком для выбора. Мы отобрали схожую по названию и смыслу литературу в надежде предоставить читателям больше вариантов отыскать новые, интересные, ещё непрочитанные произведения.

Обсуждение, отзывы о книге «Coders at Work: Reflections on the craft of programming» и просто собственные мнения читателей. Оставьте ваши комментарии, напишите, что Вы думаете о произведении, его смысле или главных героях. Укажите что конкретно понравилось, а что нет, и почему Вы так считаете.